- A.O.V.

- ACES

- ALPHA CHANNEL

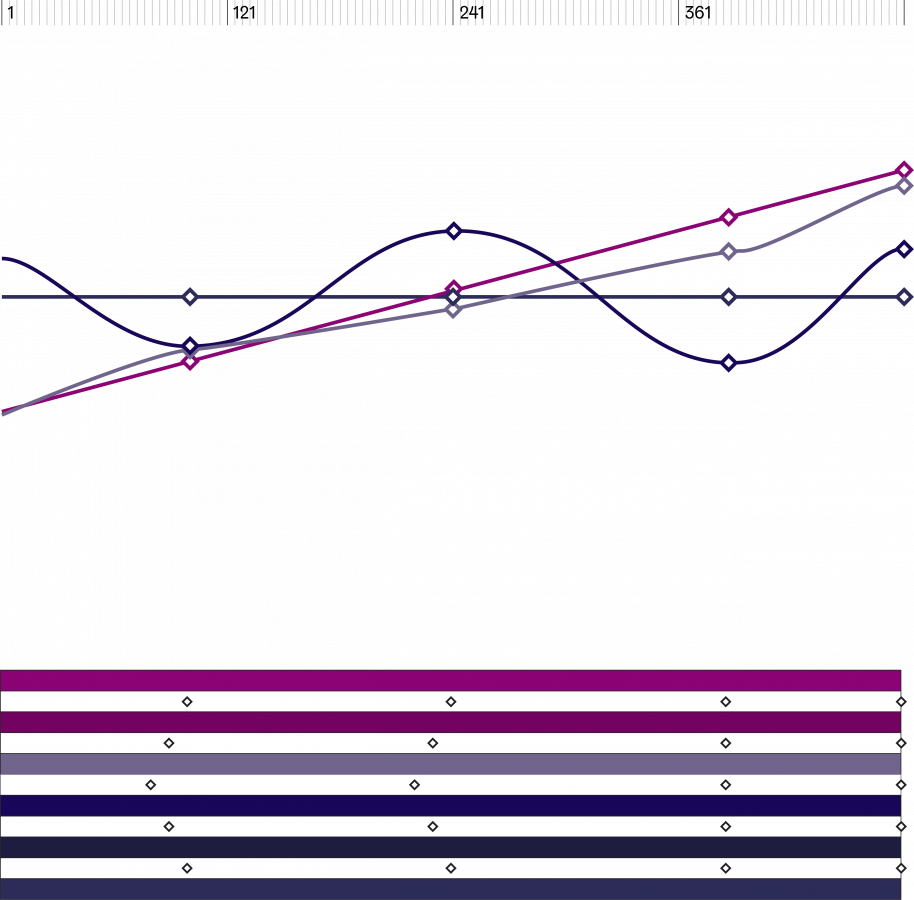

- ANIMATION

- ANTICIPATION

- APPROVAL

- ARTIFACT

- ARTIFICIAL INTELLIGENCE (A.I.)

- ASPECT RATIO

- ASSET

- AUGMENTED REALITY (A.R.)

- C.G.I.

- CHAT GPT

- CHROMA KEY

- CHROME BALL / GREY BALL

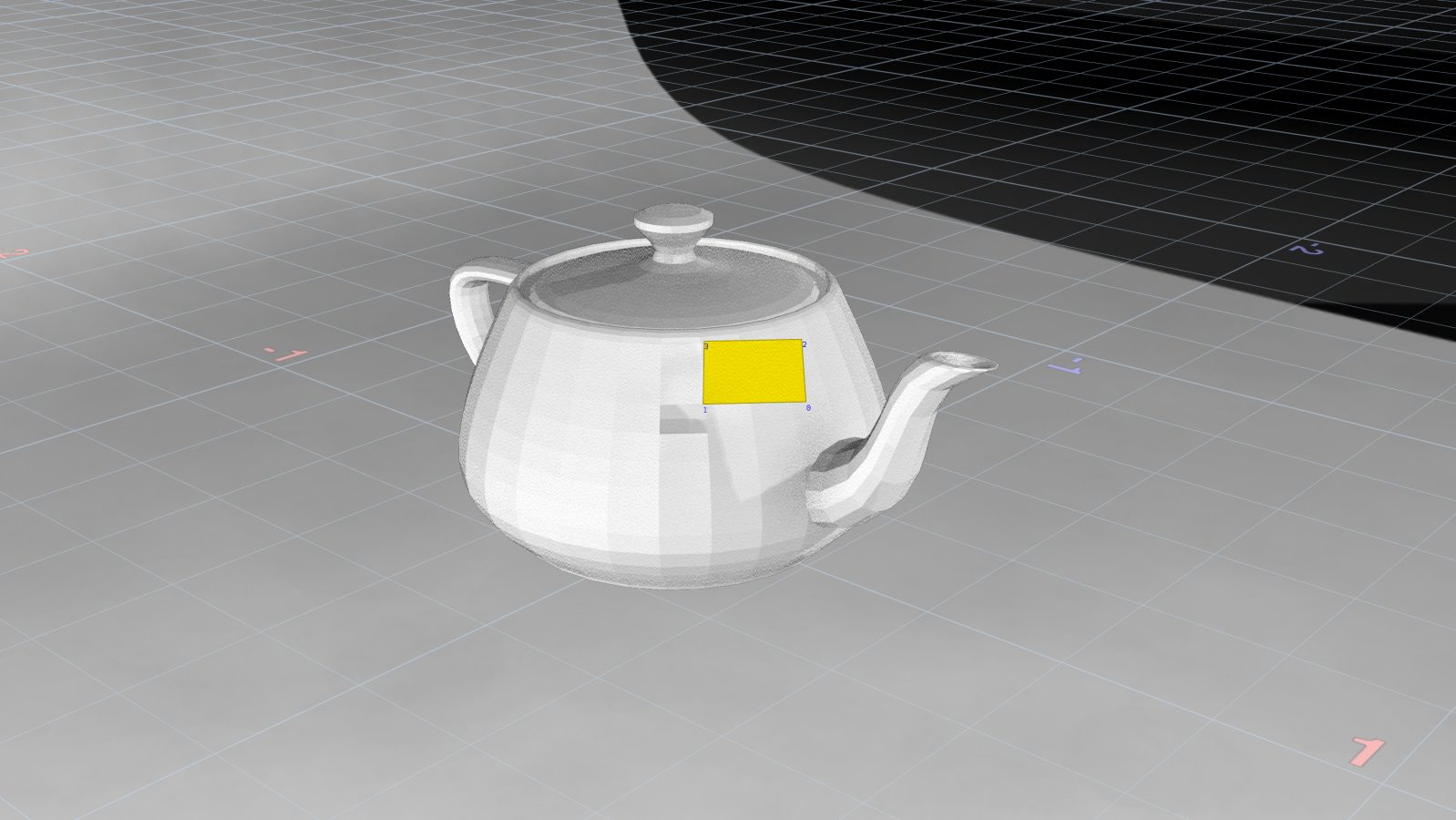

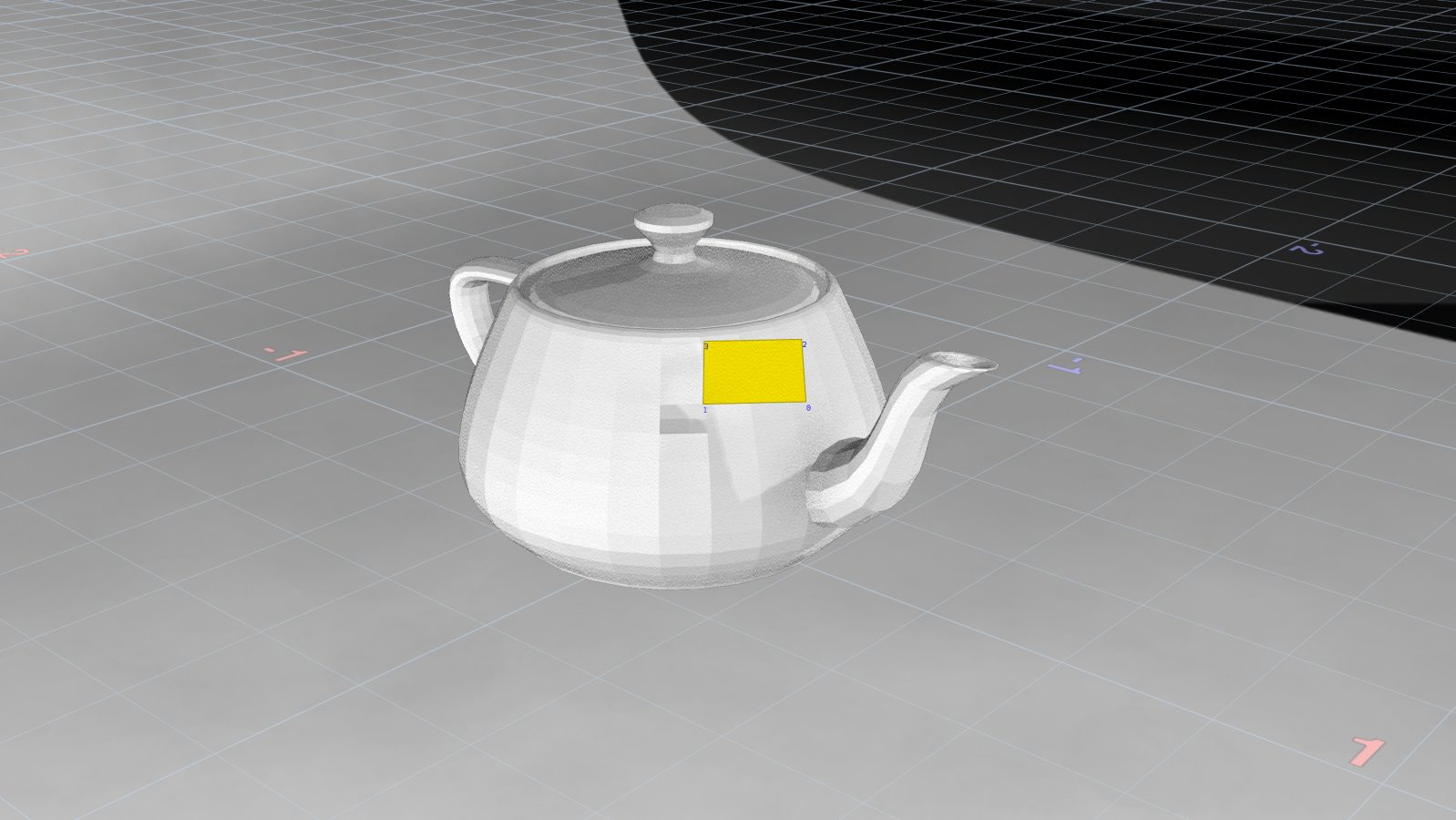

- CLAY RENDER

- CLEAN-UP

- COLOR CHART

- COMPOSITING

- CORPORATE IDENTITY (C.I.)

- CROPPING

- CUTDOWN

- DAILIES

- DATAMOSHING

- DAY FOR NIGHT

- DEEP COMPOSITING

- DEFORMER

- DELIVERY

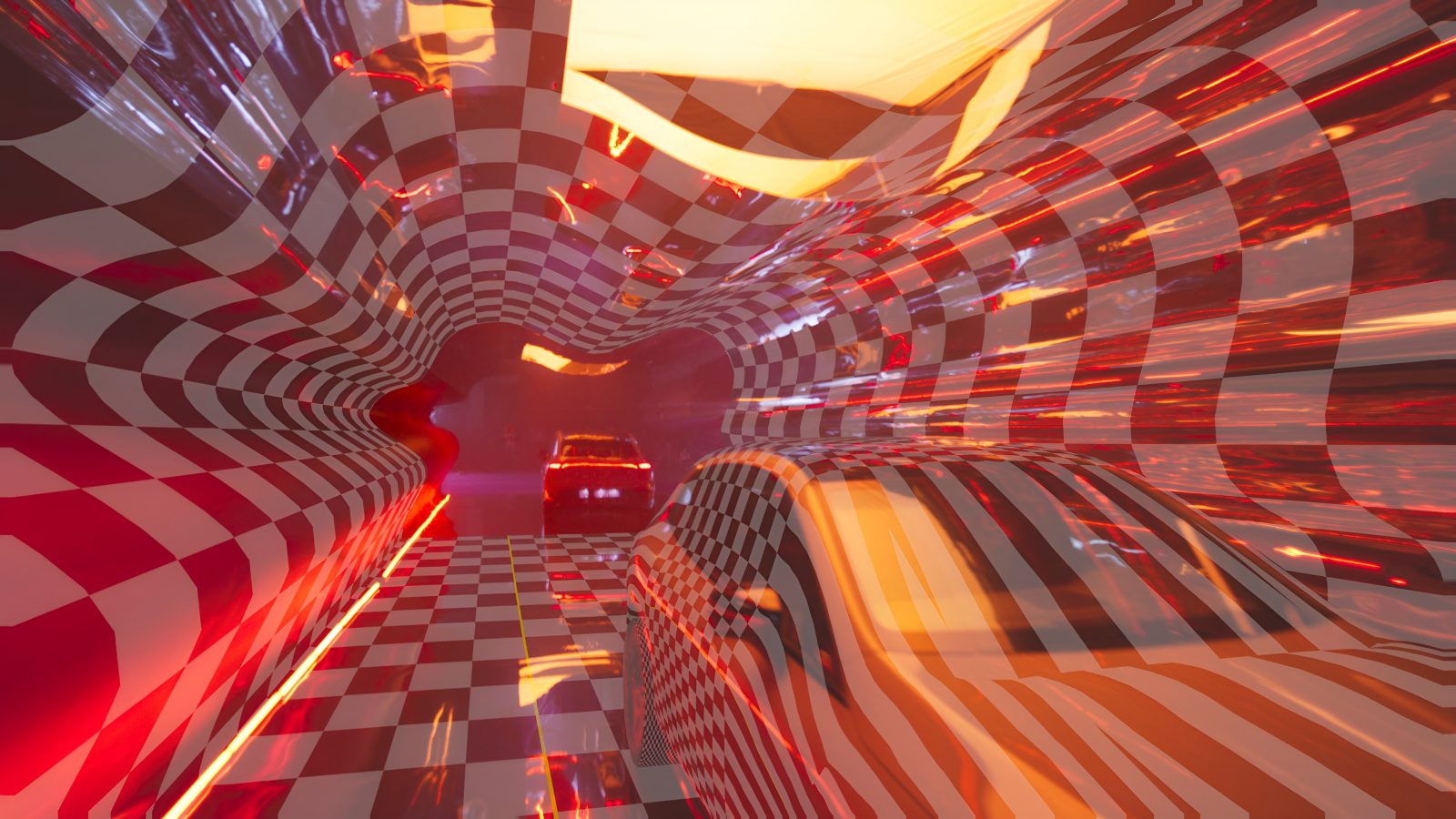

- DIRTY 3D

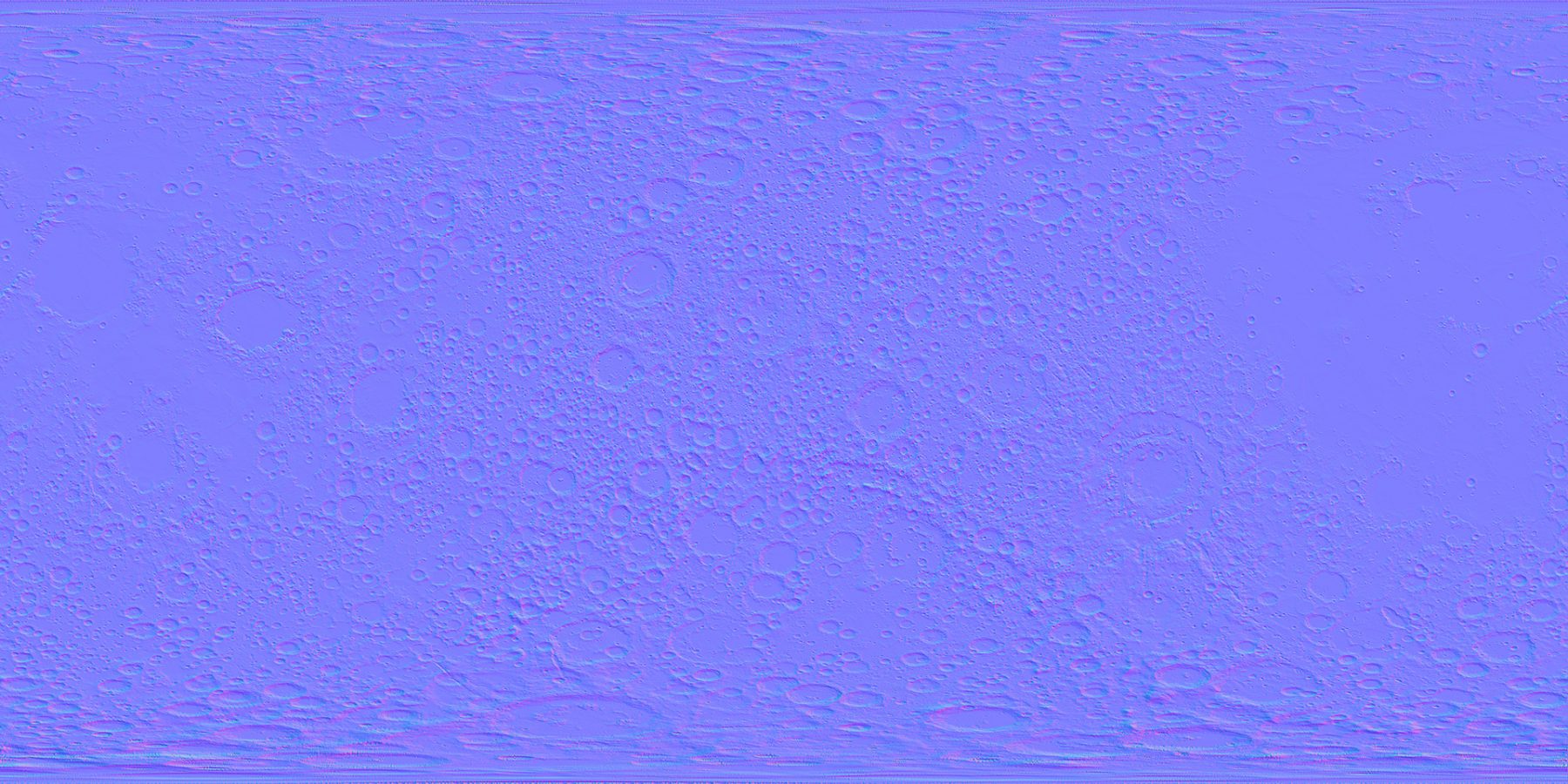

- DISPLACEMENT

- DYNAMIC FX

- DYNAMIC RANGE

- LAYER

- LAYOUT

- LENS FLARE

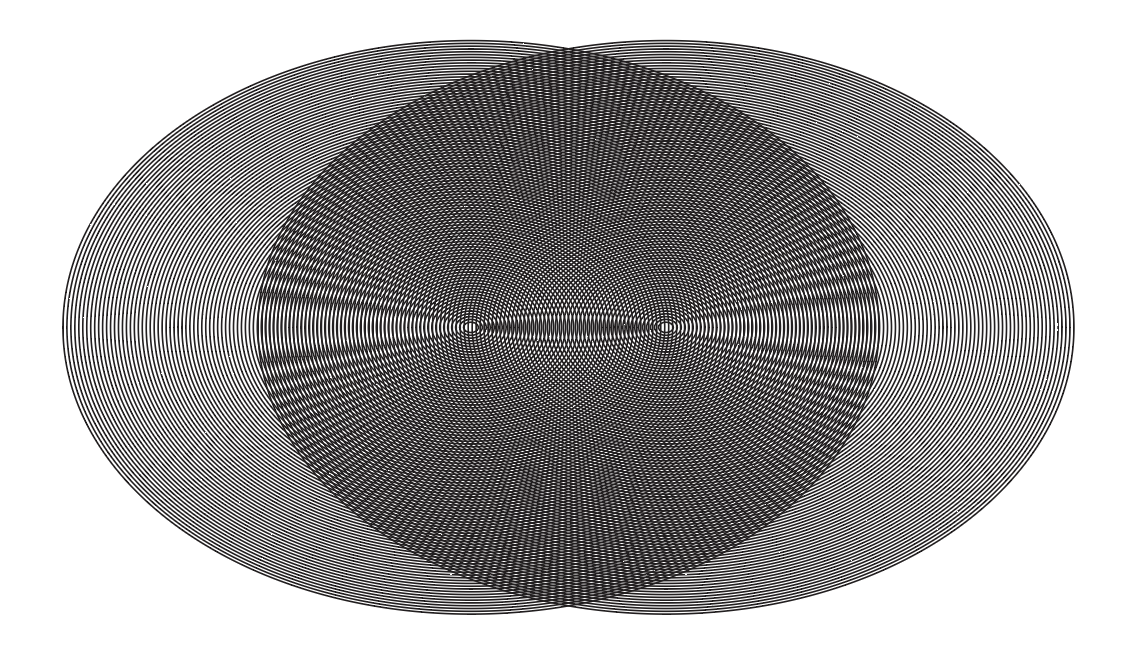

- LENS GRID

- LETTERBOX

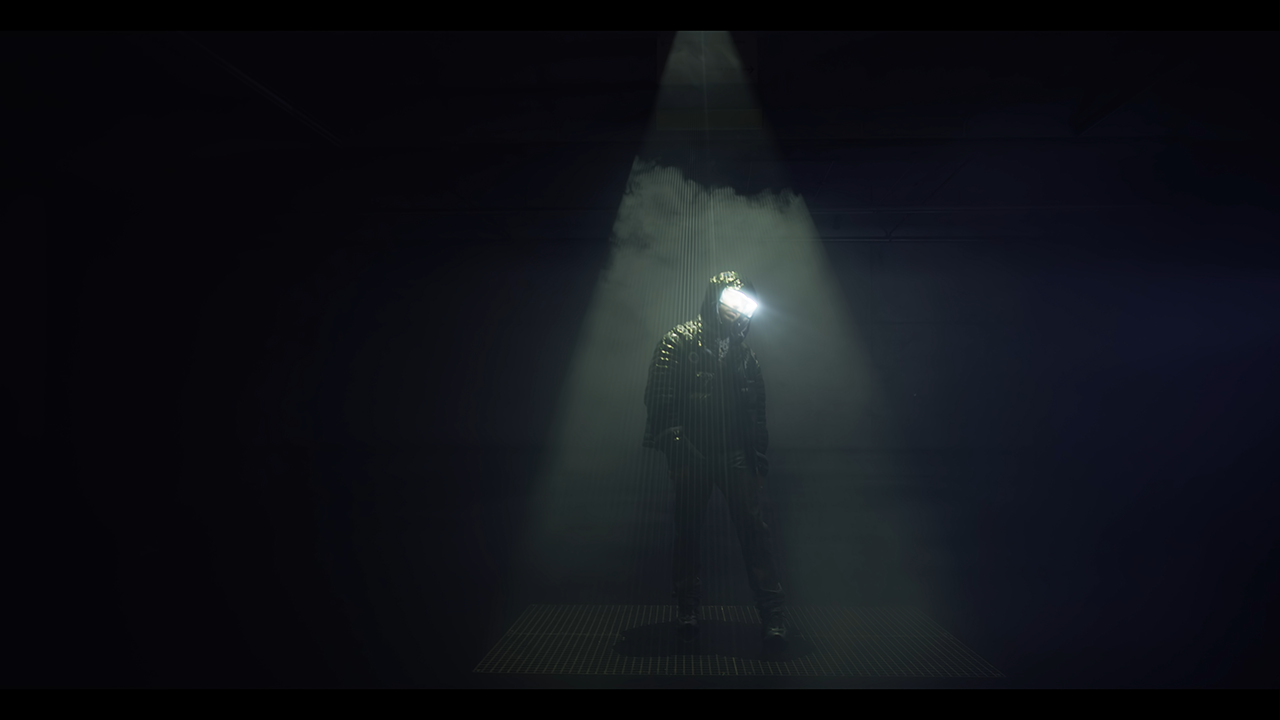

- LIGHTING

- LOCKED CAM

- LOOK DEVELOPMENT

- LOOKUP TABLES (L.U.T.)

- LUMA KEY

- MATCH MOVE

- MATTE PAINTING

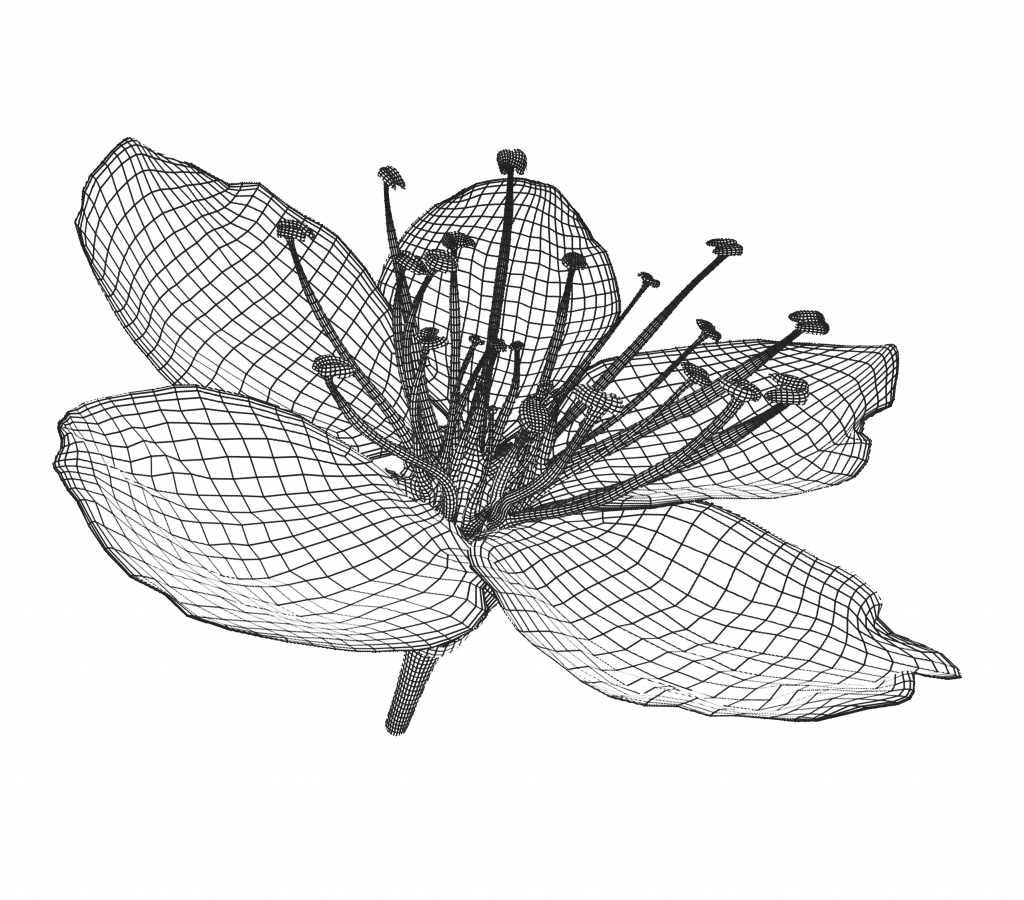

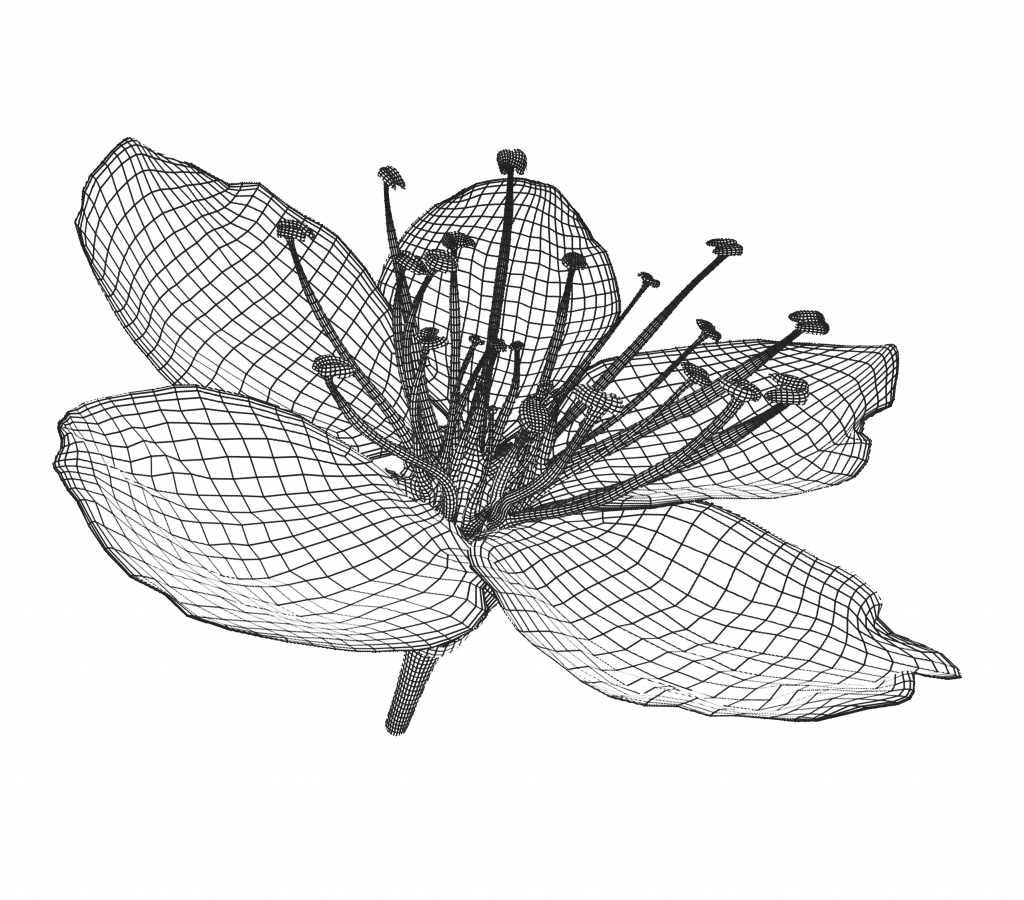

- MESH

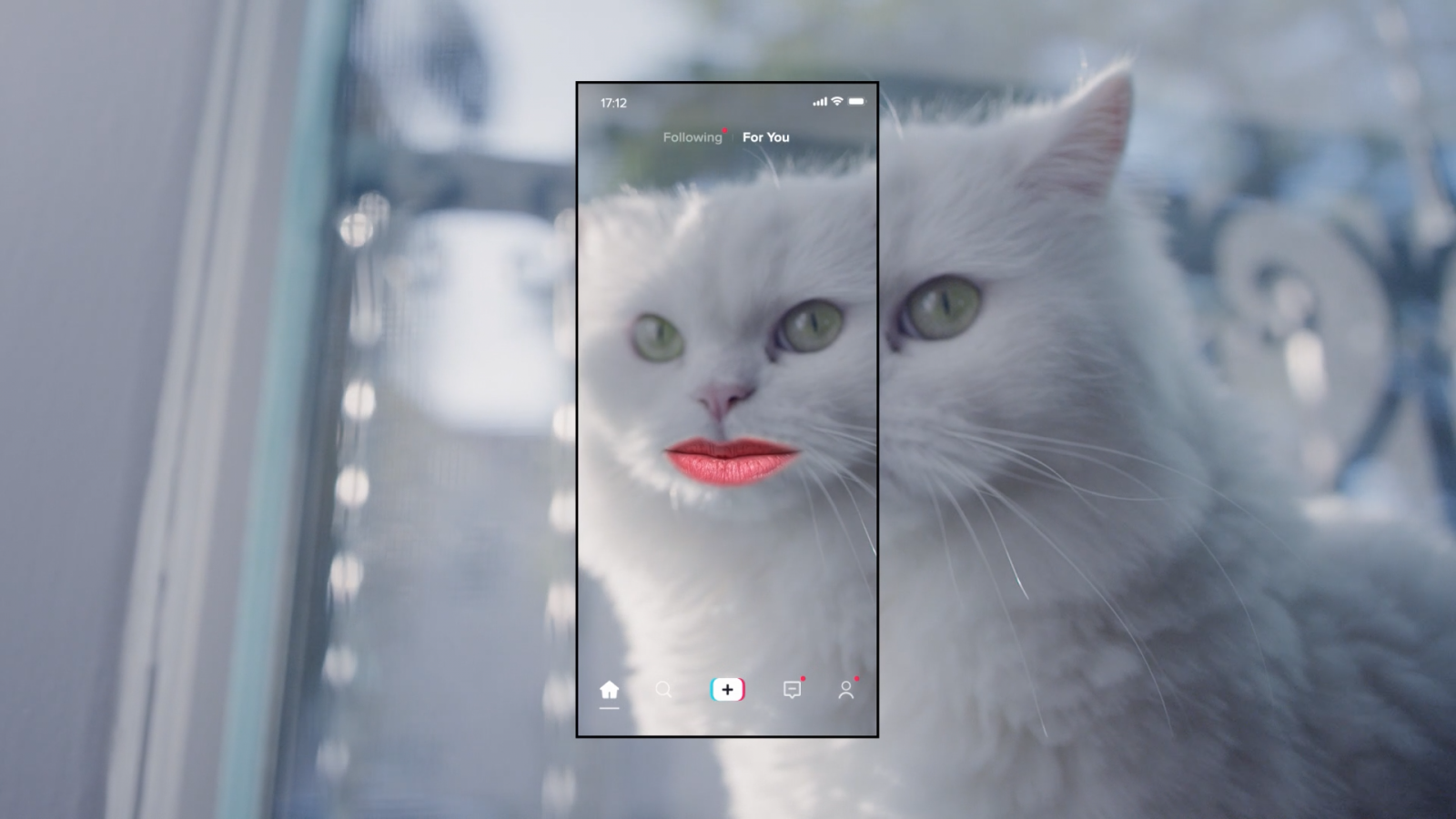

- MOCK-UP

- MODELING

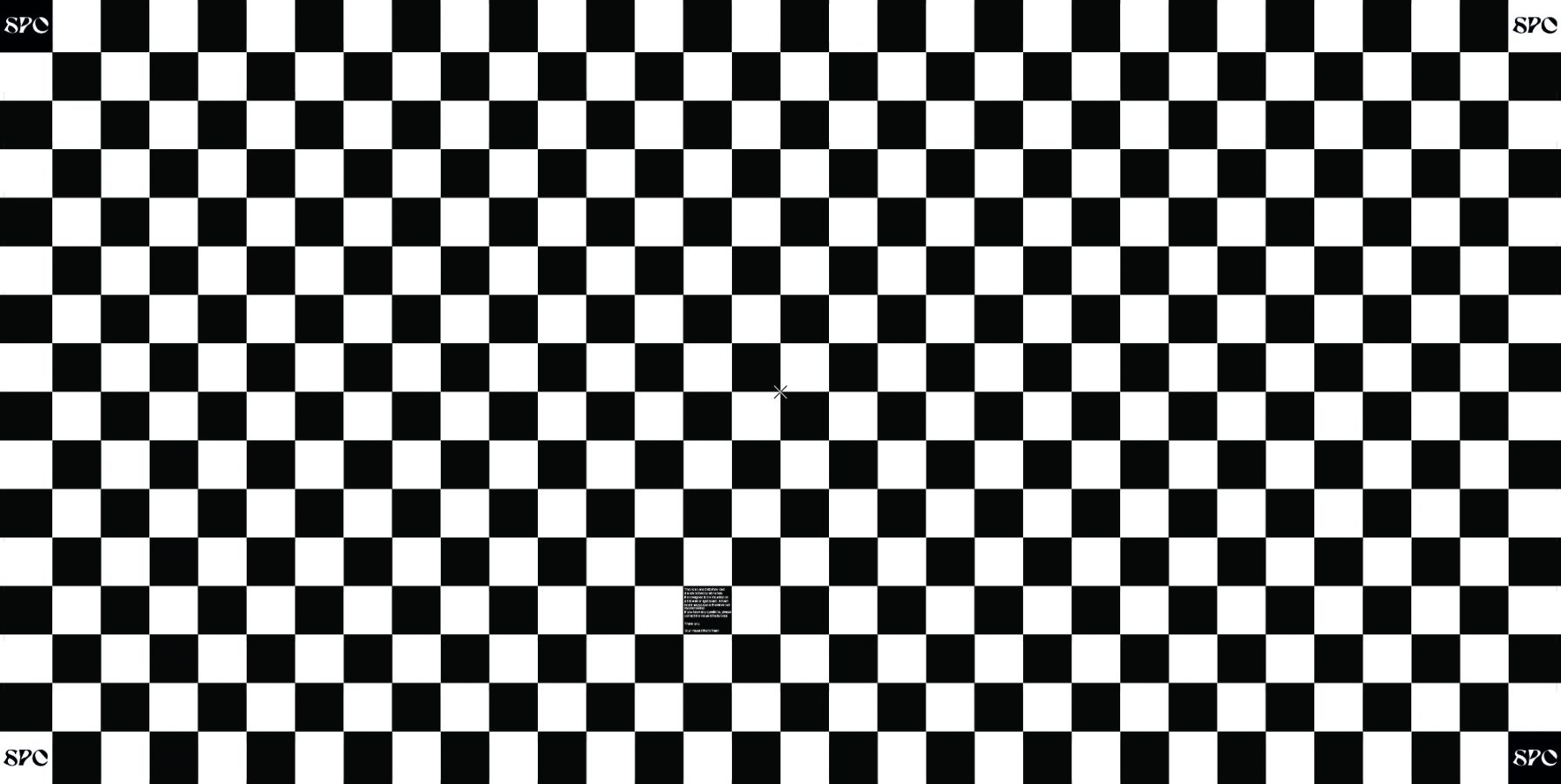

- MOIRÉ EFFECT

- MOOD

- MORPHING

- MOTION BLUR

- MOTION CAPTURE (MOCAP)

- MOTION CONTROL (MOCO)

- PACKSHOT

- PAINTING IN

- PARALLAX

- PHOTOGRAMMETRY

- PICTURE LOCK

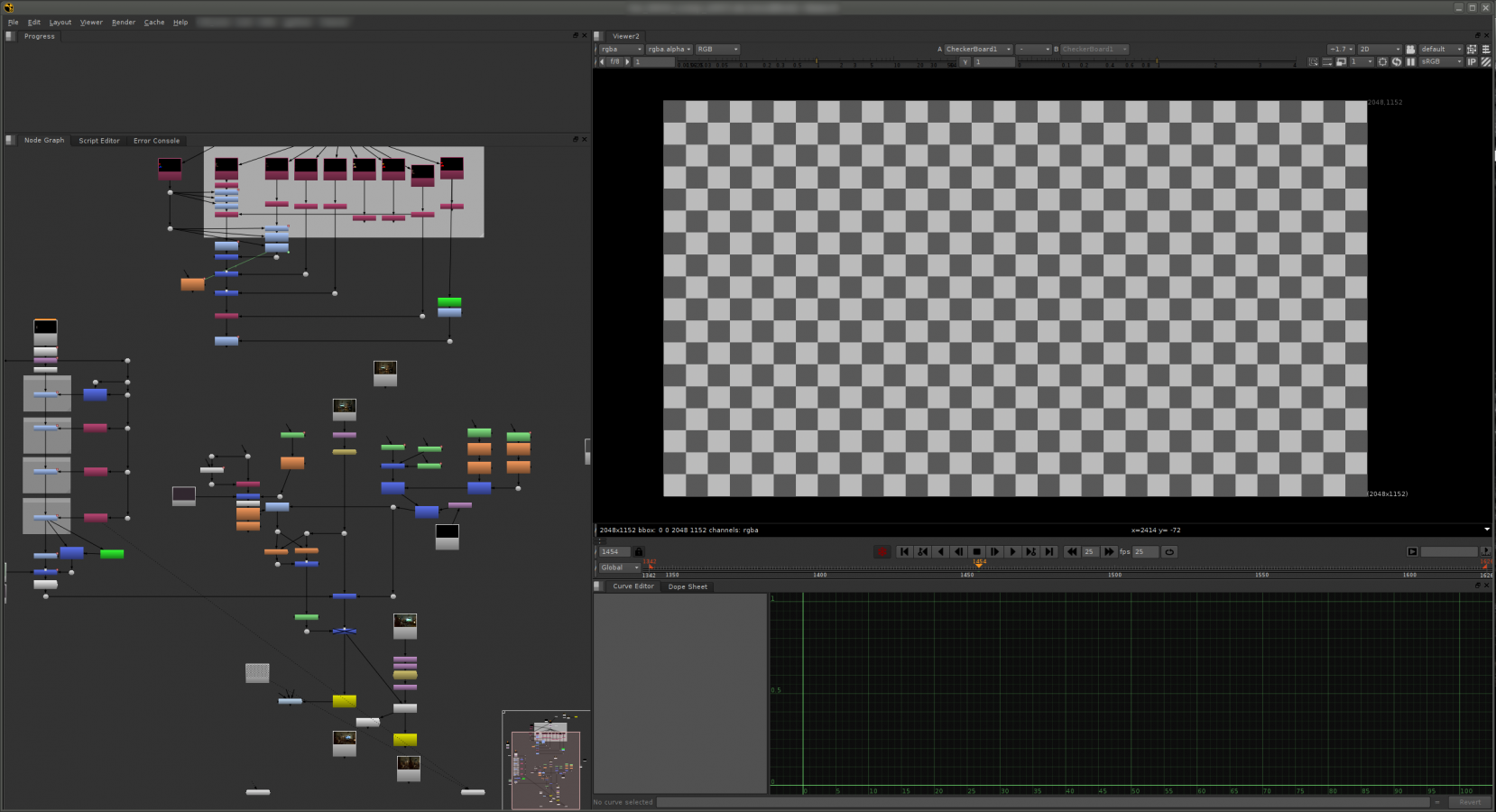

- PIPELINE

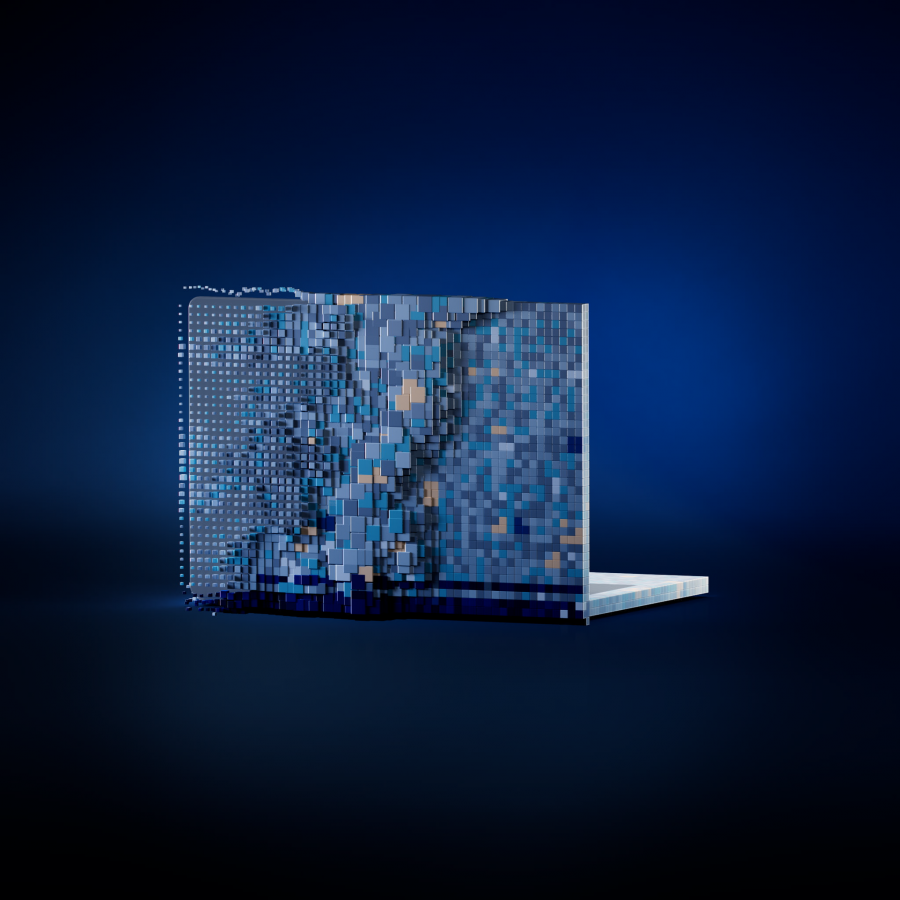

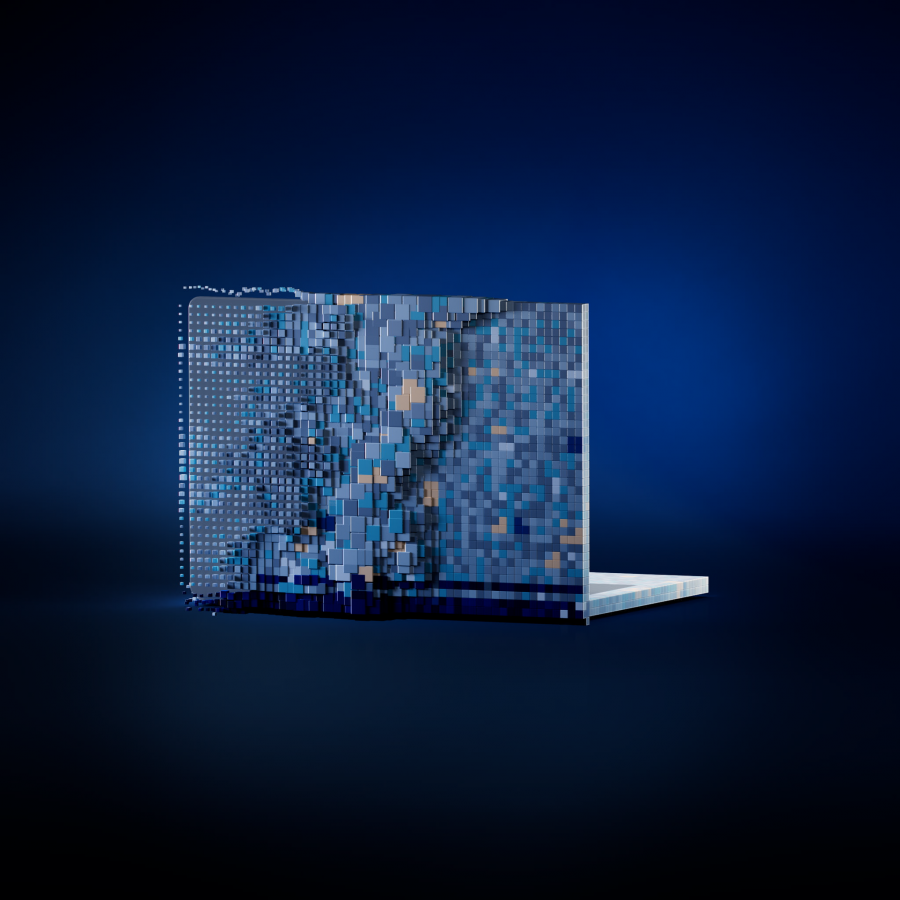

- PIXELATION

- PLATE

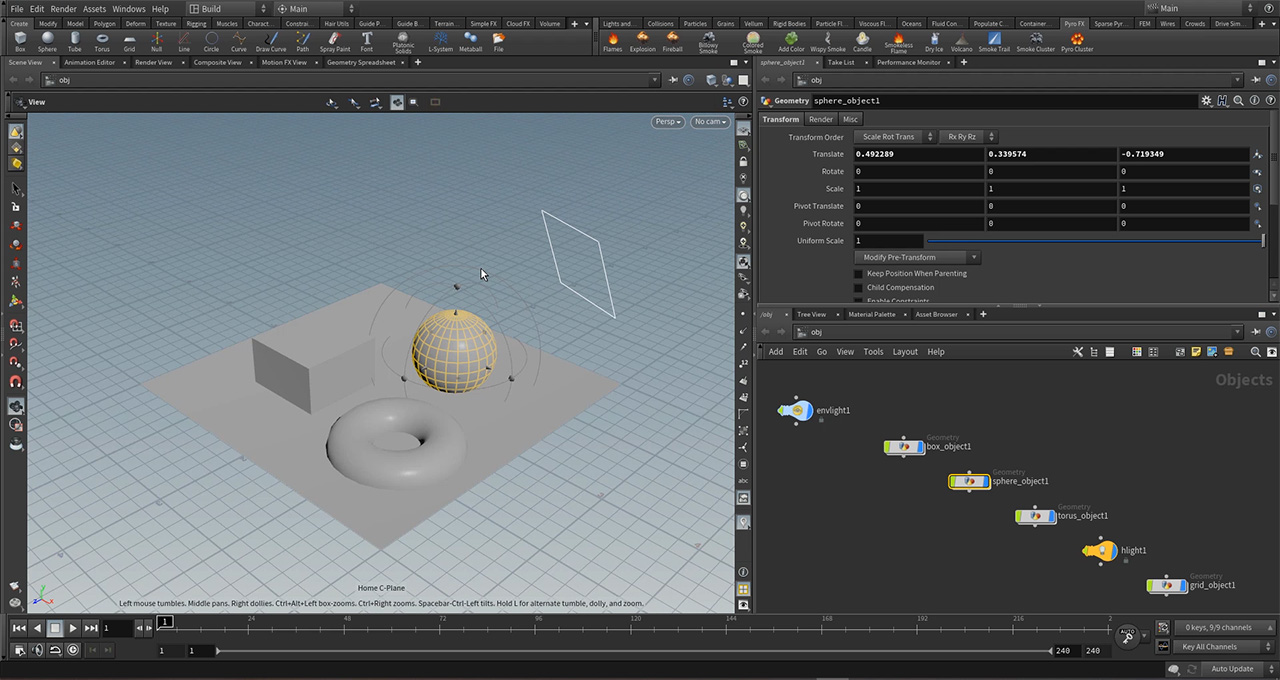

- PLAYBLAST

- PNG

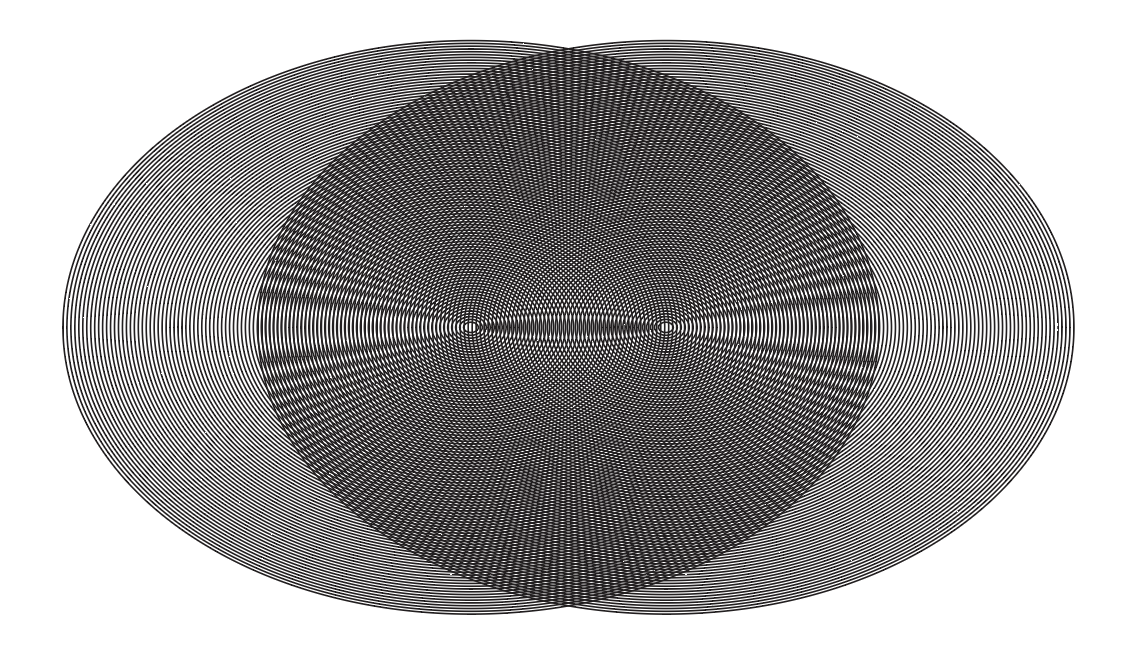

- POINT CLOUD

- POLYGON

- POSTVIS

- PREGRADING

- PREMULTIPLICATION

- PREVIS

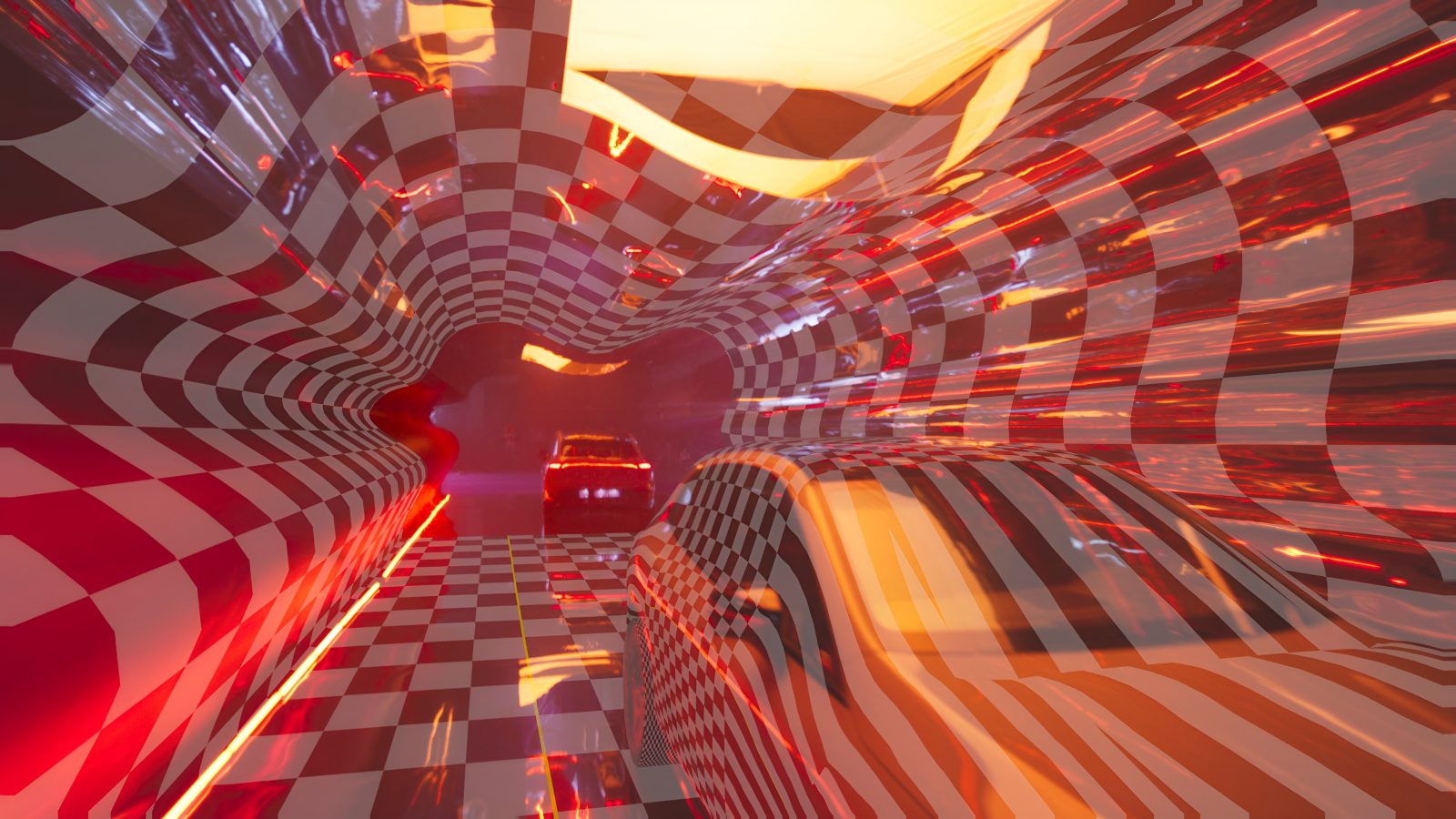

- PROJECTION MAPPING

- PROMPT

- SAMPLES

- SENSOR SIZE

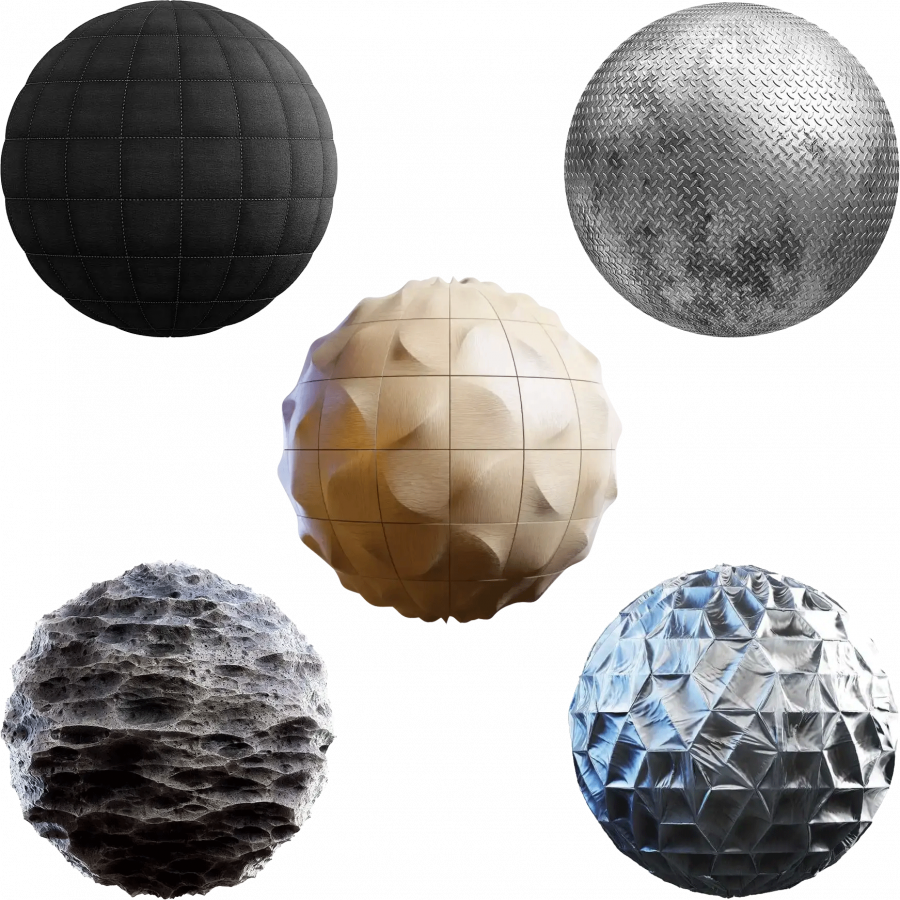

- SHADING

- SHOT-BASED

- SIMULATION

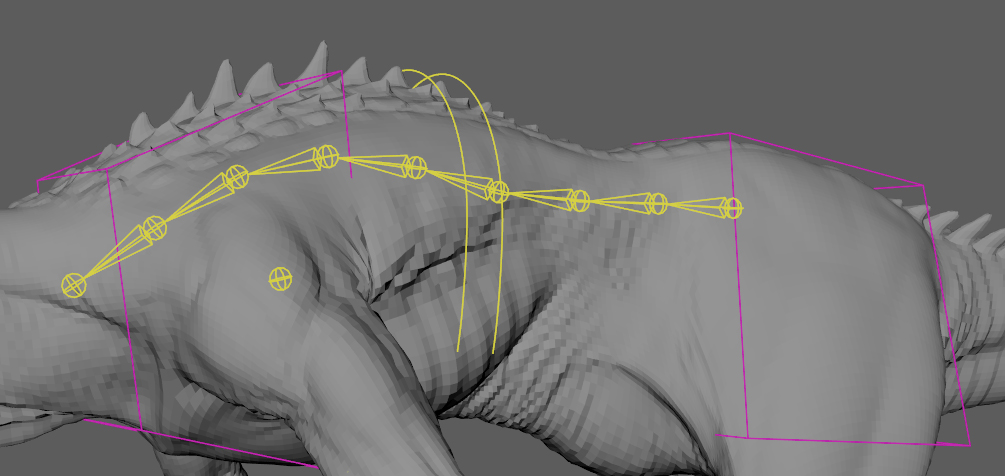

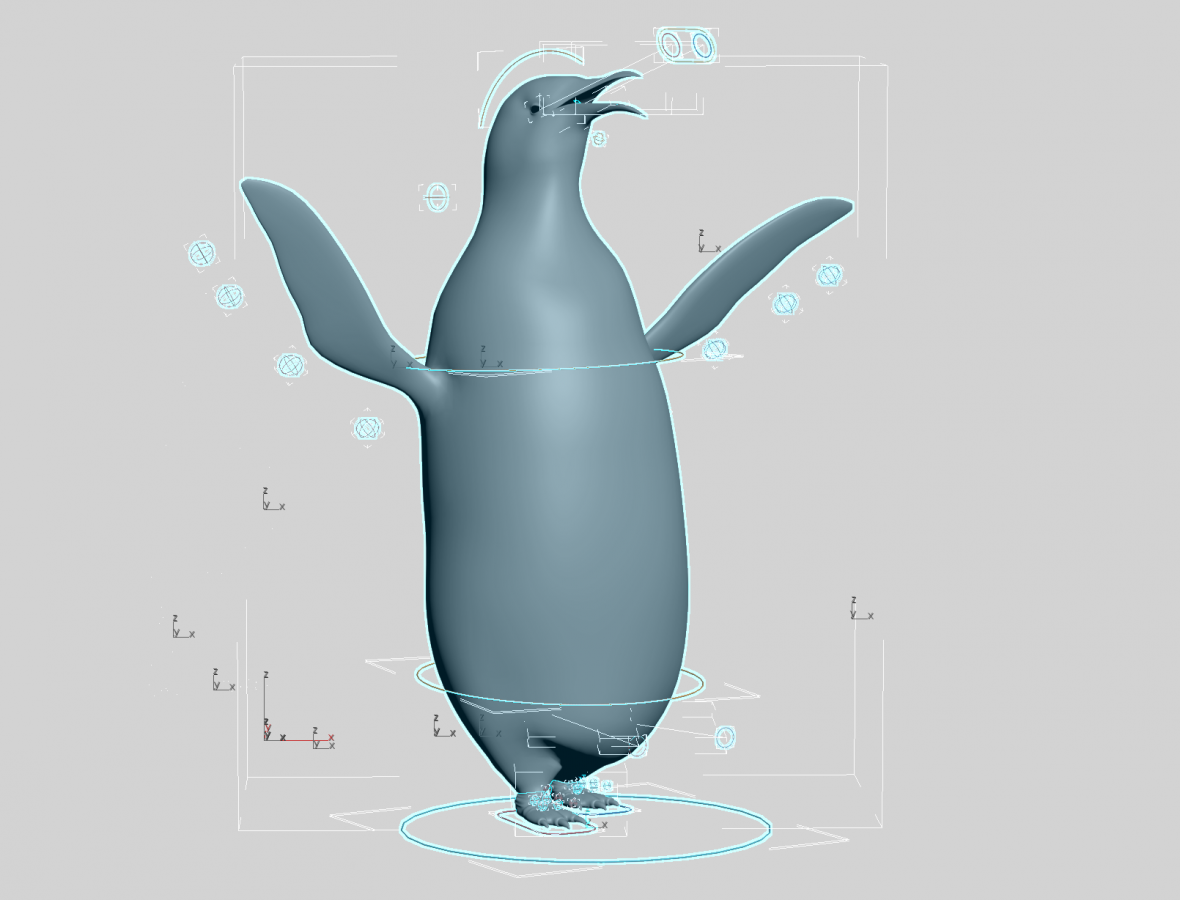

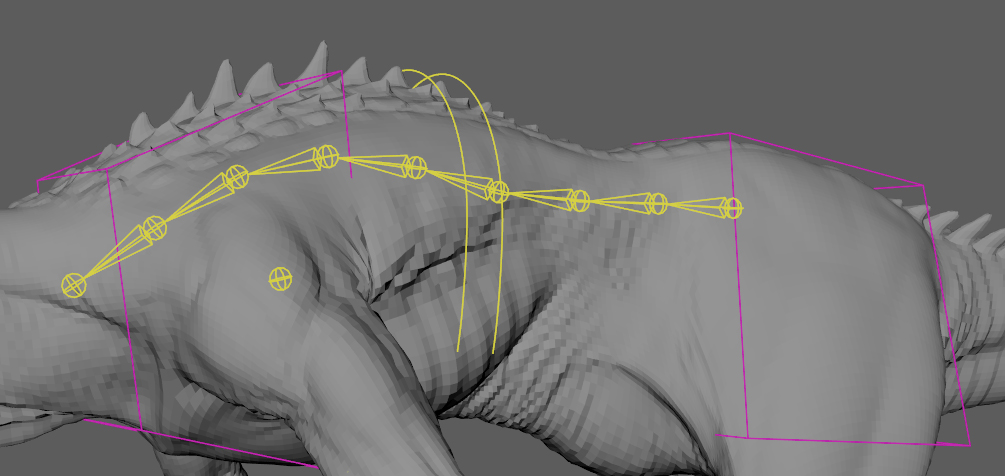

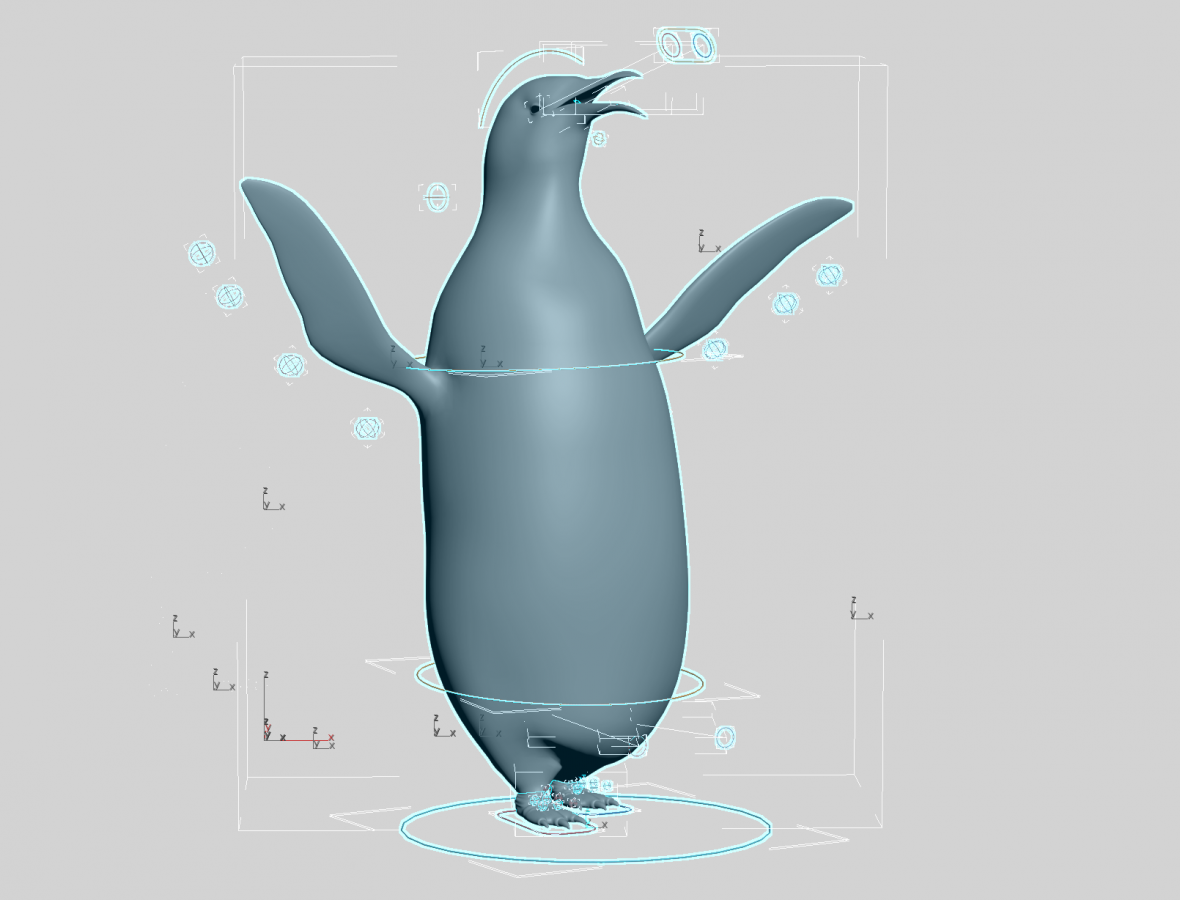

- SKINNING

- SLATE

- SPECIAL EFFECTS (S.F.X.)

- SPEED RAMP

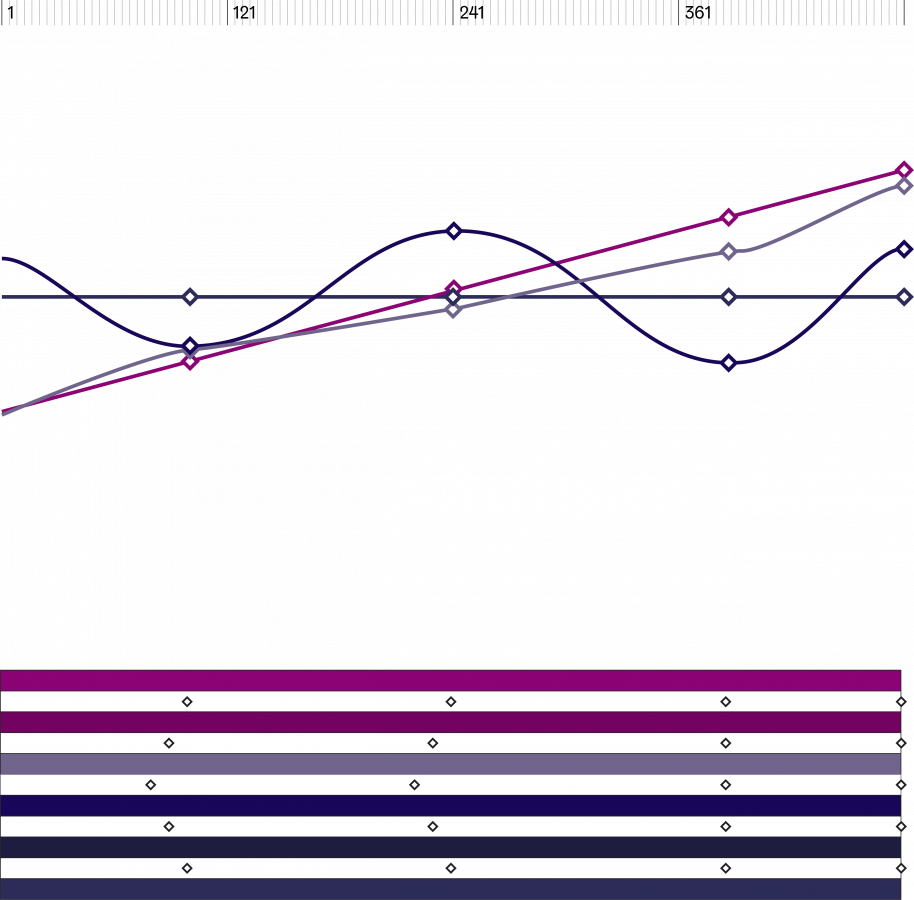

- SPLINES

- SQUEEZING

- STITCHING

- STYLEFRAME

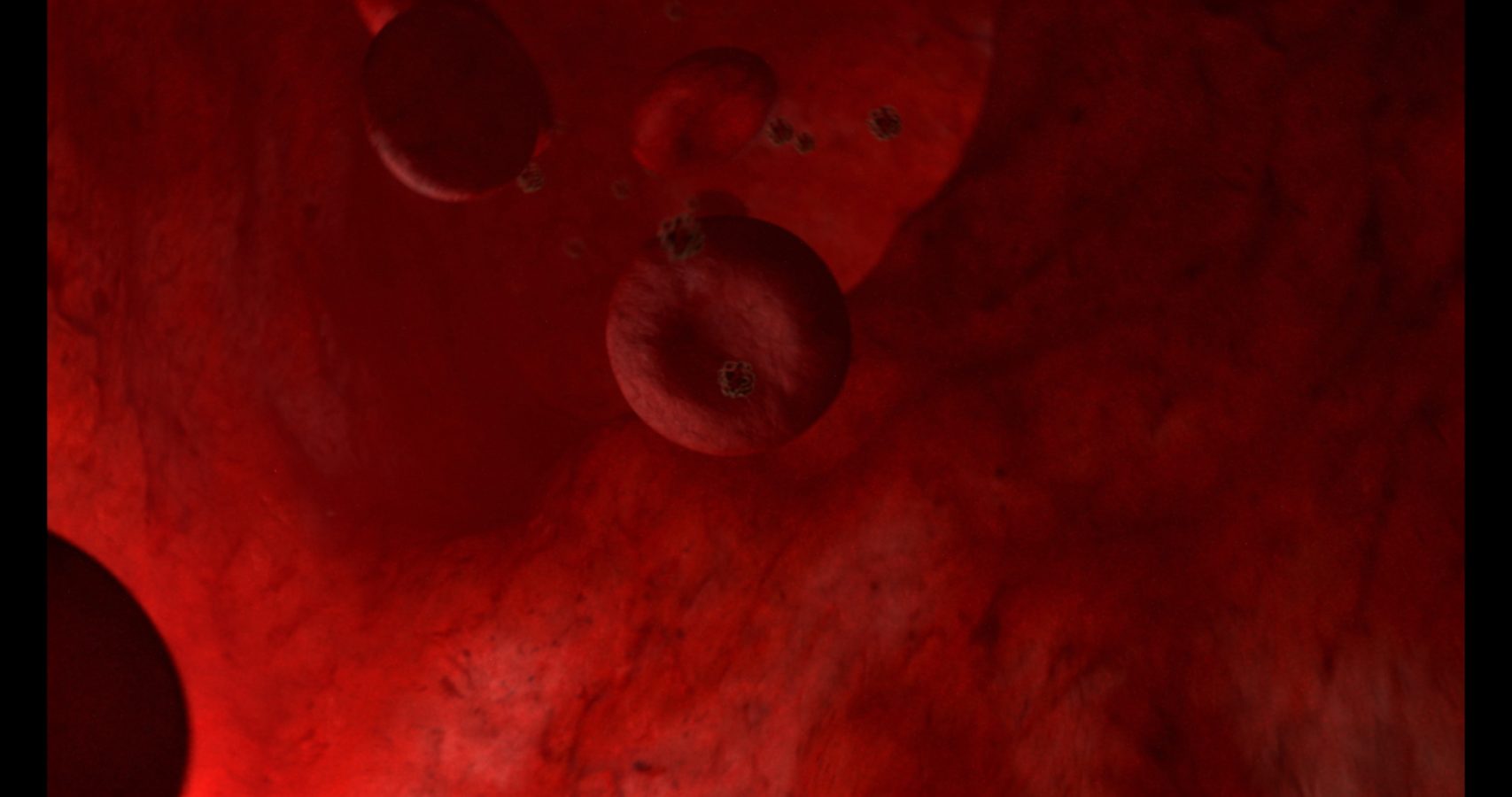

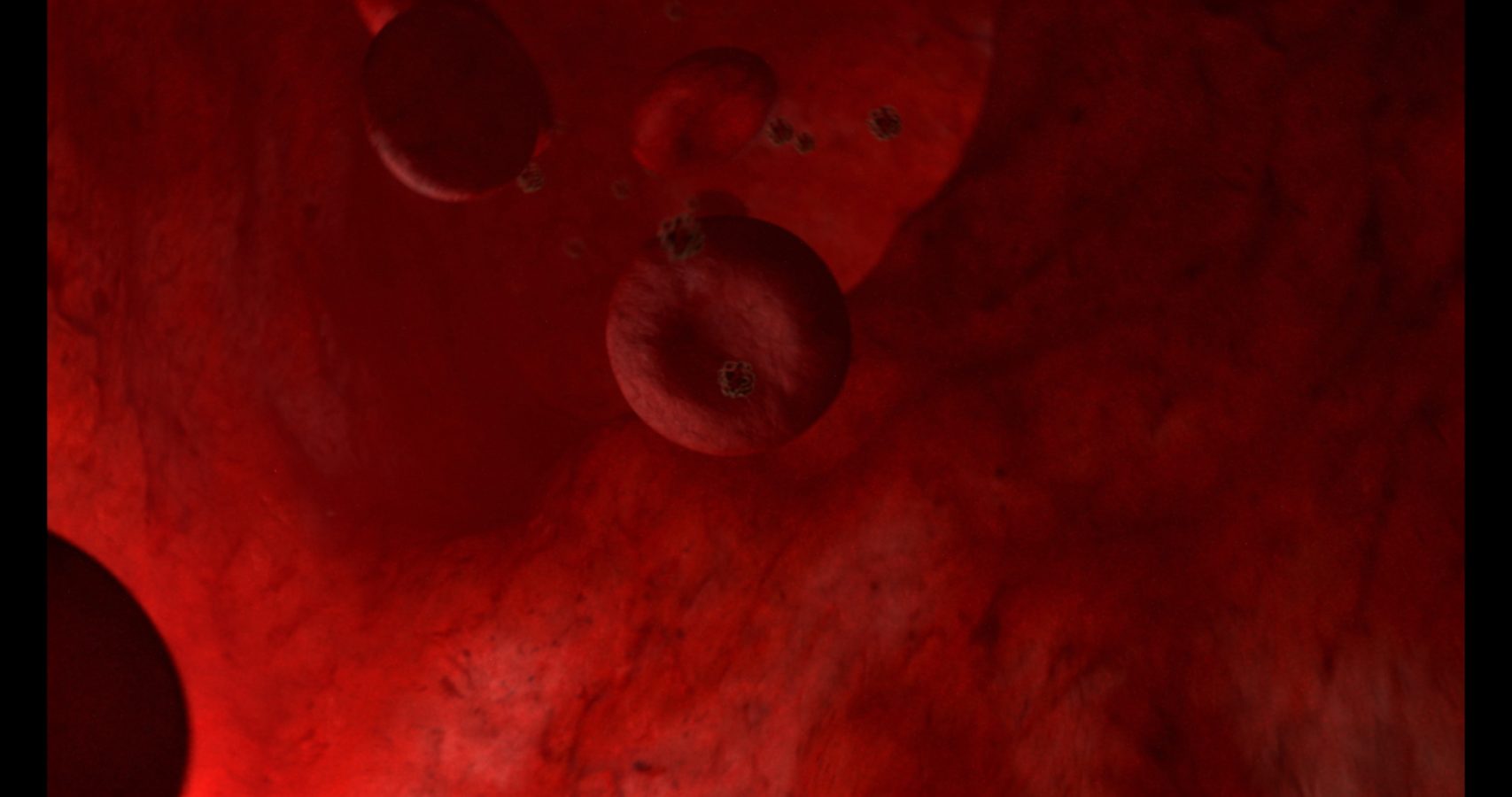

- SUBSURFACE SCATTERING (S.S.S.)

- SUPER


A·O·V

— /eɪ ˈəʊ ˈviː/

AOVs, or Arbitrary Output Variables, are additional image data channels or render passes that are generated during the rendering process to enable more precise control over the final image. AOVs allow artists to separate different elements of a rendered image, such as diffuse color, specular highlights, reflections, or shadows, into distinct channels, which can then be adjusted separately in post-production. This gives greater flexibility and control over the final image and makes compositing and effects work easier.

A·CES

— /ˈeɪsɪz/

"ACES" stands for "Academy Color Encoding System." ACES is a standardized color management system developed by the Academy of Motion Picture Arts and Sciences to ensure consistent and accurate color reproduction throughout the entire production and post-production workflow of a film or television project.

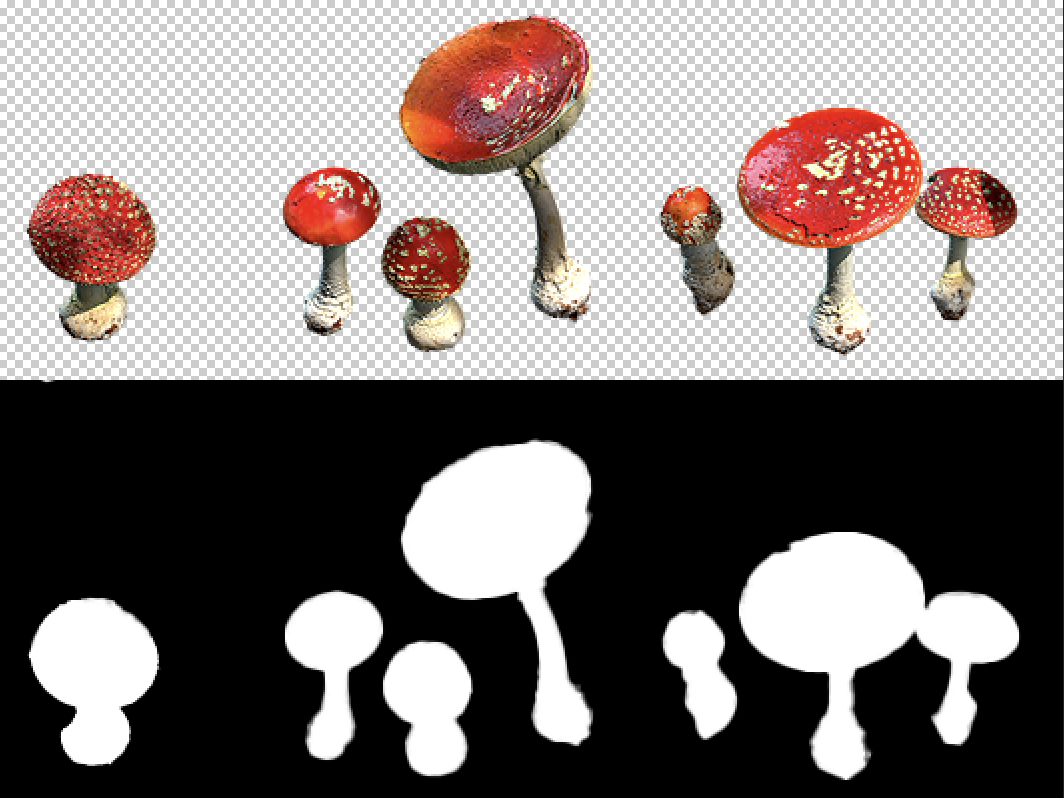

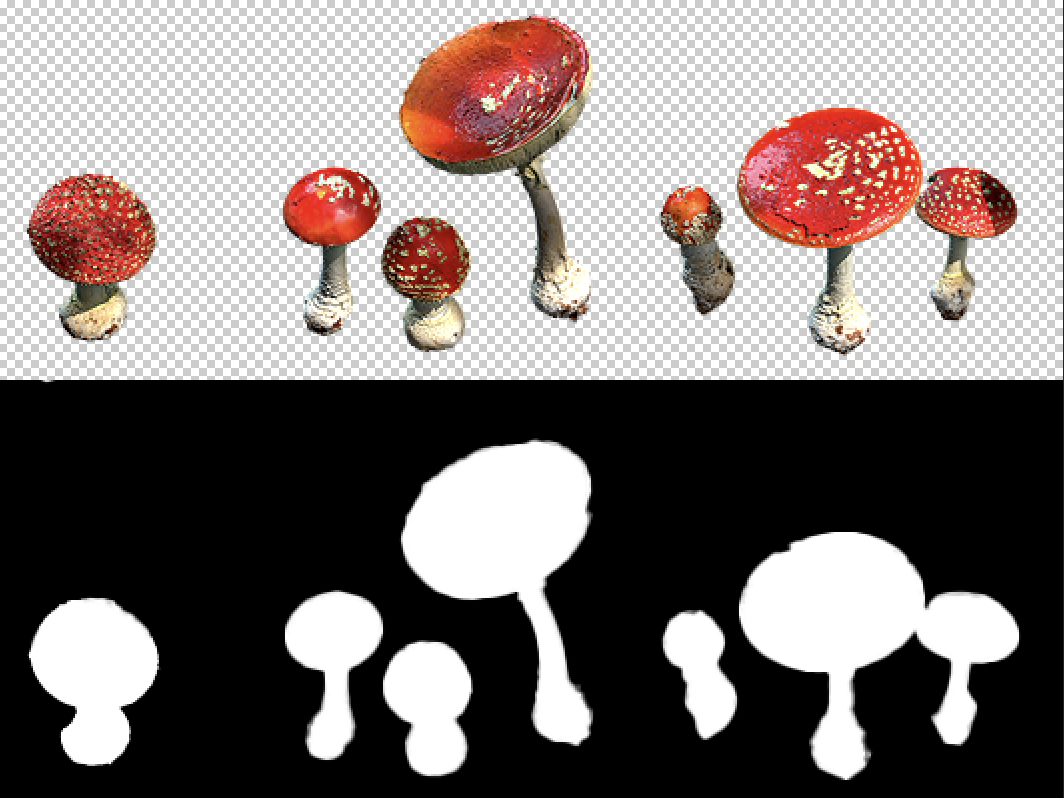

AL·PHA CHAN·NEL

— /ˈalfə ˈtʃanl/

Alpha Channel, or Alpha Matte, is an additional channel of information that accompanies a digital image or video. It stores the transparency or opacity of each pixel. The Alpha Channel can be thought of as a separate grayscale image stored alongside the color channels, with white representing fully opaque areas, black representing fully transparent areas, and shades of gray representing varying levels of transparency. You will often hear this term when somebody asks for an object with a transparent background.

A·NI·MA·TION

— /ˌanɪˈmeɪʃn/

Animation is the technique of bringing a character or 3D model to life. It is done frame by frame, by moving different parts of the character into different positions. 2D animation refers to creating the illusion of motion by displaying a sequence of rapidly changing static images.

AN·TI·CI·PA·TION

— /anˌtɪsɪˈpeɪʃn/

This refers to a technique used in animation and visual effects to create a sense of expectation or preparation before a significant action or movement occurs. For example, in a scene where a character is about to jump, anticipation might involve the character crouching down, bending their knees, and shifting their weight before they spring up into the air.

AP·PRO·VAL

— /əˈpruːvl/

A release that allows you to continue with a specific part of your work. For example, building something in CG is very time-consuming and involves many different steps, so it is much more efficient to create a styleframe first to get an idea of the final look. As soon as the styleframe is approved, everything is built in 3D.

AR·TI·FACT

— /ˈɑːtɪfakt/

An artifact is an undesired element in an image or video that reduces the quality of the visual content and usually needs to be removed. In live-action footage, artifacts can be caused by difficult weather conditions or by dirt or damage on the camera lens, and they can also appear when saving or compressing a file goes wrong. They can also appear during the rendering process. Artifacts can be used for creative purposes too.

AR·TI·FI·CI·AL IN·TEL·LI·GENCE [A.I.]

— /ˌɑːtɪfɪʃl ɪnˈtɛlɪdʒ(ə)ns/

AI refers to the simulation of human intelligence processes by computer systems. In the context of VFX, AI can nowadays be used to generate different kinds of styles, support rotoscoping and clean-up processes, or simplify a variety of production steps. This book was written with the help of AI too.

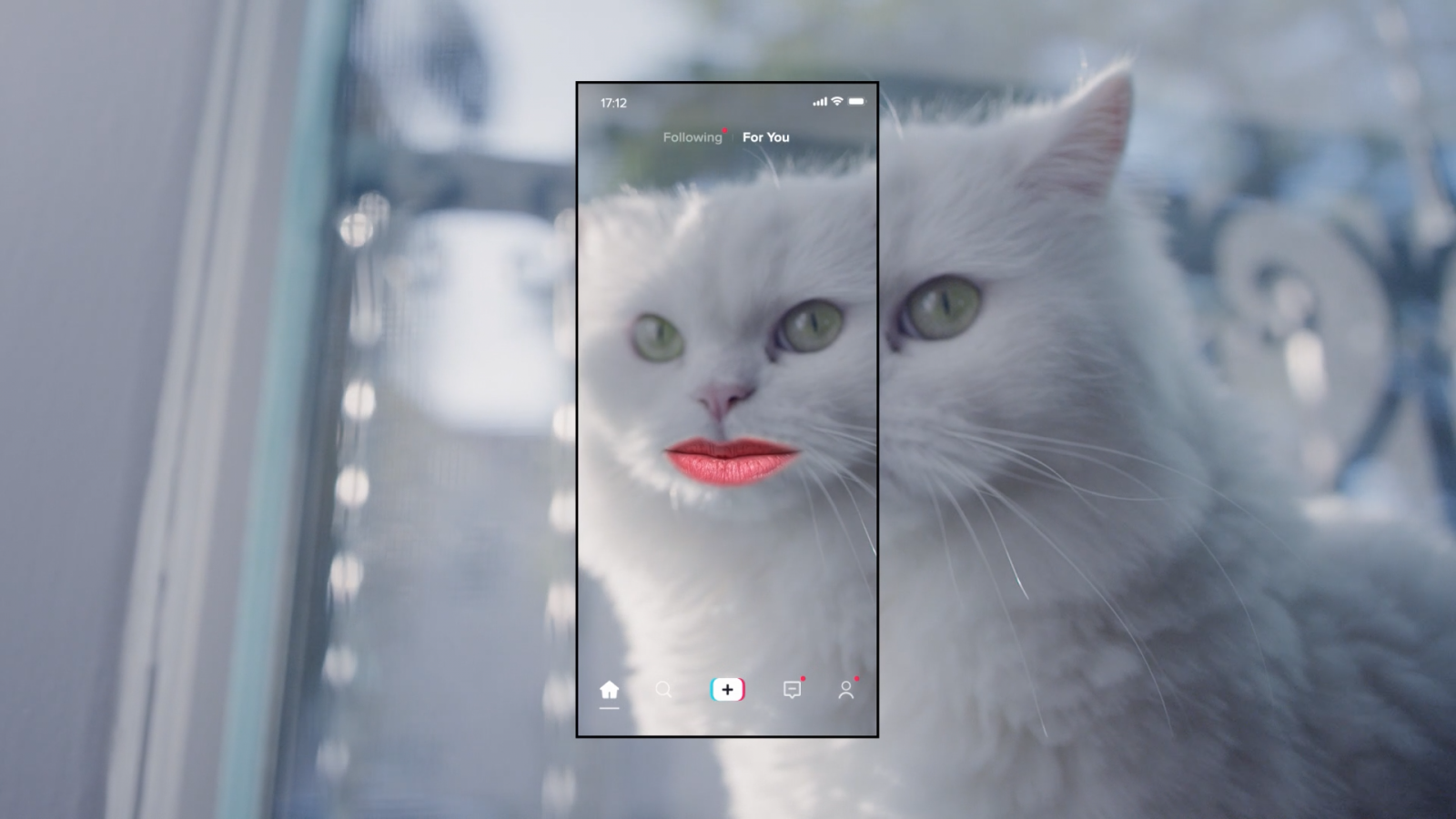

AS·PECT RA·TI·O

— /ˈaspɛkt ˈreɪʃɪəʊ/

The proportional relationship between the width and height of a video or film frame. It is expressed as two numbers separated by a colon, such as 16:9 or 4:3. The first number represents the width of the frame, while the second number represents the height. For example, an aspect ratio of 16:9 means that for every 16 units of width the frame is 9 units high.

Some common aspect ratios used in video and film production include:

- 16:9 (widescreen): This aspect ratio is commonly used in modern television and video production. It is wider than the traditional 4:3 aspect ratio, providing a more immersive viewing experience.

- 4:3 (standard): This aspect ratio was commonly used in older television and film production. It is more square-shaped than the 16:9 aspect ratio, and may be used for a nostalgic or retro effect.

- 2.39:1 (CinemaScope): This aspect ratio is commonly used in feature films to provide a wide, panoramic view. It is wider than the 16:9 aspect ratio, and is often used for epic or visually stunning productions.

Formats that have become common more recently, mainly through social media:

- 9:16 (phone): This is used for Instagram stories, reels and TikTok videos.

- 1:1 (square): Made popular by Instagram and still often used there.

- 4:5 (high rectangle): The maximum height for a post in an Instagram carousel.
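To make the width/height relationship concrete, here is a small Python sketch that computes the frame height for a given width and a ratio written as "W:H". The function names `parse_ratio` and `height_for_width` are our own illustration, not part of any tool.

```python
from fractions import Fraction

def parse_ratio(ratio: str) -> Fraction:
    """Turn a ratio string such as '16:9' or '2.39:1' into width / height."""
    w, h = ratio.split(":")
    # Fraction accepts decimal strings like '2.39', so both notations work.
    return Fraction(w) / Fraction(h)

def height_for_width(width: int, ratio: str) -> int:
    """Frame height in pixels for a given width, rounded to the nearest pixel."""
    return round(width / parse_ratio(ratio))

for r in ["16:9", "4:3", "2.39:1", "9:16", "1:1", "4:5"]:
    print(r, "->", 1920, "x", height_for_width(1920, r))
```

A 1920-pixel-wide 16:9 frame comes out at 1080 pixels high, the familiar Full HD size.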

AS·SET

— /ˈasɛt/

A collective term for all kinds of elements used during a production. It usually refers to 3D models, which could be anything from a plane to a tree or even a box. It can also refer to matte paintings, stock footage, 2D graphics or even brushes.

AUG·MENT·ED RE·AL·I·TY [A.R.]

— /ɔːɡˈmɛntɪd rɪˈalɪti/

AR refers to the integration of digital content into the real world, creating an interactive experience using smartphones, tablets, or other devices equipped with cameras and sensors. AR can be used in VFX to enhance the real-world environment or to create virtual objects that are integrated into real-time applications.

BA·KING

— /ˈbeɪkɪŋ/

This term refers to a process of pre-calculating or pre-rendering certain elements of a scene or animation and then saving the results to a file for later use. Baking is used to optimize rendering times and improve the overall efficiency of the VFX workflow.

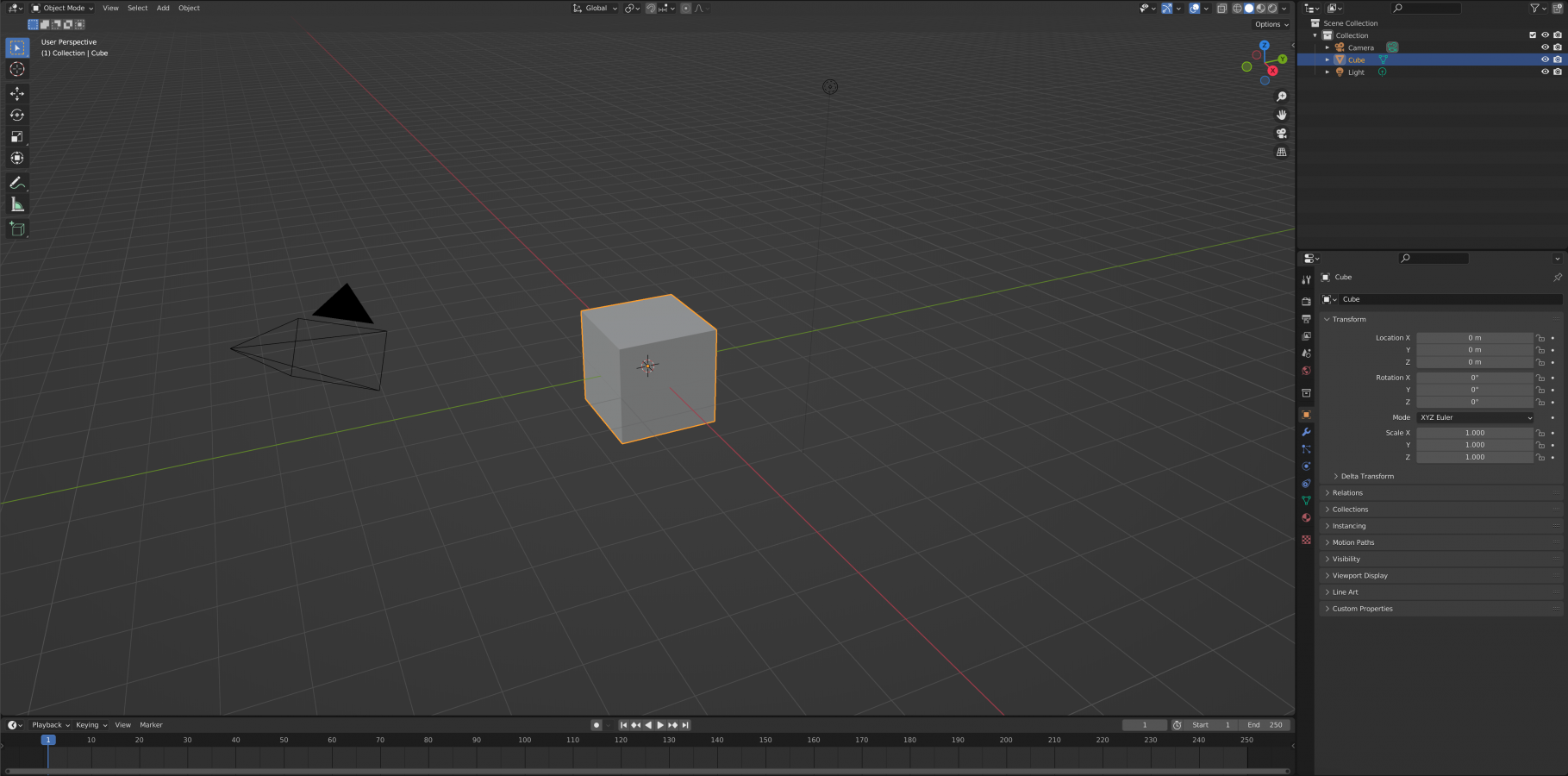

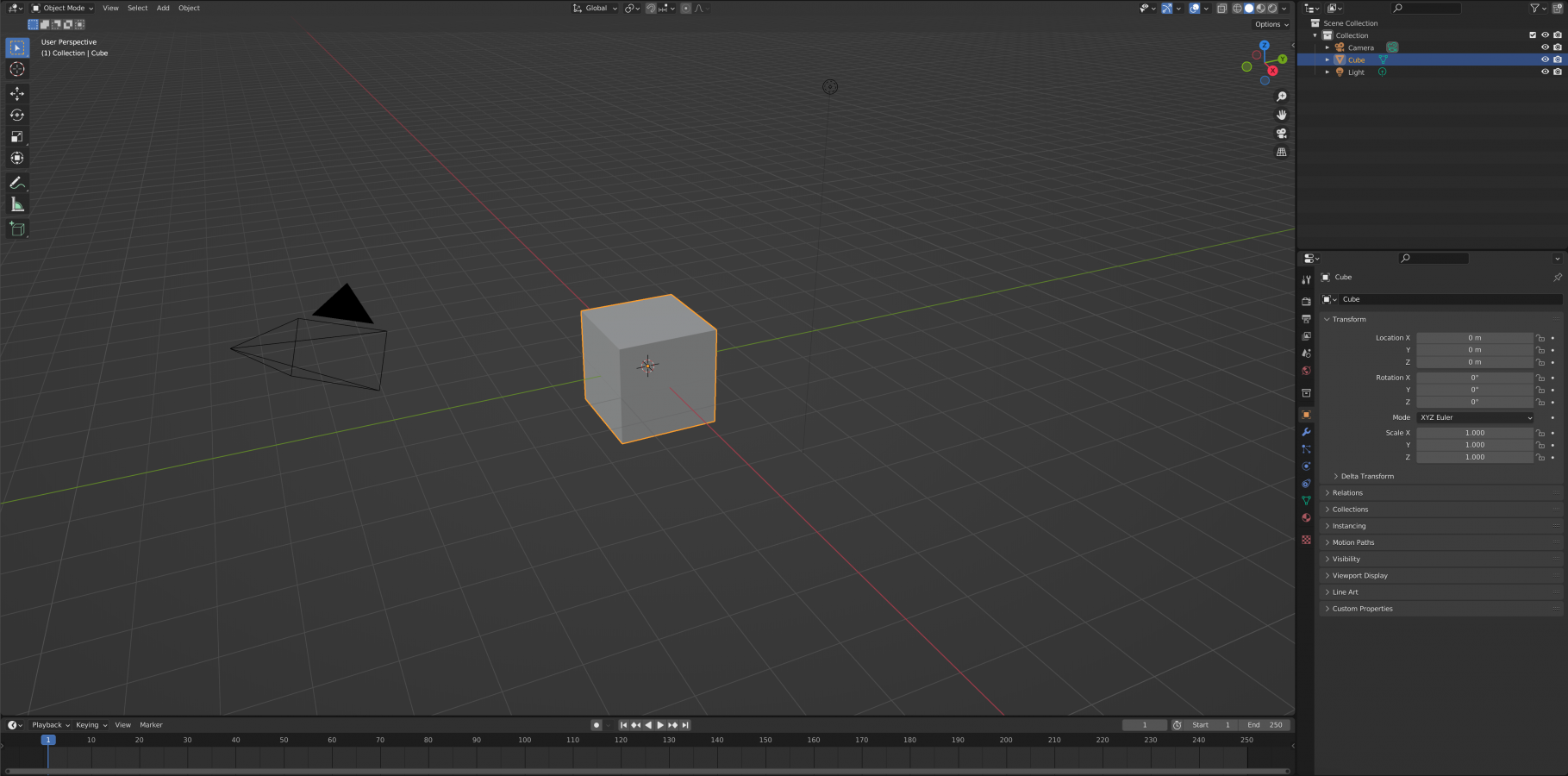

BLEN·DER

— /ˈblɛndə/

Blender is a free and open-source software application from the Blender Foundation that allows you to perform a great variety of creative tasks in one place. In Blender, you can perform 3D modeling, texturing, rigging, particle simulation, animating, match moving, rendering, and more.

BLOCK·ING

— /ˈblɒkɪŋ/

In VFX, blocking refers to the process in which a pre-visualization or rough layout is created to plan and stage the action and camera movements in a scene or sequence. This helps the filmmakers to visualize the final outcome and make necessary adjustments before the actual shoot or animation.

BONES

— /bəʊnz/

In character animation, bones are the digital or virtual joints which are used to pose and control the movement of the 3D or 2D character's parts, such as arms, legs, torso, and head.

C·G·I

— /siː dʒiː aɪ/

Computer-generated imagery. As the name suggests, these are the visual elements of your production that are created on a computer. Often used to refer specifically to 3D Computer Animation or as another term for Visual Effects or VFX. CGI and VFX are not the same though. CGI – and its integration – can be considered part of the VFX process.

CHAT G·P·T

— /tʃat dʒiː piː tiː/

ChatGPT is a conversational AI model developed by OpenAI and based on GPT (Generative Pre-trained Transformer) language models. It allows machines to interact and communicate with humans in natural language. In simpler terms, ChatGPT is a chatbot or virtual assistant that is capable of understanding natural language and responding appropriately. For example, the base of all descriptions in our ABC of VFX was written by ChatGPT.

CHRO·MA·KEY

— /ˈkrəʊməˌkiː/

Chroma key refers to a technique used to separate a part of an image from the rest of the content by using a specific color. This color (usually green or blue) can be replaced by different content.
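The idea can be sketched in a few lines of Python, assuming a green backing and RGB values between 0 and 1. The matte is derived from how much the green channel exceeds the larger of red and blue; this "green minus max(red, blue)" difference is one common, simple approach, while production keyers are far more sophisticated. The function names and the `gain` value are illustrative assumptions, not taken from any keying software.

```python
def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))

def green_screen_alpha(r: float, g: float, b: float, gain: float = 2.0) -> float:
    """Foreground opacity: 1 = keep the pixel, 0 = replace it with the new background."""
    # How strongly the pixel leans towards green compared with red and blue.
    greenness = g - max(r, b)
    return clamp(1.0 - greenness * gain)

def key_onto(fg, bg, gain=2.0):
    """Replace the green parts of fg with bg (both are lists of RGB tuples)."""
    out = []
    for (r, g, b), back in zip(fg, bg):
        a = green_screen_alpha(r, g, b, gain)
        # Blend: keep the foreground where a = 1, show the new background where a = 0.
        out.append(tuple(a * f + (1 - a) * k for f, k in zip((r, g, b), back)))
    return out

pixels = [(0.1, 0.9, 0.1), (0.8, 0.6, 0.5)]       # green backing, skin tone
new_background = [(0.2, 0.3, 0.4), (0.2, 0.3, 0.4)]
print(key_onto(pixels, new_background))
```

This sketch only builds a matte; green light reflected onto the subject (green spill) still has to be handled separately.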

CHROME BALL / GREY BALL

— /krəʊm bɔːl/ — /ɡreɪ bɔːl/

A chrome ball, or mirrored ball, is a perfectly round, highly reflective sphere that mirrors the entire surrounding environment. It is used in visual effects to capture lighting information on set: looking at the chrome ball tells you where the lights are positioned. A grey ball, or neutral reference ball, is a matte grey sphere used as a reference for lighting and color in a scene. After the shot is captured, VFX artists can use the grey ball to make sure that the lighting and color of the CG elements are consistent and accurate. By analyzing how light falls on the grey ball, VFX artists can determine the direction, intensity, and color of the light sources within the scene.

CLAY REN·DER

— /kleɪ ˈrɛndə/

It is a 3D computer graphics term that refers to the rendering of an object or scene without any shading or texturing applied to it. It is called a "clay" render because the object appears as if it were made of clay, with no surface details visible.

CLEAN·UP

— /ˈkliːnʌp/

It is a post-production term that refers to the process of removing unwanted elements from a shot or footage. It includes removing wires, rigging, tracking markers, or other elements that were visible during the shooting process but not intended to be in the final shot.

CO·LOR CHART

— /ˈkʌlə tʃɑːt/

It is a reference tool used in photography and videography to ensure accurate color representation. It consists of a standardized set of colors that are printed on a chart, making it easy for filmmakers and photographers to calibrate their equipment and ensure that the colors they capture are true to life. By comparing the colors in the image to those on the color chart, filmmakers can adjust their settings and ensure that the final product looks as intended.
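One simple use of a chart's neutral grey patch is white balancing. The sketch below assumes linear RGB values and that the patch should come out with equal red, green and blue; it computes per-channel multipliers that make the measured patch neutral while keeping its brightness. The function names and the sample values are hypothetical.

```python
def grey_balance_gains(patch_rgb):
    """Per-channel multipliers that turn a measured grey patch back into neutral grey."""
    target = sum(patch_rgb) / 3.0   # keep the patch's overall brightness
    return tuple(target / c for c in patch_rgb)

def apply_gains(pixel, gains):
    """Multiply each channel of a pixel by its correction gain."""
    return tuple(c * k for c, k in zip(pixel, gains))

# A grey patch that the camera recorded slightly too warm (hypothetical values).
measured_grey = (0.50, 0.45, 0.40)
gains = grey_balance_gains(measured_grey)
print(gains, apply_gains(measured_grey, gains))
```

Applying the same gains to every pixel of the shot removes the color cast the patch revealed; real color charts also allow correcting saturation and hue using the colored patches.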

COM·PO·SIT·ING

— /ˈkɒmpəzɪtɪŋ/

The combination of at least two source images to create a new integrated image. Compositing happens when you put all your different ‘elements’ together – your 3D assets, your backgrounds, your particle effects, and your actual on-set footage. It also makes sure all of the elements are combined in the right way to end up with a harmonious picture.
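The basic building block of stacking one element on top of another is the Porter-Duff "over" operation. Here is a minimal single-pixel sketch, assuming premultiplied RGBA values between 0 and 1 (color already multiplied by alpha); the function name `over` is our own.

```python
def over(fg, bg):
    """Composite premultiplied RGBA pixel fg over bg: out = fg + bg * (1 - fg_alpha)."""
    fa = fg[3]
    return tuple(f + b * (1.0 - fa) for f, b in zip(fg, bg))

background = (0.2, 0.4, 0.8, 1.0)   # opaque sky-blue pixel
half_red = (0.5, 0.0, 0.0, 0.5)     # red at 50 % opacity, premultiplied
print(over(half_red, background))
```

A fully opaque foreground (alpha 1) hides the background completely, and a fully transparent one (alpha 0, color 0) leaves the background unchanged.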

COR·PO·RATE I·DEN·TI·TY [C.I.]

— /ˈkɔːp(ə)rət ʌɪˈdɛntɪti/

The visual representation of a brand or company which includes its logo, color palette, typography, and other design elements that create a distinctive and cohesive image for the company.

CROP·PING

— /ˈkrɒpɪŋ/

Cropping means cutting away certain areas of the frame to fit a specific format. For example, you have to crop when you have a wide rectangular image and need to make it square.
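A typical case is a centered crop: keep the largest area with the target aspect ratio and trim equally from both sides. A minimal sketch with integer pixel sizes; `center_crop_box` is a hypothetical helper, and real reframing often shifts the crop off-center to keep the subject in view.

```python
def center_crop_box(width, height, target_w, target_h):
    """Return (x, y, w, h) of the largest centred crop with aspect target_w:target_h."""
    if width * target_h > height * target_w:
        # Source is too wide: keep the full height, trim left and right.
        new_w = height * target_w // target_h
        return ((width - new_w) // 2, 0, new_w, height)
    # Source is too tall (or already matching): keep the full width, trim top and bottom.
    new_h = width * target_h // target_w
    return (0, (height - new_h) // 2, width, new_h)

print(center_crop_box(1920, 1080, 1, 1))   # 16:9 frame cut down to a square
print(center_crop_box(1080, 1920, 4, 5))   # 9:16 frame cut down to 4:5
```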

CUT·DOWN

— /ˈkʌtˌdaʊn/

A shorter version of a longer video or film that is created by cutting out the unnecessary or less important content to make it more concise and effective for a specific purpose, such as advertising or social media.

DAI·LIES

— /ˈdeɪliz/

This term refers to the state of a shot at the end of a day. VFX artists render the current version so that production can make sure everything is on track. Dailies can be seen as a work-in-progress version of a task at the end of a working day.

DA·TA·MOSH·ING

— /ˈdeɪtə'mɒʃɪŋ/

An intentional glitch effect created by manipulating compressed video data, for example by removing keyframes so that motion information is applied to the wrong images. It usually results in distorted pixels and colours that create unique and striking visual effects.

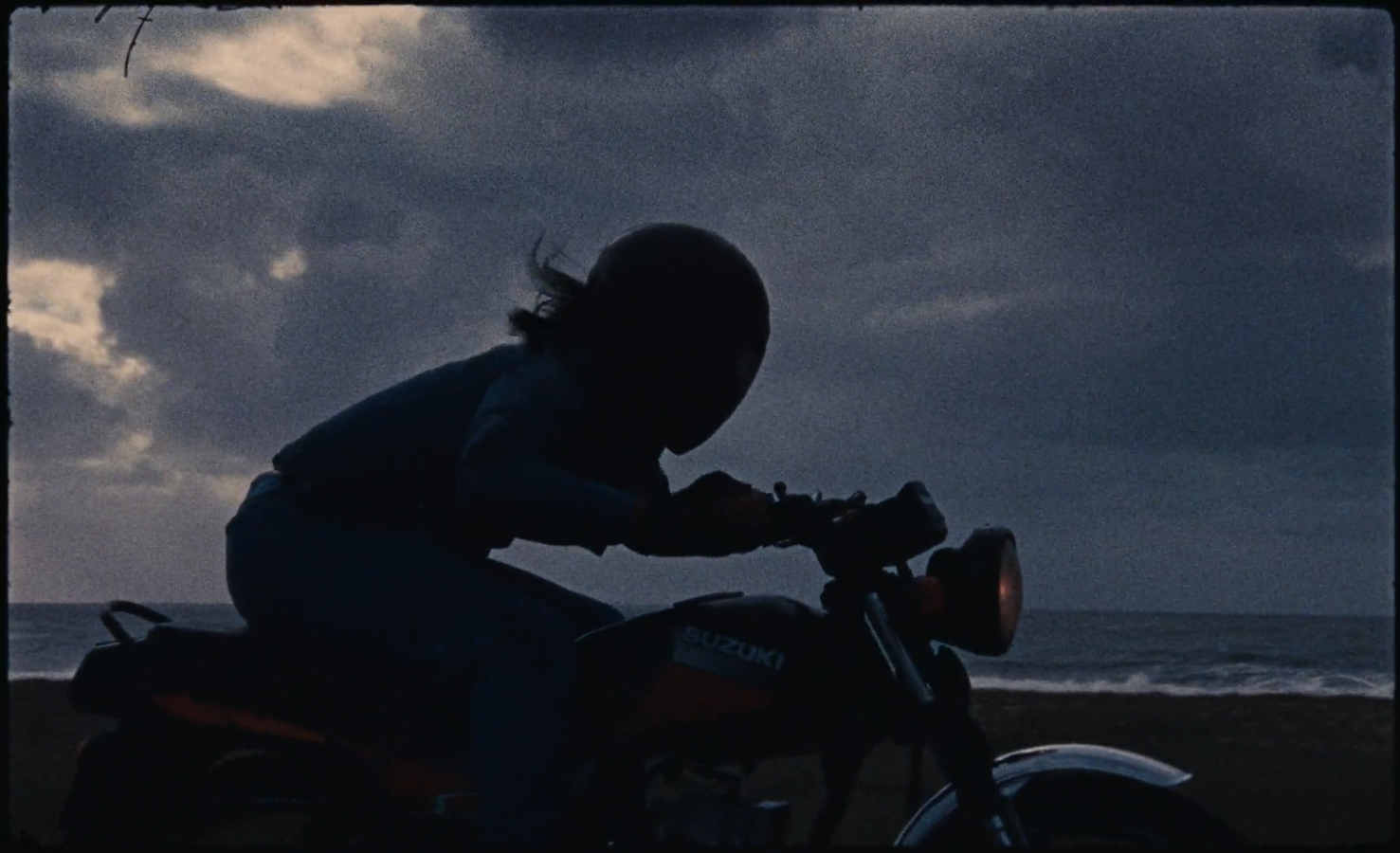

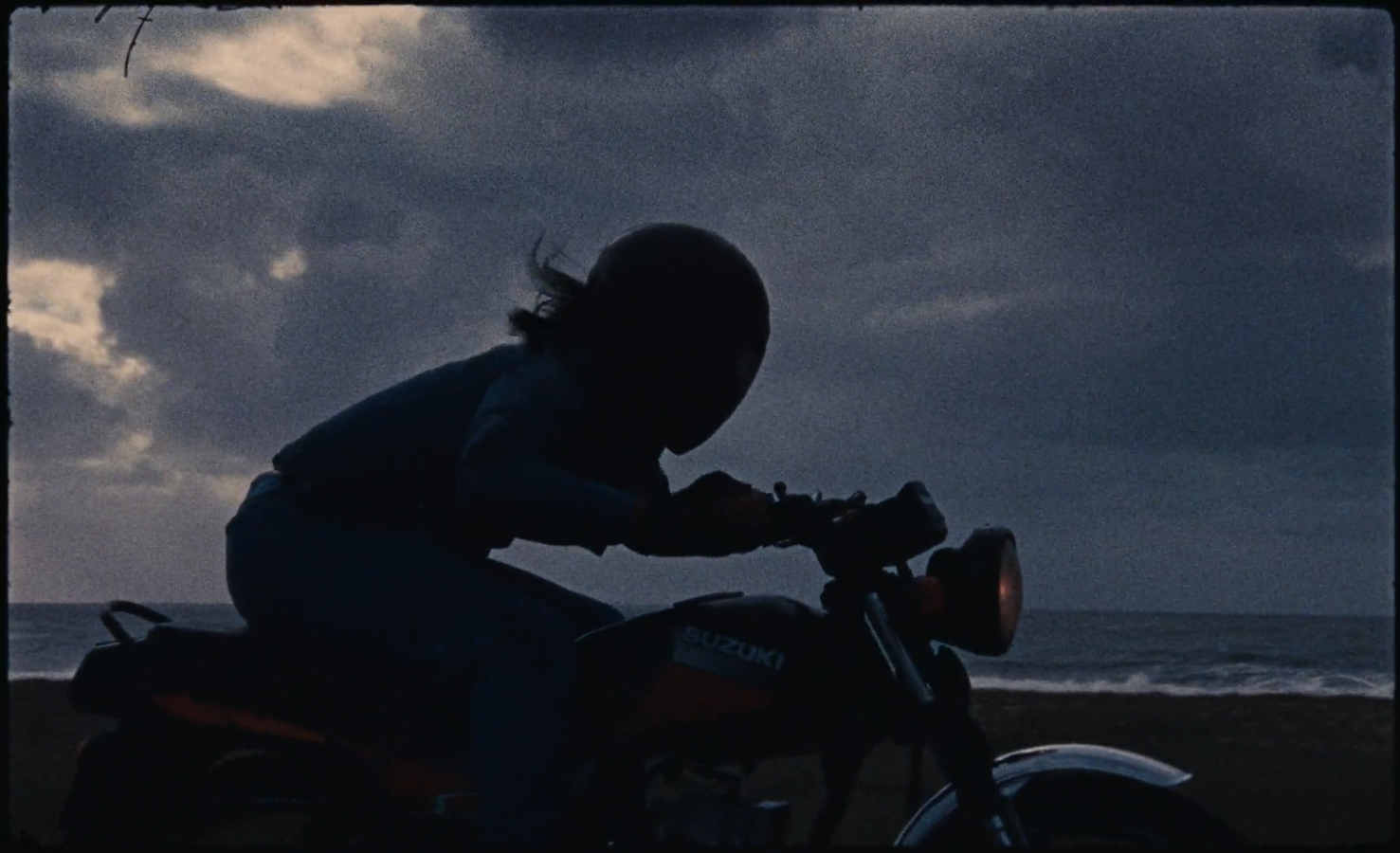

DAY FOR NIGHT

— /deɪ fɚ nʌɪt/

This is a grading technique in which footage shot during the day is manipulated in post-production to appear as if it had been filmed at night. This is often done to save time and costs, or to achieve a specific look or effect.

DEEP COM·PO·SIT·ING

— /diːp ˈkɒmpəzɪtɪŋ/

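This refers to a technique in which each pixel of a rendered image stores several samples at different depths instead of a single color and alpha value. This makes it possible to combine elements correctly in depth, for example placing a character inside volumetric smoke, without rendering separate holdout mattes. It allows more control than traditional compositing but produces a much larger amount of data.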

DE·FOR·MER

— /dɪˈfɔːmə/

This is a tool or effect that can be used in animation and modelling to manipulate the shape or geometry of an object or character.

DE·LI·VE·RY

— /dɪˈlɪv(ə)ri/

This term refers to the final output of a project or sequence, often requiring specific formats or standards for distribution or exhibition.

DIR·TY 3·D

— /ˈdəːti θriː'diː/

This refers to 3D animation or modelling techniques that use low-quality or unconventional methods in order to create a specific style or look.

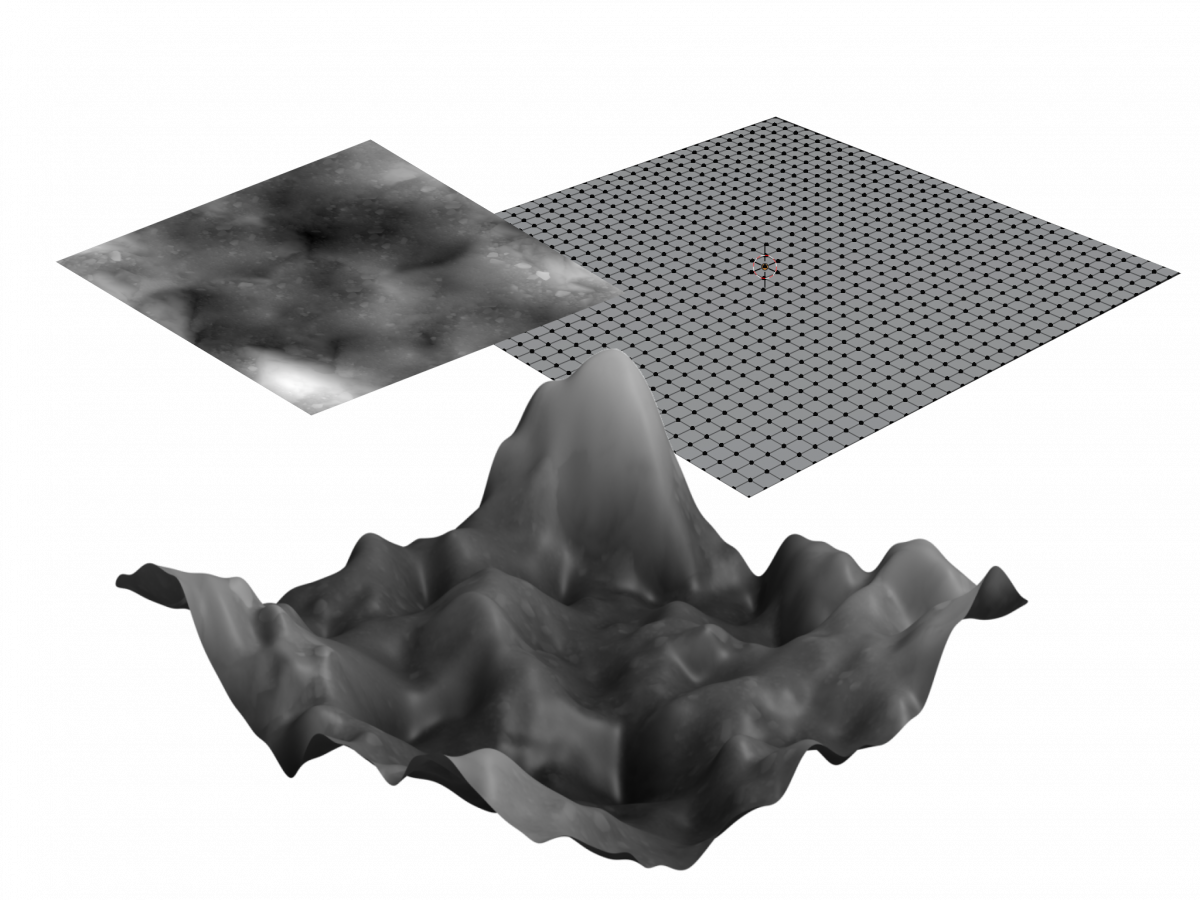

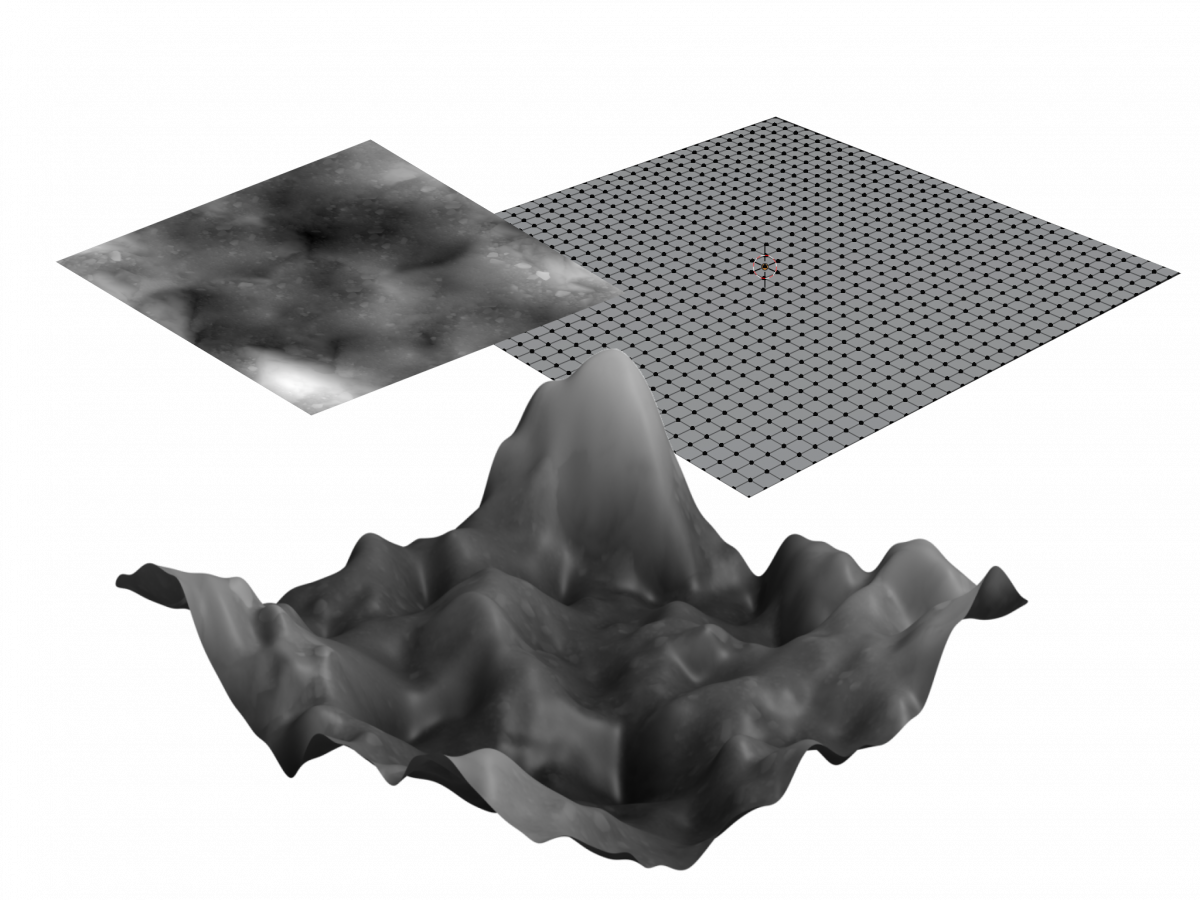

DIS·PLACE·MENT

— /dɪsˈpleɪsm(ə)nt/

Displacement allows artists to create realistic and detailed surface textures, as well as simulate natural phenomena such as waves or ripples. This technique is used to deform the geometry of 3D models, and works by using a grayscale image (known as a "displacement map") to modify the surface of a 3D model. The brightness values in the displacement map correspond to the amount of displacement applied to the model, with lighter areas being displaced more than darker areas.
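In code, displacement boils down to moving each point of the surface along its normal by an amount read from the map. The sketch below assumes map values between 0 (black) and 1 (white) already sampled at each vertex, and a `midpoint` parameter that decides which grey value means "no displacement" (many renderers offer a similar setting). Names and values are illustrative.

```python
def displace(vertices, normals, heights, scale=1.0, midpoint=0.0):
    """Move each vertex along its normal by (height - midpoint) * scale.

    Brighter map values push the surface further out than darker ones.
    """
    out = []
    for (x, y, z), (nx, ny, nz), h in zip(vertices, normals, heights):
        d = (h - midpoint) * scale
        out.append((x + nx * d, y + ny * d, z + nz * d))
    return out

# A flat 2 x 2 patch facing +Z, with a hypothetical map value per corner.
verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
norms = [(0, 0, 1)] * 4
print(displace(verts, norms, [0.0, 0.25, 0.5, 1.0], scale=2.0))
```

Because real geometry moves, a displaced mesh needs enough polygons to show the detail, unlike a normal map, which only changes how light hits the surface.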

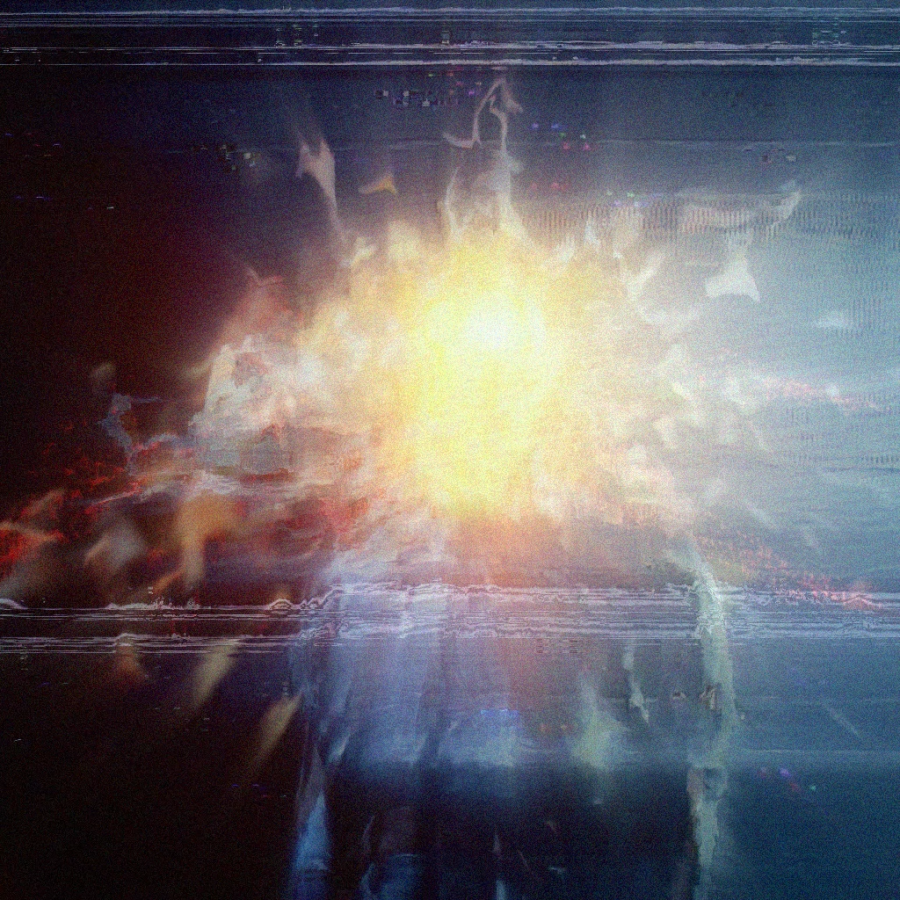

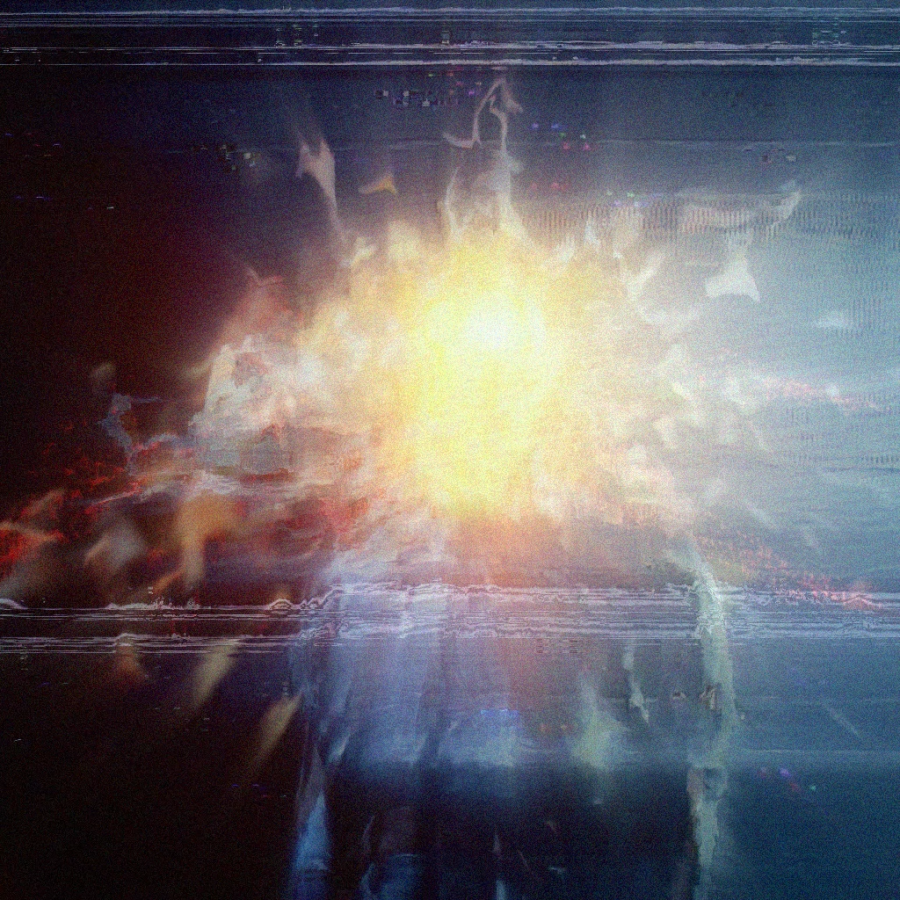

DY·NA·MIC F·X

— /dʌɪˈnamɪk ˌɛf'ɛks/

Dynamic FX is a broad category of VFX that encompasses a wide range of techniques and tools used to create realistic and visually striking simulations of physical phenomena, such as fire, smoke, water, and explosions.

DY·NA·MIC RANGE

— /dʌɪˈnamɪk reɪn(d)ʒ/

The range of tones or luminance levels from the darkest to the brightest parts of an image or footage, determining the level of detail and depth of the visual information.
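Dynamic range is usually expressed in stops, where each stop is a doubling of light. A minimal sketch, assuming linear luminance values and a darkest usable value above zero (for a camera, roughly its noise floor):

```python
import math

def dynamic_range_stops(darkest: float, brightest: float) -> float:
    """Dynamic range in photographic stops: log2 of brightest / darkest."""
    if darkest <= 0:
        raise ValueError("darkest value must be above zero (e.g. the noise floor)")
    return math.log2(brightest / darkest)

# A ratio of 1024:1 between brightest and darkest is 10 stops.
print(dynamic_range_stops(0.5, 512.0))
```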

E·T·A

— /iː tiː eɪ/

ETA stands for "Estimated Time of Arrival." It indicates the predicted time when someone or something will arrive at a specific location or destination. ETA is used in various contexts, for example when someone asks when they can expect a file to be delivered.

ED·IT DE·CI·SION LIST [E.D.L.]

— /ˈɛdɪt dɪˈsɪʒn lɪst/

A list or file that contains information about the sequence, timing, and details of individual shots used in an edited video, which is used by the post-production team to conform the final timeline accurately.

END OF BU·SI·NESS [E.O.B.]

— /ɛnd ɒv ˈbɪznɪs/

A term used to denote the end of a working day or business hours in a company or organization. Usually between 6 PM and 8 PM.

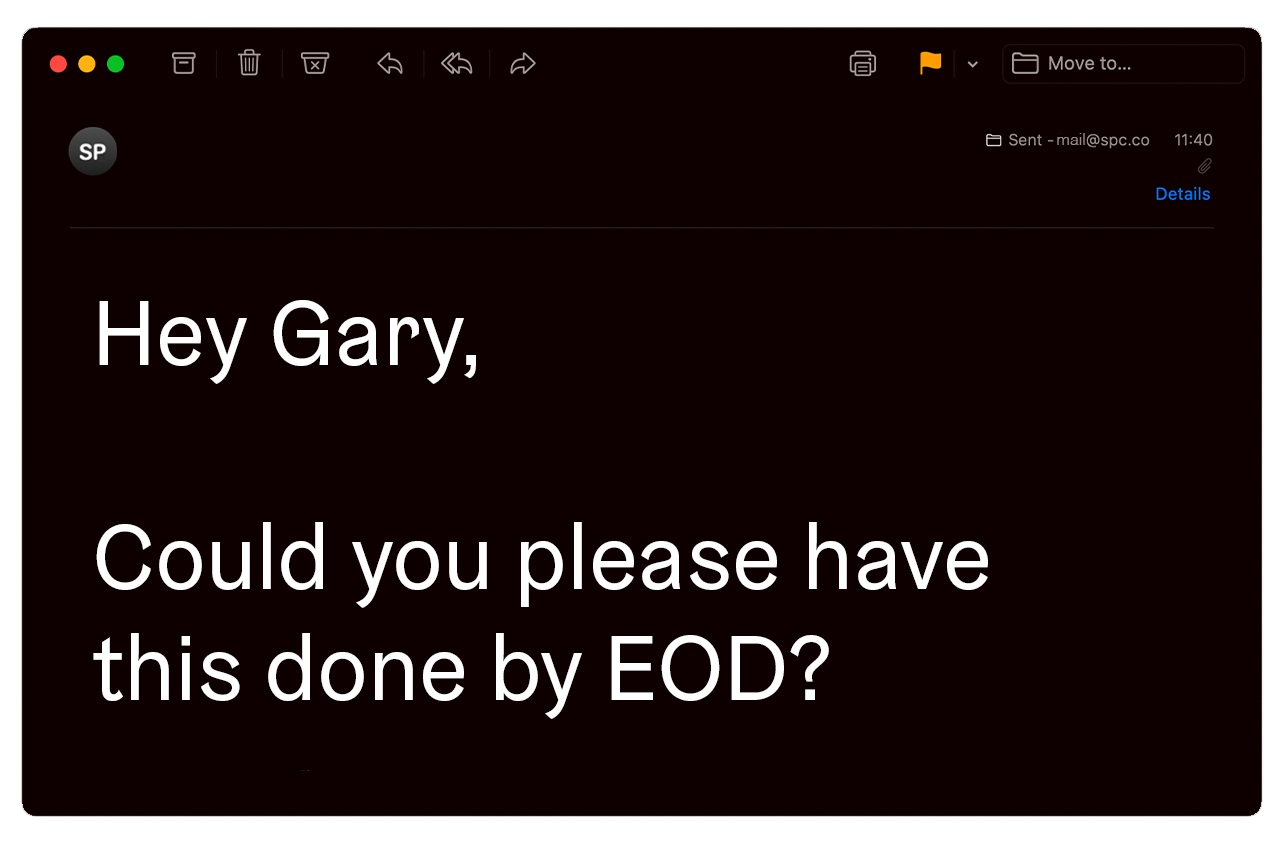

END OF DAY [E.O.D.]

— /ɛnd ɒv deɪ/

A term used to denote the end of the day, which is at midnight. Often used when something needs to be done before the next day begins.

EN·VI·RON·MENT

— /ɛnˈvʌɪrə(n)m(ə)nt/

An environment refers to the digital creation of a setting or location within a movie or television show. This includes everything from the scenery to the lighting and weather effects. VFX artists use a range of techniques such as CGI, 3D modeling, and compositing to create realistic environments that seamlessly blend with live-action footage. Environments can range from fantasy worlds to real-world locations that are modified or enhanced for storytelling purposes. A convincing environment is crucial to immersing the viewer in the story and can be a key factor in the success of a visual effects-heavy production.

E·X·R

— /iː ɛks ɑː/

EXR is the file extension of OpenEXR, a high-dynamic-range image file format that is commonly used in visual effects and computer animation. EXR files can store multi-layer images (for example several AOVs in one file) with 16-bit (half) or 32-bit floating-point channels, and support both lossless and lossy compression, making them a popular choice for compositing, color grading, and other post-production tasks.

FLAME

— /fleɪm/

Autodesk Flame is a high-end compositing and finishing software used in visual effects and post-production workflows. It offers advanced tools for color grading, 3D compositing, and visual effects creation.

FO·CAL LENGTH

— /ˈfəʊkəl lɛŋ(k)θ/

Focal length is the distance between the optical center of a lens and the camera's sensor (or film) when the lens is focused at infinity, usually given in millimeters. In VFX, the focal length of a camera lens is important because it affects the way that objects appear in the final image. Together with the sensor size it determines the field of view, and it influences perspective distortion, depth of field, and the overall composition of the shot.
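The link between focal length, sensor size and field of view can be written down directly. This sketch assumes an ideal rectilinear lens focused at infinity with no lens distortion; real lenses deviate slightly, which is one reason lens grids are shot.

```python
import math

def horizontal_fov_degrees(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view: 2 * atan(sensor_width / (2 * focal_length))."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

# 36 mm is the width of a full-frame sensor.
for f in (18, 35, 50, 85):
    print(f, "mm ->", round(horizontal_fov_degrees(f, 36.0), 1), "degrees")
```

A longer focal length gives a narrower angle of view, which is why both focal length and sensor size must match when a real camera is recreated in 3D.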

FRAME

— /freɪm/

A single image in a sequence of images that make up a video or animation.

FRAME RATE

— /freɪm reɪt/

It is the rate at which images or frames are displayed per second (fps) in a video or animation, which determines the smoothness and perceived motion of the footage. For example, films are typically shot and projected at 24 fps, while television and online content are often produced at 25 or 30 fps. A higher frame rate generally results in smoother and more fluid motion, while a lower frame rate may appear choppy or stuttering.
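The frame rate also defines how timecode (as used in edit decision lists and on slates) maps to frame numbers. A minimal sketch, assuming whole-number frame rates and non-drop-frame timecode in the form HH:MM:SS:FF; drop-frame timecode for 29.97 fps follows different rules and is not covered here.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} is out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff

def frames_to_timecode(frames: int, fps: int) -> str:
    """Inverse of timecode_to_frames (non-drop-frame only)."""
    ss, ff = divmod(frames, fps)
    mm, ss = divmod(ss, 60)
    hh, mm = divmod(mm, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"

print(timecode_to_frames("00:00:10:00", 24))   # ten seconds of film
print(frames_to_timecode(90001, 25))
```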

F·TRACK

— /ˈef træk/

FTrack is a project management and production tracking software that is widely used in the visual effects industry. It is designed to help VFX studios and artists manage their projects, assets, and workflows more efficiently, from pre-production to post-production.

FULL C·G

— /fʊl siː dʒiː/

Full CG (Computer Graphics) refers to the process of creating visual effects entirely with computer-generated imagery (CGI). In other words, all of the elements in the shot are created using digital tools such as 3D software, without the use of practical effects or live-action footage.

F·X SIM·U·LA·TION

— /ɛf'ɛks ˌsɪm.jʊˈleɪ.ʃən/

Generating visual effects through the use of software simulations. This involves creating a virtual environment, defining the physical properties of objects within that environment, and then simulating how those objects interact with each other. For example, an FX simulation could be used to create the visual effects of a collapsing building, smoke and fire, as well as the behaviour of fluids or fur in a computer-generated environment.

GAM·MA

— /ˈɡamə/

The relationship between the brightness levels stored in an image and the amount of light that was used to capture or display it. Gamma is an important concept in VFX because it affects how images are displayed and how they are perceived by viewers. Gamma correction is a technique used to adjust the gamma of an image to match the expected display conditions. This is important because different devices and display systems may have different gamma characteristics, which makes it difficult to judge an image's true appearance across devices.
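As an illustration, here is gamma encoding and decoding with a simple power curve, assuming values between 0 and 1 and an exponent of 2.2. This is a simplification: real standards such as sRGB use a slightly different piecewise curve.

```python
def encode_gamma(linear: float, gamma: float = 2.2) -> float:
    """Linear light -> display-encoded value using a simple power-law curve."""
    return linear ** (1.0 / gamma)

def decode_gamma(encoded: float, gamma: float = 2.2) -> float:
    """Display-encoded value -> linear light (inverse of encode_gamma)."""
    return encoded ** gamma

mid_grey_linear = 0.18
print(round(encode_gamma(mid_grey_linear), 3))
```

An 18 % grey in linear light ends up at roughly the middle of the encoded range, which is why gamma-encoded images spend more of their values on darker tones, where the eye is more sensitive.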

GAUSS·I·AN SPLAT·TING

— /ˈɡaʊsɪən splatːɪŋ/

Gaussian splatting is a technique used to efficiently render and visualize 3D point cloud data onto a 2D image or surface. This technique is particularly useful for representing complex and detailed scenes, such as particle systems, volumetric data, or point-based rendering. In Gaussian splatting, each point in the 3D point cloud is projected onto the 2D image or surface using a suitable projection method. At the projected position on the image or surface, a Gaussian distribution or "splat" is generated around the point. The size and shape of the Gaussian distribution are determined by parameters such as the standard deviation or radius, which control the extent of the influence of the point.

The Gaussian distributions from all points in the 3D point cloud are then accumulated or blended together at each pixel or location on the image or surface. This accumulation process results in the final image or representation of the point cloud.

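Gaussian splatting is often mentioned together with NERFs, because both can reconstruct 3D scenes from photos or video, but the terms are not interchangeable: a NERF represents a scene with a neural network, whereas Gaussian splatting uses a large set of explicit Gaussian splats.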
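The accumulate-and-blend step described above can be illustrated in 2D. This simplified sketch assumes the points have already been projected to pixel coordinates and that every splat uses the same standard deviation `sigma`; the function name `splat` is hypothetical. Modern 3D Gaussian splatting for scene reconstruction additionally sorts splats by depth and blends them using per-splat opacity, which is left out here.

```python
import math

def splat(points, width, height, sigma=1.0):
    """Accumulate 2D Gaussian splats into a greyscale image.

    points: list of (x, y, value) already projected to pixel coordinates.
    Each point spreads its value over nearby pixels with weight
    exp(-d^2 / (2 * sigma^2)); each pixel is then normalised by its total weight.
    """
    acc = [[0.0] * width for _ in range(height)]
    wsum = [[0.0] * width for _ in range(height)]
    radius = int(math.ceil(3 * sigma))   # beyond 3 sigma the weight is negligible
    for px, py, value in points:
        for y in range(max(0, int(py) - radius), min(height, int(py) + radius + 1)):
            for x in range(max(0, int(px) - radius), min(width, int(px) + radius + 1)):
                w = math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2 * sigma ** 2))
                acc[y][x] += w * value
                wsum[y][x] += w
    return [[a / w if w > 0 else 0.0 for a, w in zip(ra, rw)] for ra, rw in zip(acc, wsum)]

# Two points on a 5 x 1 strip: a bright one on the left, a dark one on the right.
print(splat([(0.0, 0.0, 1.0), (4.0, 0.0, 0.0)], 5, 1))
```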

GLAZE

— /ɡleɪz/

Glaze is a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.

GLITCH

— /ɡlɪtʃ/

A temporary or random malfunction in a visual effect or digital image, often resulting in a distortion or disruption of the image or effect. Glitches are sometimes used intentionally in VFX as a stylistic choice, such as to create a digital distortion effect, but they can also be an unwanted artifact that needs to be corrected or removed. Glitches can be caused by a variety of factors, such as compression, data corruption, or rendering errors.

GRAIN

— /ɡreɪn/

The individual particles of silver halide in a piece of film that capture an image when exposed to light. Because the distribution and sensitivity of these particles are not uniform, they are perceived (particularly when projected) as causing a noticeable graininess. Different film stocks will have different visual grain characteristics.

GREEN SCREEN

— /ɡriːn skriːn/

Literally, a screen of some sort of green material that is suspended behind an object for which a matte is to be extracted. Ideally, the green screen appears to the camera as a completely uniform green field. It is used so that objects in front of the green screen can be cut out and placed into a different environment.

GREEN SPILL

— /ɡriːn spɪl/

Any contamination of the foreground subject by light reflected from the green screen in front of which it is placed.
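A common way to reduce green spill is to limit the green channel so it cannot be stronger than a reference built from red and blue. This sketch uses the average of red and blue as that limit, which is one of several usual choices (another is the larger of the two); values are assumed to be RGB between 0 and 1, and `despill_green` is a hypothetical name.

```python
def despill_green(r: float, g: float, b: float) -> tuple:
    """Clamp green so it never exceeds the average of red and blue."""
    limit = (r + b) / 2.0
    return (r, min(g, limit), b)

# A skin-tone pixel with a green cast from the screen (hypothetical values).
print(despill_green(0.8, 0.9, 0.6))
```

Neutral colors such as greys and whites pass through unchanged, because their green value never exceeds the red/blue average.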

HAN·DLES

— /ˈhandls/

Extra frames at the beginning and end of a shot that are not intended for use in the final shot but are included in the composite in case the shot's length changes slightly.

HIGH DY·NA·MIC RANGE IM·AGE [H.D.R.I.]

— /hʌɪ dʌɪˈnamɪk reɪn(d)ʒ ˈɪmɪdʒ/

A technique for capturing the extended tonal range in a scene by shooting multiple pictures at different exposures and combining them into a single image file that can express a greater dynamic range than a single exposure can capture.
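The merging step can be sketched for a single pixel. This assumes the pixel values have already been converted to linear light (real tools also recover the camera's response curve) and uses a simple triangular weighting that trusts mid-tones most, which is one common choice. Each exposure estimates the scene brightness as value divided by exposure time.

```python
def hat_weight(v: float) -> float:
    """Trust mid-tones most; near-black (noisy) and near-white (clipped) values least."""
    return 1.0 - abs(2.0 * v - 1.0)

def merge_exposures(samples):
    """Merge one pixel captured at several exposures.

    samples: list of (linear_pixel_value, exposure_time_seconds).
    Returns a weighted average of value / exposure_time.
    """
    num = den = 0.0
    for value, t in samples:
        w = hat_weight(value)
        num += w * value / t
        den += w
    if den == 0.0:
        raise ValueError("all samples are fully black or fully clipped")
    return num / den

# The same spot shot at 1/10 s, 1/4 s and 1/2 s; the last exposure is clipped at 1.0.
print(merge_exposures([(0.2, 0.1), (0.5, 0.25), (1.0, 0.5)]))
```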

HOU·DI·NI

— /huːˈdiːniː/

Houdini is a procedural 3D software by SideFX in which you can perform modeling, animation, VFX, lighting, rendering, and more.

I·MAX

— /ˈʌɪmaks/

The term IMAX stands for "Image MAXimum", referring to the system's ability to produce extremely high-quality and detailed images that are much larger than those of traditional cinema projection systems.

IN-CAM·ER·A EF·FECT

— /ˌɪn ˈkam(ə)rə ɪˈfɛkt/

In-Camera Effects are special effects achieved exclusively through the use of the camera at the time of recording by manipulating the camera itself or tweaking its basic settings without the use of VFX. Sometimes a combination of both in-camera effects and VFX can achieve the best possible outcome.

IN·TER·LA·CING

— /ˌɪntəˈleɪsɪŋ/

The technique used to produce video images whereby two alternating field images are displayed in rapid sequence so that they appear to produce a complete frame.
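In terms of pixel rows, each field simply holds every other line of the frame. This sketch treats a frame as a list of rows and splits it into the even-row and odd-row fields, then weaves them back together; which field is shown first (field order) depends on the video format and is not modeled here. Function names are hypothetical.

```python
def split_fields(frame):
    """Split a frame (list of pixel rows) into its two fields.

    The first field holds rows 0, 2, 4, ...; the second holds rows 1, 3, 5, ...
    """
    return frame[0::2], frame[1::2]

def weave(first, second):
    """Interleave two fields back into one full frame."""
    frame = []
    for a, b in zip(first, second):
        frame.extend([a, b])
    frame.extend(first[len(second):])   # odd row count: the first field has one extra row
    return frame

frame = [["row0"], ["row1"], ["row2"], ["row3"], ["row4"]]
top, bottom = split_fields(frame)
print(top, bottom)
```

If the two fields were captured at different moments and are woven together anyway, moving objects show the typical comb-like interlacing artifacts.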

IN·TER·PO·LA·TION

— /ɪnˌtəːpəˈleɪʃn/

The process of estimating values for unknown data points that fall between known data points. This is commonly used in VFX for tasks such as:

1. Frame interpolation: When a video clip has a lower frame rate than desired, interpolation can be used to create new frames in between the existing frames to create a smoother motion.

2. Camera tracking: In camera tracking, interpolation can be used to estimate the position and orientation of the camera at intermediate points between keyframes.

3. 3D animation: In 3D animation, interpolation is used to generate smooth transitions between keyframes for the movement of objects or characters.
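The simplest form, linear interpolation between keyframes, can be sketched as follows. Keyframes are assumed to be sorted (frame, value) pairs, and the value is held constant before the first and after the last key. Animation packages often default to smooth spline curves instead, so this is the most basic case only; the function name and keyframe values are hypothetical.

```python
from bisect import bisect_right

def interpolate(keys, frame):
    """Linearly interpolate a value at `frame` from sorted (frame, value) keyframes."""
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)      # index of the first key after `frame`
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)         # 0 at the left key, 1 at the right key
    return v0 + (v1 - v0) * t

keys = [(1, 0.0), (11, 100.0), (21, 50.0)]   # hypothetical x-positions of an object
print([interpolate(keys, f) for f in (1, 6, 11, 16, 25)])
```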

I·TER·A·TION

— /ˌɪtəˈreɪʃn/

Another word for feedback rounds. Iterations are necessary during the process to improve the quality of the end product.

JIT·TER

— /ˈdʒɪtə/

It refers to a sudden or undesired movement in the footage that appears shaky or unstable. VFX artists use tools and techniques to stabilize the footage and reduce jitter.

JOINT

— /dʒɔɪnt/

In 3D animation, a joint is a connection point between two or more objects that allows them to move and rotate in relation to each other. Joints are often used to create complex movements in characters or mechanical objects.

J·PEG

— /ˈdʒeɪpɛɡ/

A (typically lossy) compression technique or a specific image format that utilizes this technique. JPEG is an abbreviation for the Joint Photographic Experts Group.

KEY FRAME

— /ˈkiː freɪm/

In animation, a keyframe is a significant point in the animation timeline where the animator defines the start or end of an action, a pose, or a change in the movement of the object being animated. It is a reference point or a signpost that informs and guides the movement of objects or the flow of frames in an animation. Keyframes are essential in creating smooth and realistic animation because they help in defining the motion path and timing of objects or characters in a scene.

KEY·ING

— /kiːɪŋ/

Keying is the process of removing or isolating a specific color or range of colors from a video or image. This can be done using a color keying tool in video editing or compositing software, which allows the user to remove a background by choosing a specific color, such as green or blue, and keying it out. Once the background is removed, the foreground subject can be placed onto a new background or layered with other elements for a more complex scene. Keying is commonly used in film and TV production for creating special effects, such as chroma keying for green screen shots. It is often combined with rotoscoping, in which objects in motion are masked by hand for more precise control.

LAY·ER

— /ˈleɪə/

In VFX, a layer is a single element of an image or video that can be manipulated separately from other layers. Layers are stacked on top of each other in a composition, with the top layer being the most visible. By organizing images or footage into layers, VFX artists can apply effects, masks, and color grading to specific layers, without affecting other layers or the overall composition.

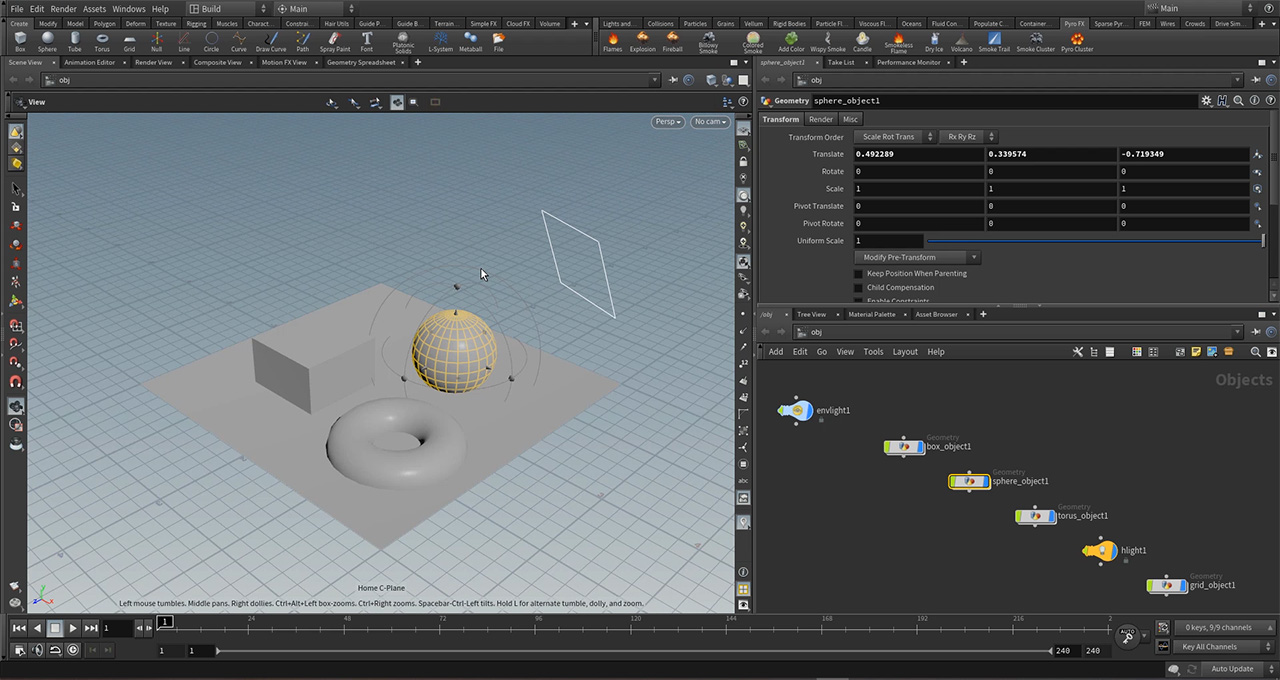

LAY·OUT

— /ˈleɪaʊt/

Layout, also known as composition, refers to the arrangement of visual elements in a scene. In VFX, layout involves positioning each element, such as characters, props, and digital environments, in the correct location and distance from the camera to create a believable setting. It includes determining the camera placement, the placement of the actors or objects, and the lighting of the scene.

LENS FLARE

— /lɛnz flɛː/

An artifact of a bright light shining directly into the lens assembly of a camera.

LENS GRID

— /lɛnz ɡrɪd/

A lens grid is a reference tool filmmakers use to help align multiple cameras during a shoot or to precisely match the perspective of computer-generated environments with live-action footage. It is a matte board printed with a series of evenly spaced, intersecting lines; filming it makes the distortion of each lens visible so that things can be lined up correctly.

LET·TER·BOX

— /ˈlɛtə bɒks/

A black or colored bar that appears at the top and bottom (or at the sides) of an image when the image's aspect ratio does not match that of the screen.
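The size of the bars follows from scaling the image to fit the screen without cropping. A minimal sketch with pixel sizes; `letterbox_bars` is a hypothetical helper. Bars on the sides are often called a pillarbox.

```python
def letterbox_bars(screen_w, screen_h, image_w, image_h):
    """Fit an image inside a screen without cropping.

    Returns (top_bottom_bar, left_right_bar): the size of EACH bar in pixels.
    """
    scale = min(screen_w / image_w, screen_h / image_h)
    fitted_w = round(image_w * scale)
    fitted_h = round(image_h * scale)
    return ((screen_h - fitted_h) // 2, (screen_w - fitted_w) // 2)

print(letterbox_bars(1920, 1080, 2390, 1000))   # 2.39:1 film on a 16:9 screen
print(letterbox_bars(1920, 1080, 1440, 1080))   # 4:3 footage on a 16:9 screen
```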

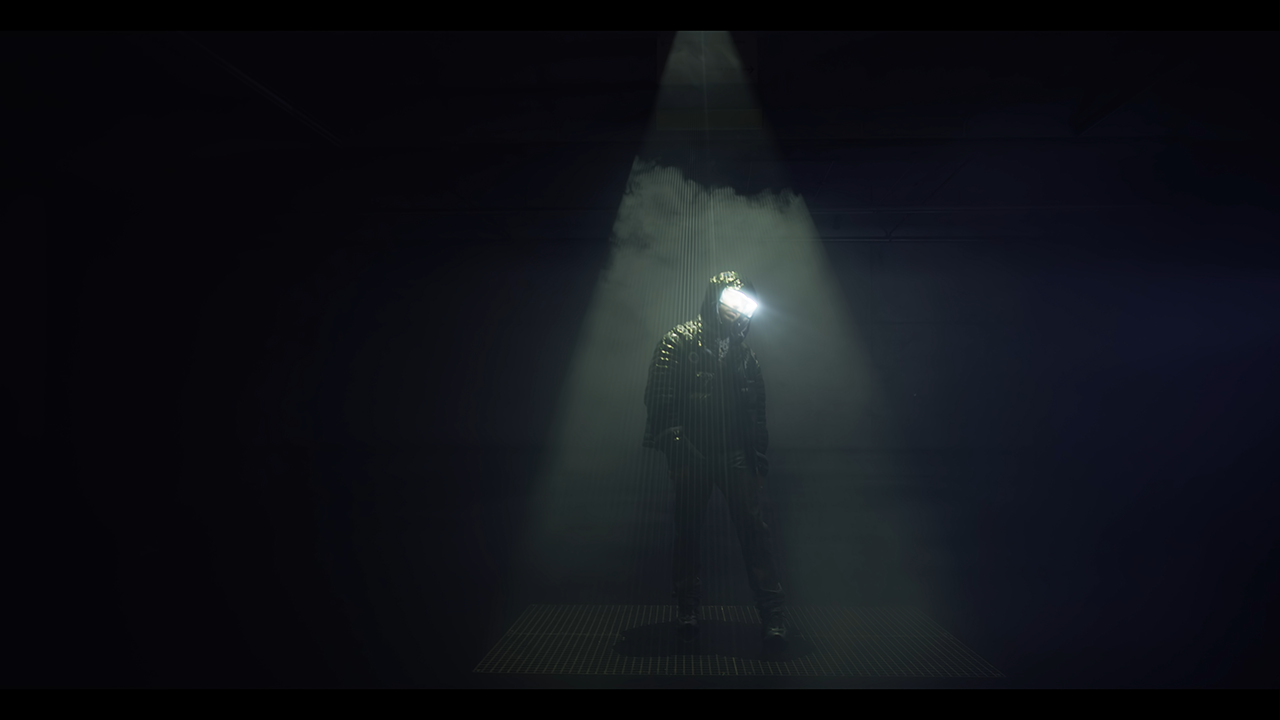

LIGHT·ING

— /ˈlʌɪtɪŋ/

In VFX, lighting is the process of adding light sources to create a certain atmosphere or mood in a scene, or to highlight a specific object or character. Proper lighting can make an animation or visual effect appear more believable and enhance the realism of the scene. It can also create a feeling of depth, help direct attention to key elements in the scene and help set the mood for the shot. Lighting involves the precise placement and control of different light sources, such as spotlights, ambient lighting, and shadows, to create the desired effect.

LOCKED CAM

— /lɒkt kam/

It refers to a camera that is placed on a tripod or held steady throughout the shot to avoid any movement or shaking. This technique is used for stable and composed shots.

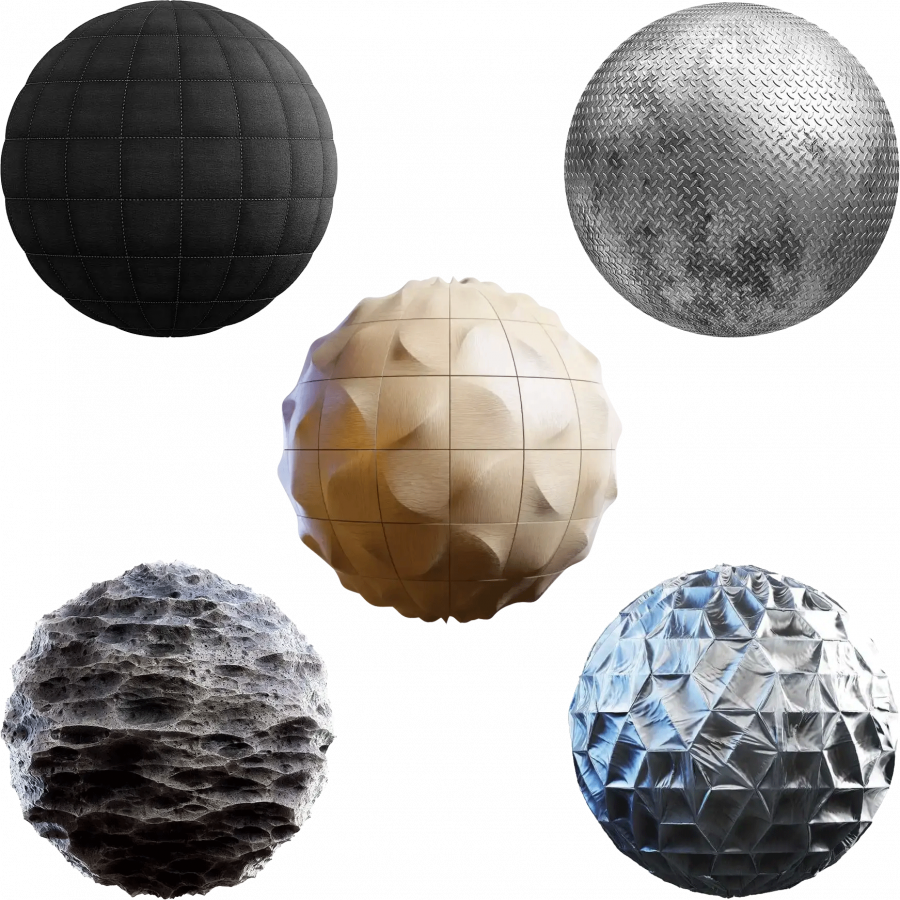

LOOK DE·VE·LOP·MENT

— /lʊk dɪˈvɛləpm(ə)nt/

LookDev is a process of building up and defining the look of CG characters and objects on the screen using texturing, lighting, coloring, grooming, and other steps that help the artists to make CG elements come to life.

LOOK·UP TA·BLES [L.U.T.]

— /lʊk'ʌp ˈteɪbls/

It is a pre-defined color table that is used to transform the color and tone of an image or footage. LUT can be used for color correction, grading, and matching of shots in a sequence.
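Applying a LUT means looking up each input value in the table and interpolating between the nearest entries. Grading LUTs are usually 3D (indexed by red, green and blue together); the 1D sketch below shows the same lookup-and-interpolate idea for a single channel, assuming inputs between 0 and 1 and a table with at least two entries. The LUT values are hypothetical.

```python
def apply_1d_lut(value: float, lut: list) -> float:
    """Map an input in 0..1 through a 1D LUT, interpolating between entries."""
    value = max(0.0, min(1.0, value))
    pos = value * (len(lut) - 1)          # position in table coordinates
    i = min(int(pos), len(lut) - 2)       # index of the lower neighbouring entry
    t = pos - i
    return lut[i] + (lut[i + 1] - lut[i]) * t

# Hypothetical 5-entry LUT that lifts the shadows and softens the highlights.
lut = [0.05, 0.3, 0.55, 0.78, 0.95]
print([round(apply_1d_lut(v, lut), 3) for v in (0.0, 0.125, 0.5, 1.0)])
```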

LU·MA KEY

— /ˈluːma kiː/

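It is a technique used for separating the subject from the background based on brightness or luminance values. Unlike chroma keying, which uses a specific color such as green, luma keying works best when subject and background differ clearly in brightness, for example a subject shot against a black or white background.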
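A luma key first turns each pixel into a single brightness value and then maps that value to a matte. This sketch uses the Rec. 709 luma weights and a soft ramp between two thresholds; the threshold values and function names are illustrative assumptions.

```python
def luma(r: float, g: float, b: float) -> float:
    """Brightness using the Rec. 709 luma weights."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def luma_key_alpha(r, g, b, low=0.1, high=0.3):
    """Matte from brightness: below `low` -> 0 (transparent), above `high` -> 1 (opaque).

    Values in between ramp linearly, which gives a soft edge.
    """
    y = luma(r, g, b)
    if y <= low:
        return 0.0
    if y >= high:
        return 1.0
    return (y - low) / (high - low)

# Keying out a black background: black disappears, bright pixels stay.
print(luma_key_alpha(0.0, 0.0, 0.0), luma_key_alpha(0.2, 0.2, 0.2), luma_key_alpha(0.9, 0.8, 0.7))
```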

MATCH MOVE

— /matʃ muːv/

The process of extracting the camera move from a live-action plate in order to duplicate it in a CG environment. A matchmove is often created by hand as opposed to 3D tracking in which special software is used to help automate the process.

MATTE PAINT·ING

— /mat ˈpeɪntɪŋ/

From the small to the large, sometimes you need to create entire landscapes. For this you may be able to use a matte painting, which is a 2D, digitally painted background that can be added to your scene. Before digital production became the industry standard, matte paintings were painted onto glass. Today, they are created with digital software.

MESH

— /mɛʃ/

The actual surface of every digital object. It is built out of individual polygons connected to each other.

MOCK·UP

— /mɒk'ʌp/

A preliminary or rough setup of a design or object used for testing or evaluation purposes.

MO·DEL·ING

— /ˈmɒdlɪŋ/

The process of designing and creating a 3D digital model of an object, character or environment in computer graphics.

MOI·RÉ EF·FECT

— /mwaˈɹeɪ əˈfɛkt/

The moiré effect is a visual phenomenon that occurs when two similar patterns are overlaid or placed near each other, creating a new pattern with a distorted, rippled appearance. For example, filming an LED wall from certain distances and angles can result in this effect.

MOOD

— /muːd/

The emotional atmosphere or tone conveyed by a visual image or scene.

MORPH·ING

— /mɔːfɪŋ/

A special effects technique used to transform one image or object into another through a sequence of smoothly blended transitions.

MO·TION BLUR

— /ˈməʊʃn bləː/

A visual effect that occurs when objects in motion appear blurred or smeared in a photograph or video.

MO·TION CAP·TURE [MOCAP]

— /ˈməʊʃn ˈkaptʃə/ [/ˈməʊkap/]

A technique used to capture the movement of live actors or objects and transform it into a digital form for use in animation and visual effects.

MO·TION CON·TROL [MOCO]

— /ˈməʊʃn kənˈtrəʊl/ [/ˈməʊkəʊ/]

A system for controlling the movement of cameras and other objects during filming or motion capture, often used in conjunction with computerized tracking and animation systems.

NEU·RAL RA·DI·ANCE FIELDS [NERFs]

— /ˈnjəɹəl ˈreɪdiːəns fiːldz/ [/nəːfs/]

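NeRFs are a 3D reconstruction technique that uses deep learning to build a representation of a real-world scene or object from a set of 2D images or video frames. The trained model can then render high-quality new views of that scene, including from camera positions that were never photographed.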

NIGHT·SHADE

— /ˈnʌɪtʃeɪd/

Nightshade is a tool designed to counteract the unauthorized use of artists' work by AI companies for training their models. It subtly alters images so that, when they are collected for training, they "poison" the training data. In this way Nightshade aims to disrupt the effectiveness of future iterations of image-generating AI models like DALL-E, Midjourney, and Stable Diffusion. This interference could make the outputs of these models inaccurate or unusable, for example dogs being misinterpreted as cats or cars being mistaken for cows.

NOISE

— /nɔɪz/

Refers to random variations in brightness or color that can occur in images or video. These variations can be caused by factors such as low light levels, high ISO settings, or digital compression. However, noise can also be used intentionally to create certain effects. For example, adding noise to a texture can give it a more organic or analog appearance.

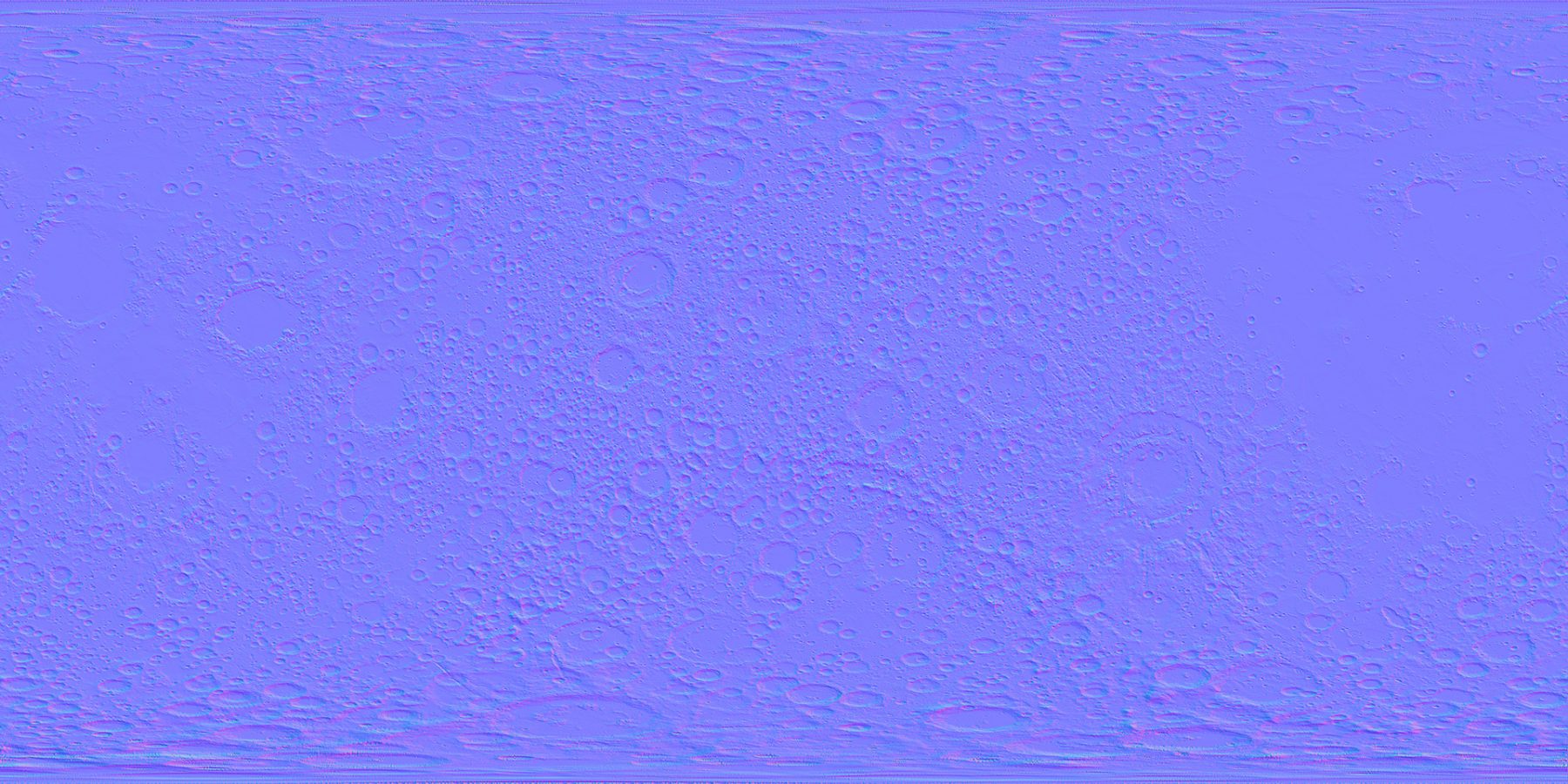

NOR·MAL MAP

— /ˈnɔːml map/

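A texture map that stores a surface direction (a normal) for each pixel in its red, green and blue channels. The render engine uses these directions when lighting the surface. This adds fine detail to a 3D object or surface without increasing the polygon count.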

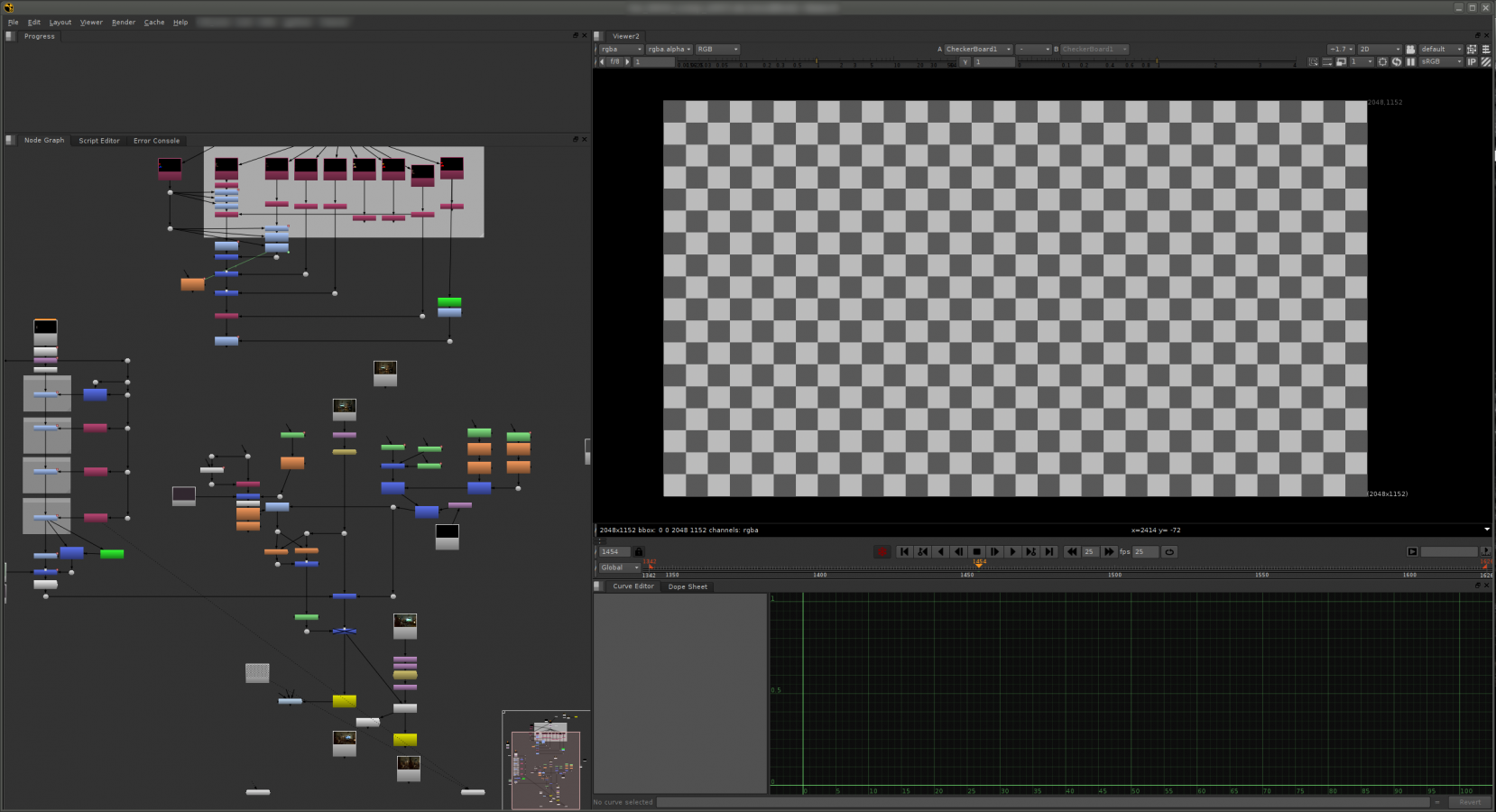
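The sketch below decodes one 8-bit normal-map pixel into a direction vector and uses it for simple diffuse (Lambert) lighting. It assumes the common tangent-space convention in which each channel maps 0..255 to -1..1 and "straight out of the surface" is +Z. Some tools flip the green channel. The function names are illustrative.

```python
# Normal-map sketch: decode an 8-bit RGB texel into a unit normal vector and
# use it for simple Lambert (diffuse) shading in tangent space.
# Assumes each channel maps 0..255 to -1..1; some tools flip the green channel.
import math

def decode_normal(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Map 8-bit color values to a normalized direction vector."""
    n = [c / 255.0 * 2.0 - 1.0 for c in (r, g, b)]
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)


def lambert(normal, light_dir) -> float:
    """Diffuse intensity: cosine between normal and light, clamped at 0."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


flat = decode_normal(128, 128, 255)      # the typical "flat" blue-purple texel
tilted = decode_normal(200, 128, 200)    # a texel tilted towards +X
light = (0.0, 0.0, 1.0)                  # light shining straight at the surface
print(lambert(flat, light), lambert(tilted, light))
```

The tilted texel receives less light even though the polygon under it is flat. That difference is the extra detail a normal map provides.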

NUKE

— /njuːk/

A powerful node-based compositing application used for VFX and post-production. It is widely used in the film and TV industry.

OFF·LINE

— /ɒfˈlʌɪn/

The process of editing and assembling the first cut of the footage, without any VFX or final color grading. Offline editing is used to create a rough cut or assembly of the footage.

ON·LINE

— /ɒnˈlʌɪn/

The final process of finishing and conforming the edited footage with VFX, color grading, and final sound mix. Online is the final stage of post-production.

O·PAQUE

— /ə(ʊ)ˈpeɪk/

Property of a material or object that does not allow light to pass through, making it appear solid or not transparent.

PACK·SHOT

— /pakʃɒt/

It is a type of shot used to showcase or promote a product, where the camera focuses on the product or packaging.

PAINT·ING IN

— /ˈpeɪntɪŋ ɪn/

It is a technique used for removing or adding elements or details in a shot using digital painting or retouching.

PA·RAL·LAX

— /ˈparəlaks/

Parallax is the apparent difference in an object's location or spatial relationship when it is seen from different vantage points. As the camera moves, near objects shift across the frame more than distant ones. Parallax can be used to add more depth to 2D shots, for example by moving foreground layers faster than background layers. You can also adjust focus and depth of field to make certain elements appear closer to or farther away from the camera.
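Under a simple pinhole-camera model, a sideways camera move shifts each layer on screen by an amount inversely proportional to its distance. This is the relationship a 2.5D parallax move imitates. The focal length in pixels, the layer names and the depths below are made-up example values.

```python
# Parallax sketch for a 2.5D camera move: the size of each layer's on-screen
# shift is proportional to the camera move and inversely proportional to the
# layer's depth (simple pinhole-camera model; example values only).

def screen_shift(camera_move_m: float, depth_m: float, focal_px: float) -> float:
    """Size of the horizontal on-screen shift (pixels) of a layer at depth_m."""
    return focal_px * camera_move_m / depth_m


layers = {"foreground tree": 2.0, "house": 10.0, "mountains": 1000.0}
for name, depth in layers.items():
    # Move the camera 0.5 m sideways with a focal length of 1500 px.
    print(f"{name:16s} {screen_shift(0.5, depth, 1500.0):8.2f} px")
```

In this example the tree moves 375 px while the mountains barely move. That difference in speed between layers is what the viewer reads as depth.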

PHO·TO·GRAM·ME·TRY

— /ˌfəʊtə(ʊ)ˈɡramɪtri/

The process of reconstructing highly detailed and precise 3D models and measurements of real-world objects and environments from many overlapping digital photographs.

PIC·TURE LOCK

— /ˈpɪktʃə lɒk/

A stage in the post-production process where the edit, including cuts and timing, of a movie or video is approved, meaning no further changes will be made to the visuals.

PIPE·LINE

— /ˈpʌɪplʌɪn/

A pipeline is the generic term used to describe a set of processes for achieving a certain result. It is most commonly used to describe the VFX pipeline. The VFX pipeline covers all the processes from pre-production through to post-production and delivery. It involves many things in this glossary, including previs, matte painting, and tracking. Creating a robust and efficient pipeline is a key part of developing a successful VFX company.

PIX·EL·A·TION

— /ˈpɪksleɪʃn/

This term refers to the visual effect that occurs when the individual pixels that compose an image become visible. Pixelation can occur for a number of reasons, such as when an image is enlarged beyond its original size or when compression algorithms reduce the resolution of an image.
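Pixelation can also be created on purpose, for example as a "mosaic" effect. The sketch below replaces every square tile of a small grayscale image with the tile's average value. This makes the blocks visible in the same way that enlarging a tiny image does. The function name and the plain-list image format are illustrative choices.

```python
# Pixelation ("mosaic") sketch: replace every block x block tile of a
# grayscale image with the tile's average value.

def pixelate(image: list[list[float]], block: int) -> list[list[float]]:
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[y][x] for y in rows for x in cols]
            mean = sum(tile) / len(tile)
            for y in rows:
                for x in cols:
                    out[y][x] = mean
    return out


gradient = [[float(x + 4 * y) for x in range(4)] for y in range(4)]
for row in pixelate(gradient, 2):
    print(row)
```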

PLATE

— /pleɪt/

The original footage that is used as a background for a visual effects shot. The plate is usually shot on location or in front of a green screen, and it serves as the canvas on which any digital elements will be added.

PLAY·BLAST

— /pleɪblɑːst/

A quick preview of an animation or visual effects shot that is generated by the software used to create the shot. A playblast is usually a low-resolution version of the final shot that can be reviewed quickly by the artist or supervisor.

P·N·G

— /ˌpiː ɛn ˈdʒiː/

Portable Network Graphics. This term refers to an image file format that is commonly used in digital graphics and visual effects. PNG files support transparency through an alpha channel and use lossless compression, so files can be made smaller without losing any image quality.
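The sketch below uses only the Python standard library. It writes a tiny PNG with an alpha channel and reads the pixels back, which shows that the DEFLATE (zlib) compression inside PNG is lossless. The byte layout (signature, IHDR, IDAT and IEND chunks, each with a CRC-32) follows the PNG format. The reader is deliberately minimal: it assumes 8-bit RGBA, no interlacing and filter type 0, so it only handles files written by this writer. The function names are illustrative.

```python
# PNG sketch: write a tiny RGBA image and read it back to show that PNG's
# DEFLATE compression is lossless. The reader only handles files produced by
# this writer (8-bit RGBA, no interlacing, filter type 0 on every scanline).
import struct
import zlib

SIGNATURE = b"\x89PNG\r\n\x1a\n"


def chunk(kind: bytes, data: bytes) -> bytes:
    """Length + type + data + CRC-32 of (type + data)."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def write_png(width: int, height: int, rgba: bytes) -> bytes:
    # IHDR: width, height, bit depth 8, color type 6 (RGBA),
    # compression 0, filter method 0, interlace 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 6, 0, 0, 0)
    stride = width * 4
    # Each scanline starts with a filter-type byte (0 = no filtering).
    raw = b"".join(b"\x00" + rgba[y * stride:(y + 1) * stride]
                   for y in range(height))
    return (SIGNATURE + chunk(b"IHDR", ihdr)
            + chunk(b"IDAT", zlib.compress(raw, 9)) + chunk(b"IEND", b""))


def read_png(data: bytes) -> tuple[int, int, bytes]:
    if data[:8] != SIGNATURE:
        raise ValueError("not a PNG file")
    pos, idat, width, height = 8, b"", 0, 0
    while pos < len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        kind = data[pos + 4:pos + 8]
        body = data[pos + 8:pos + 8 + length]
        if kind == b"IHDR":
            width, height = struct.unpack(">II", body[:8])
        elif kind == b"IDAT":
            idat += body
        pos += 12 + length                     # length + type + data + CRC
    raw = zlib.decompress(idat)
    stride = width * 4
    # Drop the filter byte in front of every scanline.
    pixels = b"".join(raw[y * (stride + 1) + 1:(y + 1) * (stride + 1)]
                      for y in range(height))
    return width, height, pixels


# 2x1 image: one opaque red pixel and one half-transparent blue pixel.
pixels = bytes([255, 0, 0, 255, 0, 0, 255, 128])
png = write_png(2, 1, pixels)
print(read_png(png) == (2, 1, pixels), len(png), "bytes")
```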

POINT CLOUD

— /pɔɪnt klaʊd/

A collection of individual points in three-dimensional space that are used to represent the surface of an object. Point clouds are often used in 3D scanning and modeling to capture the geometry of real-world objects.

PO·LY·GON

— /ˈpɒlɪɡ(ə)n/

This term refers to a flat, two-dimensional shape that is composed of three or more straight lines. In 3D graphics, polygons are used to create the surfaces of 3D models by connecting a series of vertices or points in three-dimensional space.
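A common way to store polygons is a list of vertex positions plus a list of faces that refer to those vertices by index. The sketch below uses that layout and computes each face's normal (the direction it faces) from the cross product of two of its edges. The two-triangle square is an example, and the helper names are illustrative.

```python
# Sketch of a polygon mesh: vertex positions plus faces that index into them.
# A triangle's normal is the normalized cross product of two of its edges.

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def face_normal(vertices, face) -> Vec3:
    """Unit normal of a triangle; counter-clockwise winding faces the viewer."""
    p0, p1, p2 = (vertices[i] for i in face)
    n = cross(sub(p1, p0), sub(p2, p0))
    length = sum(c * c for c in n) ** 0.5
    return (n[0] / length, n[1] / length, n[2] / length)


# A unit square in the XY plane built from two triangles.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
faces = [(0, 1, 2), (0, 2, 3)]
for face in faces:
    print(face, face_normal(vertices, face))
```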

POST·VIS

— /ˈpəʊstvɪz/

A type of visual effects shot that is generated after principal photography has been completed. Postvis shots are used to help directors and editors visualize complex or challenging sequences before expensive post-production work is undertaken.

PRE·GRA·DING

— /ˈpriːɡreɪdɪŋ/

It is a process of color correction and grading done before the final grade or online. It is used to create a rough grade and look for the footage.

PRE·MUL·TI·PLI·CA·TION

— /ˌpriːmʌltɪplɪˈkeɪʃn/

Premultiplication refers to a way of storing an image with an alpha channel in which the color values of every pixel have already been multiplied by that pixel's alpha (transparency) value. It is often shortened to premult. When working with VFX, you often have multiple layers or elements that need to be combined to create the final image. These elements can include things like characters, objects, backgrounds, and special effects. Each element may have transparent or semi-transparent areas around its edges. Knowing whether an element is premultiplied lets you blend these elements together correctly while preserving their transparency. Treating an element the wrong way produces bright or dark fringes around its edges.
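The sketch below converts a "straight" RGBA pixel to premultiplied form and layers it over a background. It uses the standard Porter-Duff "over" operation for premultiplied pixels: foreground + background × (1 − foreground alpha). Values are floats from 0 to 1, and the function names are illustrative.

```python
# Premultiplied-alpha sketch: convert a straight RGBA pixel to premultiplied
# form and layer it over a background with the "over" operation.

def premultiply(r, g, b, a):
    """Multiply the color channels by alpha."""
    return (r * a, g * a, b * a, a)


def unpremultiply(r, g, b, a):
    """Divide by alpha again (fully transparent pixels stay black)."""
    if a == 0:
        return (0.0, 0.0, 0.0, 0.0)
    return (r / a, g / a, b / a, a)


def over(fg, bg):
    """'Over' for premultiplied pixels: fg + bg * (1 - fg_alpha)."""
    k = 1.0 - fg[3]
    return tuple(f + b * k for f, b in zip(fg, bg))


# A half-transparent red edge pixel over an opaque blue background.
edge = premultiply(1.0, 0.0, 0.0, 0.5)   # -> (0.5, 0.0, 0.0, 0.5)
background = (0.0, 0.0, 1.0, 1.0)
print(over(edge, background))            # -> (0.5, 0.0, 0.5, 1.0)
```

Passing a straight (unpremultiplied) image to this formula makes semi-transparent edges too bright. Premultiplying an already premultiplied image a second time makes them too dark. These are the typical edge fringes seen in compositing.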

PRE·VIS

— /ˈpriːvɪz/

Previs is a collaborative process that generates preliminary versions of shots or sequences, predominantly using 3D animation tools and a virtual environment. Previs is used by filmmakers to explore creative ideas, plan technical solutions to shooting, and to help the whole production team visualise how finished 3D elements will look in the final project ahead of final animation being completed.

PRO·JEC·TION MAP·PING

— /prəˈdʒɛkʃn ˈmapɪŋ/

It is a technique used to project digital content on a physical object or surface, creating an illusion of 3D effects or animations. It is commonly used for advertising, events, and exhibitions.

PROMPT

— /pɹɒmpt/

In the context of AI, a prompt typically refers to a textual or visual input provided to a language model or other machine learning model that is designed to generate a response or output. A prompt can be as simple as a few words or as complex as a lengthy paragraph, and it is typically designed to elicit a specific type of response from the AI model. For example, a prompt could be a question, a statement, or a series of keywords that are used to guide the model's output.

Q·TAKE

— /kjuːˌteɪk/

QTAKE is a professional video assist software designed for use on film sets and in production environments. It is primarily used for real-time video playback and recording, allowing directors, cinematographers, and other production personnel to monitor and evaluate footage as it is being shot. QTAKE offers a range of features such as advanced playback controls, live grading, multi-camera support, and integration with various camera systems.

QUES·TION

— /ˈkwɛstʃ(ə)n/

If you have any questions, or if you have suggestions for additional words, please don't hesitate to reach out to us at: mail@spc.co.

REAL·TIME

— /ˈrɪəl ˌtʌɪm/

The process of generating and displaying images or animations instantly, without any noticeable delay. Real-time graphics are generated immediately in response to user input, so the user can interact with the visual content as it happens.

RE·FE·RENCE

— /ˈrɛf(ə)rəns/

In VFX, reference refers to the collection of images, videos, or visual aids used to guide the creation of digital assets or effects. It is a means of ensuring that the results match what everyone imagined.

RE·FRAC·TION

— /rɪˈfrakʃn/

An optical phenomenon that occurs when light passes from one transparent material into another, such as from air into water, glass or crystal, and bends as it changes direction. Refraction is commonly simulated in VFX to show light passing through different materials, which distorts the image seen through them.
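Ray tracers usually compute refraction with Snell's law, n1 · sin(θ1) = n2 · sin(θ2), written in vector form. The sketch below implements that form. It also reports total internal reflection, which happens when light inside a denser material hits the surface at too shallow an angle to leave it. The refractive index of about 1.5 for glass is a typical example value.

```python
# Refraction sketch: Snell's law (n1 * sin(theta1) = n2 * sin(theta2))
# in the vector form commonly used by ray tracers.
import math

def refract(incident, normal, n1, n2):
    """Return the refracted unit direction, or None on total internal reflection.

    incident -- unit vector travelling towards the surface
    normal   -- unit surface normal pointing back towards the incoming ray
    n1, n2   -- refractive indices (e.g. 1.0 for air, about 1.5 for glass)
    """
    eta = n1 / n2
    cos_i = -sum(i * n for i, n in zip(incident, normal))
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None                      # total internal reflection
    scale = eta * cos_i - math.sqrt(k)
    return tuple(eta * i + scale * n for i, n in zip(incident, normal))


up = (0.0, 1.0, 0.0)
theta = math.radians(30)
ray = (math.sin(theta), -math.cos(theta), 0.0)    # 30 degrees from the normal
bent = refract(ray, up, 1.0, 1.5)                 # from air into glass
print(math.degrees(math.asin(bent[0])))           # about 19.47 degrees
```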

REN·DER EN·GINE

— /ˈrɛndə ˈɛn(d)ʒ(ɪ)n/

It is a software component that is responsible for creating digital images from a 3D model or scene. The render engine produces the final output by performing complex calculations that simulate lighting, shading, reflections, refractions and other visual effects.

REN·DER PASS

— /ˈrɛndə pɑːs/

An individual component of a final rendered image. Each pass represents a particular aspect of the final image, such as diffuse, specular, reflection, or shadow. Render passes enable VFX artists to adjust each of these components separately during compositing.
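Many renderers output lighting passes (diffuse, specular, reflection and so on) that add up to the full "beauty" image. Which passes exist, and whether they sum exactly, depends on the render engine. The sketch assumes additive passes and shows them as single RGB pixels with made-up values. A compositor can scale each pass on its own before adding them back together.

```python
# Render-pass sketch: recombine additive RGB passes, scaling each one by an
# optional per-pass gain. Assumes the passes sum to the beauty image.
from typing import Optional

def recombine(passes: dict, gains: Optional[dict] = None) -> tuple:
    """Sum RGB passes, multiplying each one by its gain (default 1.0)."""
    gains = gains or {}
    total = [0.0, 0.0, 0.0]
    for name, rgb in passes.items():
        g = gains.get(name, 1.0)
        for c in range(3):
            total[c] += rgb[c] * g
    return tuple(total)


passes = {
    "diffuse":    (0.40, 0.20, 0.10),
    "specular":   (0.20, 0.20, 0.20),
    "reflection": (0.05, 0.05, 0.10),
}
print(recombine(passes))                        # the original beauty pixel
print(recombine(passes, {"specular": 0.5}))     # highlights toned down
```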

REN·DER·ING [REN·DER FARM]

— /ˈrɛndərɪŋ/

Rendering refers to the process of generating a final image or animation sequence from a 3D model or scene. A render farm is a high-performance computing environment designed to support the rendering of complex graphics or visual effects.

RIG·GING

— /ˈrɪɡɪŋ/

Rigging is the process of creating the digital skeletal structure and control systems that allow 3D models to be manipulated and animated. This process involves defining the various joints, bones, and controls that will be used to move the model in various ways.

RO·TO·SCOPE

— /ˈrəʊtəskəʊp/

A rotoscope was originally the name of a device patented in 1917 to aid in cel animation. It is now used as a generic term for ‘rotoing’. This is the process of cutting someone or something out of a more complex background to use them in another way. For example, you might want to put a VFX explosion behind your actors as they walk away from it towards the camera. You would need to rotoscope them out of the shot so you can place the explosion behind them but in front of the background scenery.

SAM·PLES

— /ˈsɑːmpls/

In computer graphics and VFX, samples are the number of rays (or light paths) the render engine traces for each pixel to calculate the final image. More samples result in a smoother and less noisy image, but also take longer to render.
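Render engines typically estimate each pixel by averaging random samples (Monte Carlo integration). The average error then shrinks roughly in proportion to 1 / √(samples), which is why halving the noise takes about four times as many samples. The sketch below uses a hypothetical "scene": one pixel exactly half covered by an object, so the true value is 0.5. It measures the average error for different sample counts.

```python
# Sampling sketch: estimate a half-covered pixel (true value 0.5) with random
# samples and measure how the average error falls as samples increase.
import random

def render_pixel(samples: int, rng: random.Random) -> float:
    hits = 0
    for _ in range(samples):
        x = rng.random()              # random position inside the pixel
        if x < 0.5:                   # left half is covered by the object
            hits += 1
    return hits / samples


def mean_error(samples: int, trials: int = 500, seed: int = 1) -> float:
    """Average absolute error over many independent renders of the pixel."""
    rng = random.Random(seed)
    return sum(abs(render_pixel(samples, rng) - 0.5)
               for _ in range(trials)) / trials


for n in (4, 16, 64, 256):
    print(f"{n:4d} samples -> average error {mean_error(n):.4f}")
```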

SEN·SOR SIZE

— /ˈsɛnsə ˈsaɪz/

Sensor size refers to the physical size of the image sensor in a camera. A larger sensor size typically provides better image quality, especially in low-light conditions, and also allows for a shallower depth of field.
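Sensor size also changes the field of view. With the same focal length, a smaller sensor records a narrower view. This matters when a CG camera has to match a live-action plate. The sketch compares sensors by "crop factor" (full-frame diagonal ÷ sensor diagonal, with full frame at 36 × 24 mm) and computes the horizontal angle of view with a simple pinhole model. The APS-C dimensions are an example; real cameras vary slightly.

```python
# Sensor-size sketch: crop factor relative to full frame (36 x 24 mm) and the
# horizontal angle of view for a given focal length (pinhole model).
import math

def diagonal(width_mm: float, height_mm: float) -> float:
    return math.hypot(width_mm, height_mm)


def crop_factor(width_mm: float, height_mm: float) -> float:
    return diagonal(36.0, 24.0) / diagonal(width_mm, height_mm)


def horizontal_fov(width_mm: float, focal_mm: float) -> float:
    """Horizontal angle of view in degrees."""
    return math.degrees(2.0 * math.atan(width_mm / (2.0 * focal_mm)))


for name, (w, h) in {"full frame": (36.0, 24.0), "APS-C": (23.5, 15.6)}.items():
    print(f"{name:10s} crop {crop_factor(w, h):.2f}  "
          f"50 mm lens -> {horizontal_fov(w, 50.0):.1f} deg")
```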

SHA·DING

— /ˈʃeɪdɪŋ/

Shading refers to the process of creating the appearance of 3D objects by applying textures, materials, and lighting to their surfaces. This can involve complex calculations to simulate how light interacts with different materials and surfaces.

SHOT-BASED

— /ʃɒt beɪst/

Shot-based refers to a production workflow that divides the visual effects work into individual shots, each with its own set of tasks and deadlines.

SI·MU·LA·TION

— /ˌsɪmjʊˈleɪʃn/

Simulation is the process of creating a digital model that mimics real-world physics, such as the behavior of fluids, cloth or particles. These simulations are often used in VFX to create dynamic and realistic effects.

SKIN·NING

— /ˈskɪnɪŋ/

Skinning is the process of attaching a 3D model to a rig or skeleton, as part of the rigging process, allowing it to be animated by a character animator.
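A widely used skinning method, though not the only one, is linear blend skinning. Each vertex stores a weight for every bone that influences it. The vertex's deformed position is the weighted average of where each bone's transform would move it. The sketch uses 2D affine matrices to stay short. The "upper arm" and "forearm" bones are a hypothetical example.

```python
# Skinning sketch: linear blend skinning with 2D affine bone transforms.
# Matrices are ((a, b, tx), (c, d, ty)); names are illustrative.
import math

def transform(m, p):
    """Apply a 2x3 affine matrix to point p."""
    (a, b, tx), (c, d, ty) = m
    x, y = p
    return (a * x + b * y + tx, c * x + d * y + ty)


def rotation(deg, pivot):
    """Rotation by `deg` degrees around `pivot`."""
    r = math.radians(deg)
    cs, sn = math.cos(r), math.sin(r)
    px, py = pivot
    return ((cs, -sn, px - cs * px + sn * py),
            (sn, cs, py - sn * px - cs * py))


def skin(vertex, influences):
    """influences: list of (bone_matrix, weight); weights should sum to 1."""
    x = y = 0.0
    for matrix, weight in influences:
        tx, ty = transform(matrix, vertex)
        x += weight * tx
        y += weight * ty
    return (x, y)


upper_arm = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0))   # bone stays in place
forearm = rotation(90.0, pivot=(1.0, 0.0))         # elbow bends at x = 1
wrist_skin = (2.0, 0.0)                            # fully driven by the forearm
near_elbow = (1.5, 0.0)                            # shared by both bones
print(skin(wrist_skin, [(forearm, 1.0)]))                       # about (1.0, 1.0)
print(skin(near_elbow, [(upper_arm, 0.5), (forearm, 0.5)]))     # about (1.25, 0.25)
```

The vertex near the elbow ends up halfway between where each bone would place it, which gives a smooth bend. Painting these weights is a large part of the skinning work.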

SLATE

— /sleɪt/

The slate is a tool used on film sets to mark and identify scenes and takes, reducing the chance of confusion or errors during post-production.

SPE·CIAL EF·FECTS [S.F.X.]

— /ˈspɛʃl ɪˈfɛkts/

Special effects are not visual effects, but it’s worth defining how the two are different. Visual effects are digitally created assets, while special effects are real effects done on set, for example explosions or stunts. Special effects can also include camera tricks or makeup. People often confuse SFX and VFX.

SPEED RAMP

— /spiːd ramp/

Speed ramping is a technique used in editing and VFX that involves changing the speed of footage over time, creating a slow-motion or fast-motion effect.
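A speed ramp is usually driven by a time remap, which maps each output frame to a (possibly fractional) source frame. The sketch builds that mapping by accumulating a per-frame speed factor. The ramp values are an example. Editing and compositing tools typically fill the fractional source frames with frame blending or optical-flow interpolation, which is not shown here.

```python
# Speed-ramp sketch: accumulate per-frame speed factors into a time remap
# (output frame -> source frame position). Example values only.

def time_remap(speeds: list[float]) -> list[float]:
    """Source-frame position shown on each output frame."""
    positions, source = [], 0.0
    for speed in speeds:
        positions.append(source)
        source += speed          # 1.0 = real time, 0.25 = 4x slow motion
    return positions


def linear_ramp(start: float, end: float, frames: int) -> list[float]:
    """Speed values that change linearly from `start` to `end`."""
    return [start + (end - start) * i / (frames - 1) for i in range(frames)]


# 4 frames at normal speed, a 5-frame ramp down to 0.25x, then 4 slow frames.
speeds = [1.0] * 4 + linear_ramp(1.0, 0.25, 5) + [0.25] * 4
for out_frame, src in enumerate(time_remap(speeds)):
    print(out_frame, round(src, 3))
```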

SPLINES

— /splʌɪns/

Splines are mathematical curves used to create and manipulate shapes, surfaces, and animations in a 3D environment. They are used extensively in 3D modeling, animation, and compositing software to create smooth, organic shapes and movements.
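One spline type found in many 3D, animation and compositing tools is the cubic Bézier curve. Its two inner control points act like the tangent "handles" artists drag in a curve editor. The sketch evaluates a Bézier segment with de Casteljau's algorithm, which is just repeated linear interpolation between control points. The control points are example values.

```python
# Spline sketch: evaluate a cubic Bezier segment with de Casteljau's algorithm.

def lerp(p, q, t):
    """Linear interpolation between two points of any dimension."""
    return tuple(a + (b - a) * t for a, b in zip(p, q))


def bezier(p0, p1, p2, p3, t):
    """Point on the cubic Bezier curve at parameter t in [0, 1]."""
    a, b, c = lerp(p0, p1, t), lerp(p1, p2, t), lerp(p2, p3, t)
    d, e = lerp(a, b, t), lerp(b, c, t)
    return lerp(d, e, t)


# An S-shaped curve from (0, 0) to (1, 1).
ctrl = ((0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0))
for step in range(5):
    t = step / 4
    print(t, bezier(*ctrl, t))
```

The curve starts and ends exactly on the outer control points but only passes near the inner ones. This is why moving a handle reshapes the curve smoothly.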

SQUEE·ZING

— /skwiːzɪŋ/

Squeezing refers to the process of compressing or stretching footage horizontally or vertically, often used to correct distortion or to fit footage of different aspect ratios together. This is mainly used while working with anamorphic material.
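Anamorphic lenses squeeze the image horizontally by a fixed factor, and post-production stretches it back by the same factor. The resolution and the 2x squeeze below are example values. The same result can also be reached by reducing the height instead of stretching the width.

```python
# Desqueeze sketch: stretch the width of anamorphic footage by the lens's
# squeeze factor and report the resulting aspect ratio (example values).

def desqueezed_size(width: int, height: int, squeeze: float) -> tuple[int, int]:
    """Frame size after stretching the width by the squeeze factor."""
    return round(width * squeeze), height


def aspect_ratio(width: float, height: float) -> float:
    return width / height


w, h = desqueezed_size(2880, 2160, 2.0)       # a 4:3 recording, 2x anamorphic
print(w, h, round(aspect_ratio(w, h), 2))     # 5760 2160 2.67
```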

STITCH·ING

— /ˈstɪtʃɪŋ/

Stitching involves combining multiple images or video streams together to create a larger, seamless image or video. This technique is often used in VR and 360-degree video production.

STYLE·FRAME

— /stʌɪl freɪm/

A styleframe is a still image that represents the intended look and feel of a VFX shot or project. It may include elements such as lighting, textures, and compositing to convey the desired visual style.

SUB·SUR·FACE SCAT·TER·ING [S.S.S.]

— /sʌb ˈsəːfɪs ˈskat(ə)rɪŋ/

Subsurface scattering is a technique used in VFX to simulate the way light penetrates and scatters inside translucent materials, such as skin or wax.

SU·PER

— /ˈsuːpə/

Super (short for superimposition) refers to a graphic or text overlay that appears on top of the video footage to convey information such as product names, logos or taglines.

TECH·NI·CAL DI·REC·TOR [T.D.]

— /ˈtɛknɪkl dɪˈrɛktə/

The person responsible for overseeing the technical aspects of a particular project or production, including technical design, implementation, and execution.

TEX·TUR·ING

— /ˈtɛkstʃərɪŋ/

The process of applying a texture or color to a 3D model to make it look more realistic and detailed.

T·G·A

— /ˌtiː dʒiː ˈeɪ/

The Targa Image File Format (short: TGA, usual file extension: .tga) is a file format for storing images and an alternative to TIFF, EXR or PNG. TARGA stands for "Truevision Advanced Raster Graphics Adapter". The TARGA file format was originally developed by Truevision in 1984.

TI·MING

— /ˈtʌɪmɪŋ/

A schedule document that lists the important steps in making a film and communicates their dates. In VFX it focuses on interim checks, feedback rounds and delivery dates.

TRACK·ING

— /ˈtrakɪŋ/

Tracking is the process of determining the movement of objects in a scene (relative to the camera) by analysing the captured footage of that scene. 2D tracking is dependent on tracking points in the image. These can be tracking markers or points on objects being tracked.

TRAN·SI·TION

— /tranˈzɪʃn/

A technique used in editing to smoothly move between two different scenes or shots, often using fades or dissolves.

TURN·O·VER

— /ˈtəːnˌəʊvə/

The point at which one production department hands over their work to another department to continue on the production process.

UN·REAL EN·GINE

— /ʌnˈrɪəl ˈɛndʒɪn/

A popular real-time 3D engine used for creating interactive experiences and games, as well as for VFX production.

UP·SCALE

— /ˌʌpˈskeɪl/

Increasing the resolution or size of an image or video by using an algorithm or specific software. Upscaling is used to improve the apparent quality of a lower-resolution image or video. For example, a video with 720p resolution can be upscaled to 1080p, 4K or higher. While upscaling cannot recover detail that was never in the original image or video, it can make it appear sharper and clearer.

US·ER IN·TER·FACE [U.I.]

— /ˈjuːzə ˈɪntəfeɪs/

The graphical interface of a software program that allows users to interact with and control the program.

U·V MAP·PING

— /ˌjuː ˈviː ˈmapɪŋ/

The process of projecting a 2D image onto a 3D model's surface.

U·V UN·WRAP·PING

— /ˌjuː ˈviː ʌnˈrapɪŋ/

It is a process of mapping a 3D model's surface onto a 2D plane or image. In other words, it is the process of flattening a 3D surface to a 2D surface to be able to apply textures, colors or designs on it. This technique is essential for a VFX artist to apply textures and materials on 3D models accurately.

VEC·TOR GRAPH·IC

— /ˈvɛktə ˈɡrafɪk/

A type of digital artwork that is created using mathematical equations and lines, which can be scaled up or down without losing crispness and quality.

V·F·X SU·PER·VI·SOR

— /ˌviː ɛf ˈɛks ˈsuːpəvʌɪzə/

A VFX Supervisor is the head of a VFX project who manages the work of the creative team from the early stages of preproduction all the way to the final steps of post-production. VFX supervisors are a link between a VFX studio and the director or producer of a movie/TV show.

VIR·TU·AL PRO·DUC·TION

— /ˈvəːtʃʊəl prəˈdʌkʃn/

Virtual production is a technique used in VFX to create and capture live-action footage using virtual environments and real-time rendering technologies. The goal of virtual production is to enable filmmakers and VFX artists to create high-quality visual effects and complex scenes more efficiently and with greater control over the final result.

In a virtual production pipeline, live actors are typically filmed on a physical set or stage that is surrounded by LED screens or projection surfaces. These screens display digital backgrounds and elements in real time, allowing the filmmakers to see how the final shot will look as it is being filmed. The virtual environments are typically created using 3D modeling and animation software, and they can be customized and modified on the fly to match the needs of the scene.

VIR·TU·AL RE·A·LI·TY [V.R.]

— /ˈvəːtʃʊəl rɪˈalɪti/

A computer-generated environment that simulates a user's physical presence within a digital or simulated world.

VIS·U·AL EF·FECTS [V.F.X.]

— /ˈvɪzjʊəl ɪˈfɛkts/

Visual Effects, commonly referred to as VFX, are the processes of creating, manipulating or enhancing imagery for films, television shows, video games, and other media. VFX are used to create environments, characters, creatures, objects, and special effects that cannot be captured during live-action filming.

VOL·UME

— /ˈvɒljuːm/

Volume refers to a 3D space that contains some form of physical material or effect, such as smoke, fire, dust, or fog. Volumes allow VFX artists to create complex, realistic effects that can be animated and rendered in a 3D environment.

WARP·ING

— /wɔːpɪŋ/

Warping is a technique used in VFX to distort or manipulate an image or video clip. It is commonly used to correct lens distortion, or to create effects such as morphing or stretching objects in a scene.
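A common warp for lens-distortion correction is a radial polynomial model, the radial part of the Brown-Conrady model. It moves each point towards or away from the image center depending on its distance from the center. The sketch uses normalized coordinates with the center at (0, 0). The coefficients k1 and k2 are example values; in practice they are typically estimated from footage of a lens grid. The undistort step inverts the model with a simple fixed-point iteration, which is one of several possible approaches.

```python
# Lens-distortion sketch: radial model x_d = x * (1 + k1*r^2 + k2*r^4) in
# normalized image coordinates (center = (0, 0)), plus a numerical inverse.

def distort(x: float, y: float, k1: float, k2: float = 0.0) -> tuple[float, float]:
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd: float, yd: float, k1: float, k2: float = 0.0,
              iterations: int = 20) -> tuple[float, float]:
    """Invert `distort` with a fixed-point iteration (fine for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


corner = distort(0.8, 0.6, k1=-0.1)          # negative k1: barrel distortion
print(corner, undistort(*corner, k1=-0.1))   # second pair is close to (0.8, 0.6)
```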

WIRE RE·MO·VAL

— /ˈwʌɪə rɪˈmuːvl/

Wire removal is a VFX technique used to remove wires or cables that are visible in a shot. It is commonly used in film and television production to remove wires that are holding up props or to remove safety wires that are used to secure actors during stunts.

WIRE·FRAME

— /ˈwʌɪəfreɪm/

A wireframe is a visual representation of a 3D object or scene, created using lines or edges to represent the shape and form of the object. It is commonly used in 3D modeling and animation to help artists visualize and manipulate the geometry of a scene. Wireframes are often used as a reference for building and animating more complex 3D objects.

WORK·FLOW

— /ˈwɜːkfləʊ/

The process or sequence of tasks involved in the creation of VFX, from pre-production to post-production. It defines the order of things happening during the process. For example: does the grading happen before or after the VFX?

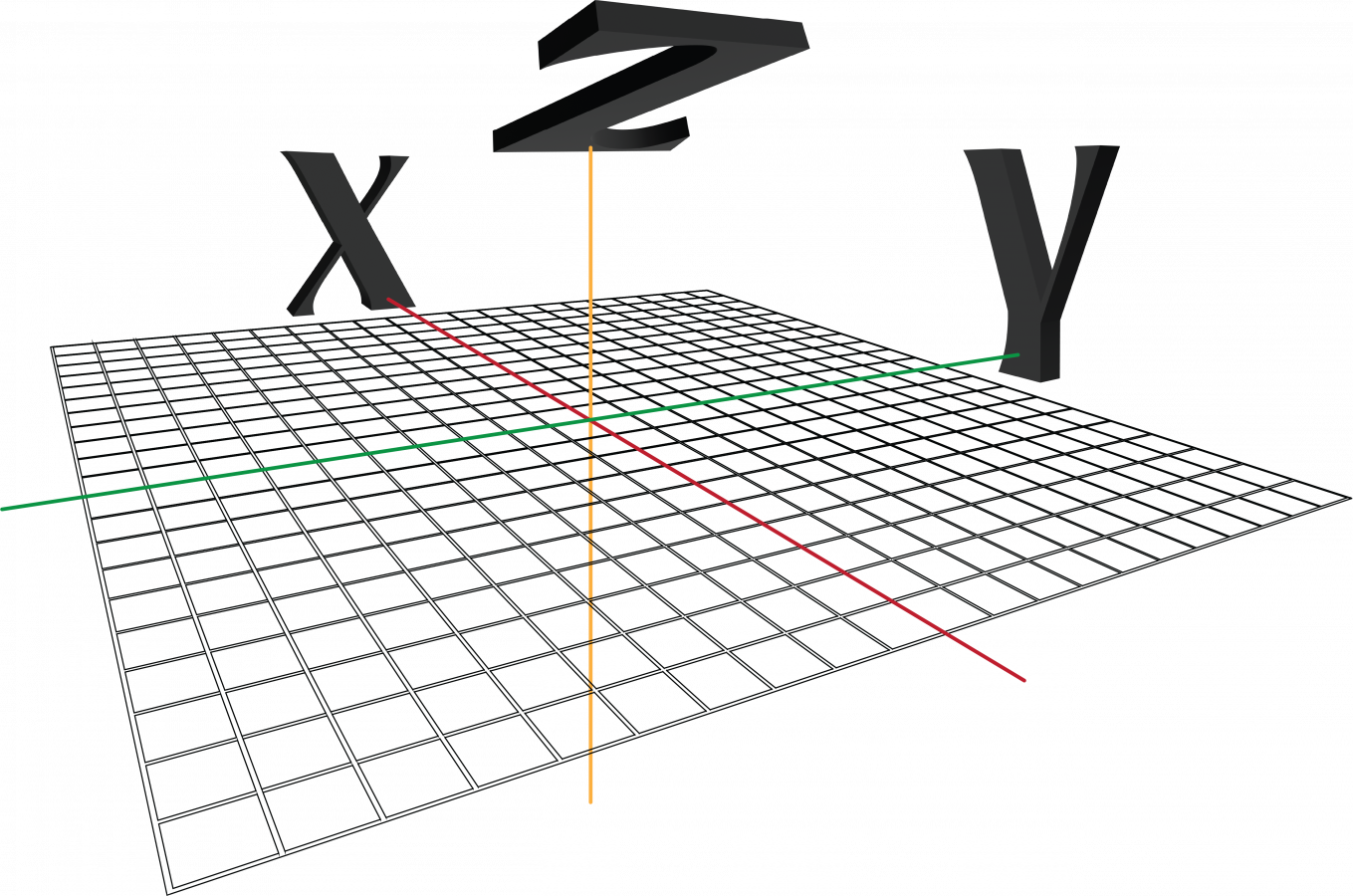

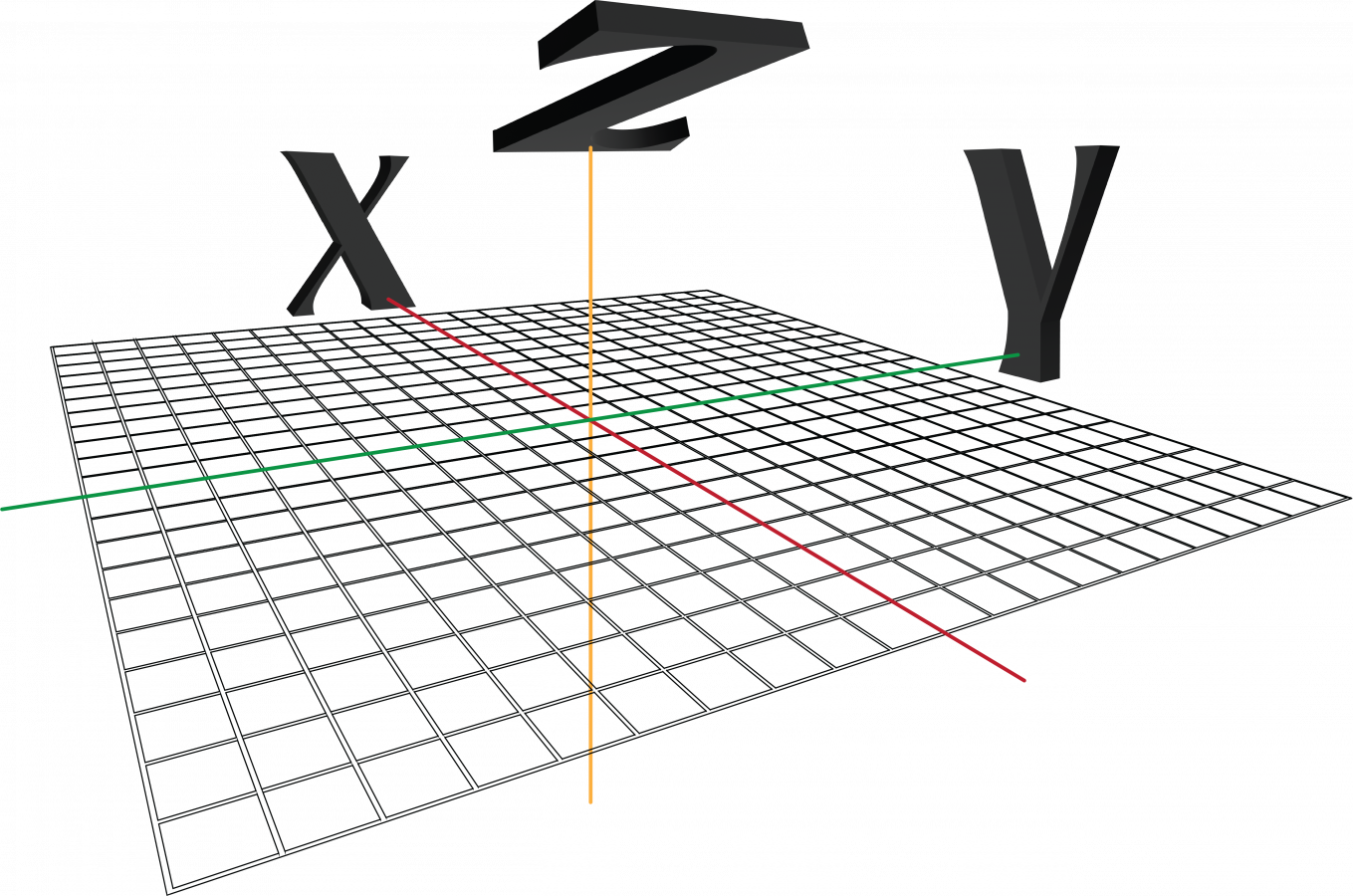

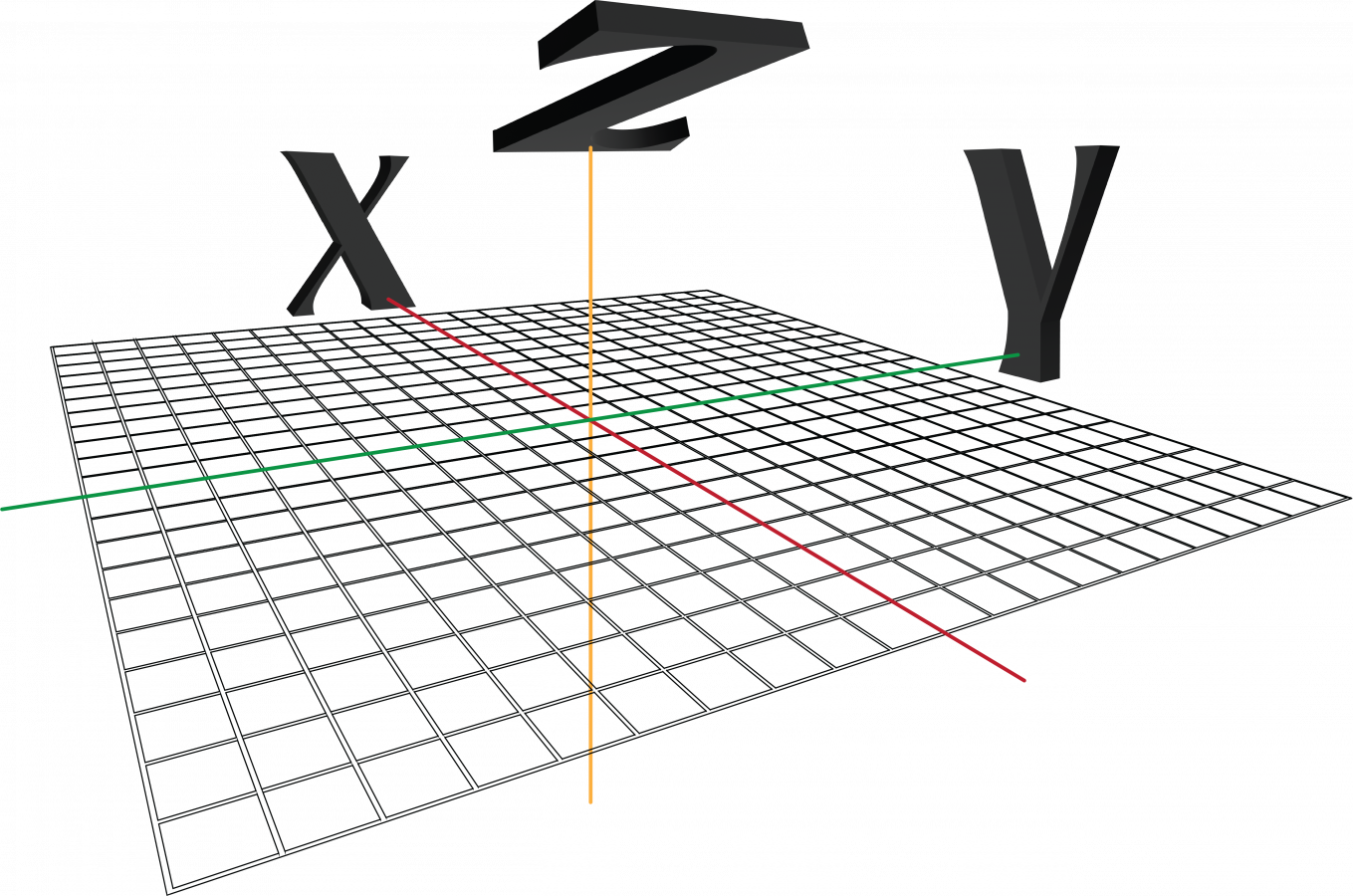

X-MA·TRIX

— /ɛks ˈmeɪtrɪks/

An X-Y-Z matrix is a three-dimensional structure whereby the x-axis and y-axis denote the first two dimensions and the z-axis is the third dimension. In a graphic image, the x denotes width, y denotes height and the z represents depth.

Y-MA·TRIX

— /wʌɪ ˈmeɪtrɪks/

An X-Y-Z matrix is a three-dimensional structure whereby the x-axis and y-axis denote the first two dimensions and the z-axis is the third dimension. In a graphic image, the x denotes width, y denotes height and the z represents depth.

Z-MA·TRIX

— /ziː ˈmeɪtrɪks/

An X-Y-Z matrix is a three-dimensional structure whereby the x-axis and y-axis denote the first two dimensions and the z-axis is the third dimension. In a graphic image, the x denotes width, y denotes height and the z represents depth.


A

— /eɪ ˈəʊ ˈviː/

AOV’s, or Arbitrary Output Variables, are additional image data channels or render passes that are generated during the rendering process to enable more precise control over the final image output.AOV’s allow artists to separate different elements of a rendered image, such as diffuse color, specular highlights, reflections, or shadows, into distinct channels, which can then be adjusted or manipulated separately in post-production. This can provide greater flexibility and control over the final image and allow for easier compositing and special effects work.

— /eɪs's/

"ACES" stands for "Academy Color Encoding System." ACES is a standardized color management system developed by the Academy of Motion Picture Arts and Sciences to ensure consistent and accurate color reproduction throughout the entire production and post-production workflow of a film or television project.

— /ˈalfə ˈtʃanl/

Alpha Channel, or Alpha Matte, is an additional channel of information that accompanies a digital image or video. It represents the transparency or opacity of the pixels in the image or video. The Alpha Channel can be thought of as a separate grayscale image that is layered on top of the main image, with white representing areas of the image that are fully opaque, black representing areas that are fully transparent, and shades of gray representing varying levels of transparency. You hear this term when somebody demands an object with a transparent background.

— /ˌanɪˈmeɪʃn/

Animation is a technique bringing a character or 3D model to life. It's done frame by frame, by moving different parts of the character to different positions. 2D Animation refers to the technique of creating the illusion of motion by displaying a sequence of rapidly changing static images.

— /anˌtɪsɪˈpeɪʃn/

This refers to a technique used in animation and visual effects to create a sense of expectation or preparation before a significant action or movement occurs.For example, in a scene where a character is about to jump, anticipation might involve the character crouching down, bending their knees, and shifting their weight before they spring up into the air.

— /əˈpruːvl/

A release that allows you to continue a specific part of your work. For example: Building something in CG is very time consuming and includes many different steps, it’s way more efficient creating a styleframe to get an idea of the final look. As soon as the styleframe is approved everything will be build in 3D.

— /ˈɑːtɪfakt/

An artifact is an undesired element in an image or a video that needs to be removed from as it has a negative effect on the quality of visual content. Artifacts often appear while shooting live-action footage as a side effect of weather conditions (e.g. damage to the camera lens, or when saving a file goes wrong). They can also appear during the rendering process. Artifacts can be used for creative purposes too.

— /ˌɑːtɪfɪʃl ɪnˈtɛlɪdʒ(ə)ns/

AI refers to the simulation of human intelligence processes by computer systems. In the context of VFX, AI can nowadays be used to generate different kind of styles, supporting rotoscoping and cleanup processes, or simplifying a variety of production steps. This book was written with the help of AI too.

— /ˈaspɛkt ˈreɪʃɪəʊ/

The proportional relationship between the width and height of a video or film frame. It is expressed as two numbers separated by a colon, such as 16:9 or 4:3.The first number in the aspect ratio represents the width of the frame, while the second number represents the height. For example, an aspect ratio of 16:9 means that the width of the frame is 16 units and the height is 9 units.

Some common aspect ratios used in video and film production include:

- 16:9 (widescreen): This aspect ratio is commonly used in modern television and video production. It is wider than the traditional 4:3 aspect ratio, providing a more immersive viewing experience.

- 4:3 (standard): This aspect ratio was commonly used in older television and film production. It is more square-shaped than the 16:9 aspect ratio, and may be used for a nostalgic or retro effect.

- 2.39:1 (CinemaScope): This aspect ratio is commonly used in feature films to provide a wide, panoramic view. It is wider than the 16:9 aspect ratio, and is often used for epic or visually stunning productions.

Nowadays more commonly used:

- 9:16 (phone): This is used for Instagram stories, reels and TikTok videos.

- 1:1 (square): Made popular by Instagram and is still used often for this purpose.

- 4:5 (high rectangle): The maximum height for a post in an Instagram carousel.

— /ˈasɛt/

A collective term for all kinds of elements used during a production. Usually it’s used for 3D Models and could be anything like a plane, a tree or even a box. It can also be used for matte paintings, stock footage, 2D Graphics or even brushes.

— /ɔːɡˈmɛntɪd rɪˈalɪti/

AR refers to the integration of digital content into the real world, creating an interactive experience using smartphones, tablets, or other devices equipped with cameras and sensors. AR can be used in VFX to enhance the real-world environment or to create virtual objects that are integrated into real-time application.

B

— /ˈbeɪkɪŋ/

This term refers to a process of pre-calculating or pre-rendering certain elements of a scene or animation and then saving the results to a file for later use. Baking is used to optimize rendering times and improve the overall efficiency of the VFX workflow.

— /ˈˈblɛndə/

Blender is a free and open-source software application from Blender Foundation that allows you to perform a great variety of creative tasks in one place. In Blender, you can perform 3D modeling, texturing, rigging, particle simulation, animating, match moving, rendering, and more.

— /ˈblɒkɪŋ/

In VFX, blocking refers to the process in which a pre-visualization or rough layout is created to plan and stage the action and camera movements in a scene or sequence. This helps the filmmakers to visualize the final outcome and make necessary adjustments before the actual shoot or animation.

— /bəʊns/

In character animation, bones are the digital or virtual joints which are used to pose and control the movement of the 3D or 2D character's parts, such as arms, legs, torso, and head.

C

— /siː dʒiː aɪ/

Computer-generated imagery. As the name suggests, these are the visual elements of your production that are created on a computer. Often used to refer specifically to 3D Computer Animation or as another term for Visual Effects or VFX. CGI and VFX are not the same though. CGI – and its integration – can be considered part of the VFX process.

— /tʃat dʒɪ'pi'tiː/

Chat GPT refers to a conversational model that uses the GPT (Generative Pre-trained Transformer) algorithm. It is an advanced artificial intelligence technology that allows machines to interact and communicate with humans in a natural language format. In simpler terms, Chat GPT is a chatbot or a virtual assistant that is capable of understanding natural language and responding appropriately. For example, the base of all descriptions in our ABC of VFX was written by Chat GPT.

— /ˈkrəʊməˌkiː/

Chroma key refers to a technique used to separate a part of an image from the rest of the content by using a specific color. This color (usually green or blue) can be replaced by different content.

— /krəʊm bɔːl/ — /ɡreɪ bɔːl/

A chrome ball, or mirrored ball, is a sphere that is perfectly round and reflective of all the surrounding light. It is used for visual effects to capture lighting information from set. Watching the chrome ball tells you where the light is positioned on set. A grey ball, or neutral reference ball, is used as a reference tool for lighting and color in a scene. After the shot is captured, VFX artists can use the grey ball to help ensure that the lighting and color of the scene are consistent and accurate. By analyzing the reflection of light on the grey ball, VFX artists can determine the direction, intensity, and color of the light sources within the scene.

— /kleɪ ˈrɛndə/

It is a 3D computer graphics term that refers to the rendering of an object or scene without any shading or texturing applied to it. It is called a "clay" render because the object appears as if it were made of clay, with no surface details visible.

— /ˈkliːnʌp/

It is a post-production term that refers to the process of removing unwanted elements from a shot or footage. It includes removing wires, rigging, tracking markers, or other elements that were visible during the shooting process but not intended to be in the final shot.

— /ˈkʌlə tʃɑːt/

It is a reference tool used in photography and videography to ensure accurate color representation. It consists of a standardized set of colors that are printed on a chart, making it easy for filmmakers and photographers to calibrate their equipment and ensure that the colors they capture are true to life. By comparing the colors in the image to those on the color chart, filmmakers can adjust their settings and ensure that the final product looks as intended.

— /ˈkɒmpəzɪtɪŋ/

The combination of at least two source images to create a new integrated image. Compositing happens when you put all your different ‘elements’ together: your 3D assets, your backgrounds, your particle effects, and your actual on-set footage. It also makes sure all of the elements are combined in the right way to end up with a harmonious picture.
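The most basic way two elements are combined is the "over" operation: the foreground is laid on top of the background, which shows through wherever the foreground is transparent. The sketch below implements over for a single pixel, assuming premultiplied RGBA values from 0 to 1 (color already multiplied by alpha); the function name is illustrative.

```python
def over(fg, bg):
    """Composite premultiplied RGBA pixel `fg` over `bg` (Porter-Duff over).

    out = fg + bg * (1 - fg_alpha), applied to all four channels,
    including alpha itself.
    """
    fg_alpha = fg[3]
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg, bg))

# A half-transparent red element over an opaque blue background.
print(over((0.5, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0, 1.0)))  # (0.5, 0.0, 0.5, 1.0)
```

Stacking many elements is just repeated application of over, from the backmost element forward.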

— /ˈkɔːp(ə)rət ʌɪˈdɛntɪti/

The visual representation of a brand or company which includes its logo, color palette, typography, and other design elements that create a distinctive and cohesive image for the company.

— /ˈkrɒpɪŋ/

Cropping means cutting away certain areas of the frame so the image fits a specific format. For example, you have to crop a wide rectangular image when you need to make it square.
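Finding which area to keep is simple arithmetic. The sketch below computes the largest centered region with a target aspect ratio (width divided by height). Keeping the crop centered is an assumption; in practice the crop is often shifted to keep the subject in frame. The function name is hypothetical.

```python
def center_crop_box(width, height, target_aspect):
    """Return (left, top, crop_width, crop_height) of the largest centered
    region of a width x height image with the given aspect ratio."""
    if width / height > target_aspect:
        # Source is wider than the target: trim the left and right sides.
        crop_w = round(height * target_aspect)
        crop_h = height
    else:
        # Source is taller than the target: trim the top and bottom.
        crop_w = width
        crop_h = round(width / target_aspect)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h

# Cropping a 1920 x 1080 frame to a square.
print(center_crop_box(1920, 1080, 1.0))  # (420, 0, 1080, 1080)
```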

— /ˈkʌtˌdaʊn/

A shorter version of a longer video or film that is created by cutting out the unnecessary or less important content to make it more concise and effective for a specific purpose, such as advertising or social media.

D

— /ˈdeɪliz/

This term refers to the state of a shot at the end of a working day. VFX artists render its current status so the production can make sure everything is on track. Dailies can be seen as a work-in-progress version of a task at the end of the day.

— /ˈdeɪtəˌmɒʃɪŋ/

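An intentional glitch effect created by manipulating compressed video data, for example by removing keyframes so that the motion information of one shot is applied to the pixels of another. It usually results in distorted, smeared pixels and colours that create unique and striking visual effects.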

— /deɪ fɚ nʌɪt/

This is a grading technique in which footage shot during the day is manipulated in post-production to look as if it was filmed at night. It is often done to save time and costs, or to achieve a specific look or effect.

— /diːp ˈkɒmpəzɪtɪŋ/

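This refers to a compositing technique in which each pixel stores several samples, each with its own depth, color, and opacity, instead of a single flat value. Elements can therefore be combined correctly by depth (for example, CG smoke drifting around a character) without separate holdout mattes, and they can be changed more freely than in traditional compositing. The trade-off is a much larger amount of data.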
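Why depth per sample matters can be shown by merging two deep pixels: gather all samples, sort them by depth, and composite them front to back. This is a minimal sketch under assumptions: each sample is a point at a single depth with premultiplied RGBA (real deep images, such as deep EXRs, also store volumetric samples with a front and back depth), and the function name is hypothetical.

```python
def flatten_deep_pixel(*sample_lists):
    """Merge deep samples from several elements and flatten to one RGBA value.

    Each sample is (depth, (r, g, b, a)) with premultiplied color.
    Smaller depth means closer to the camera.
    """
    samples = sorted((s for lst in sample_lists for s in lst), key=lambda s: s[0])
    out = [0.0, 0.0, 0.0, 0.0]
    for _depth, rgba in samples:
        # Fraction of light still passing through everything in front.
        remaining = 1.0 - out[3]
        for i in range(4):
            out[i] += rgba[i] * remaining
    return tuple(out)

smoke = [(5.0, (0.2, 0.2, 0.2, 0.5))]   # semi-transparent smoke at depth 5
tree = [(3.0, (0.0, 0.5, 0.0, 1.0))]    # opaque tree at depth 3, in front
print(flatten_deep_pixel(smoke, tree))  # the tree hides the smoke
```

If the tree were moved behind the smoke (depth 10), the same call would show the smoke partly covering it, with no new matte required.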

— /dɪˈfɔːmə/

This is a tool or effect that can be used in animation and modelling to manipulate the shape or geometry of an object or character.

— /dɪˈlɪv(ə)ri/

This term refers to the final output of a project or sequence, often requiring specific formats or standards for distribution or exhibition.

— /ˈdəːti θriːˈdiː/

This refers to 3D animation or modelling techniques that use low-quality or unconventional methods in order to create a specific style or look.

— /dɪsˈpleɪsm(ə)nt/

Displacement is a technique used to deform the geometry of 3D models. It works by using a grayscale image (known as a "displacement map") to modify the surface of a 3D model: the brightness values in the map correspond to the amount of displacement applied, with lighter areas being displaced more than darker areas. This allows artists to create realistic and detailed surface textures, as well as simulate natural phenomena such as waves or ripples.
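At its core, displacement moves each point of the surface along its normal by an amount read from the map. The sketch below assumes the map has already been sampled at every vertex (the `heights` list, values 0 to 1) and uses hypothetical names. The `midpoint` parameter reflects the common convention that a chosen grey value (often 0.5) means "no displacement"; with the default of 0.0, only lighter values push the surface outward.

```python
def displace(vertices, normals, heights, scale=1.0, midpoint=0.0):
    """Offset each vertex along its normal by (height - midpoint) * scale.

    vertices, normals: lists of (x, y, z) tuples, normals of unit length.
    heights: displacement map value sampled at each vertex (0..1).
    """
    return [
        tuple(p + n * (h - midpoint) * scale for p, n in zip(v, nrm))
        for v, nrm, h in zip(vertices, normals, heights)
    ]

# Two points on a flat floor; the second sits on a white area of the map.
print(displace([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
               [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
               [0.0, 1.0], scale=0.5))  # [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0)]
```

Renderers usually subdivide the mesh finely before displacing, so that the map's detail has enough vertices to move.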

— /dʌɪˈnamɪk ˌɛfˈɛks/

Dynamic FX is a broad category of VFX that encompasses a wide range of techniques and tools used to create realistic and visually striking simulations of physical phenomena, such as fire, smoke, water, and explosions.

— /dʌɪˈnamɪk reɪn(d)ʒ/

The range of tones or luminance levels from the darkest to the brightest parts of an image or footage, which determines the level of detail and depth of the visual information.
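Dynamic range is commonly expressed in photographic stops, where each stop is a doubling of light, so the number of stops is the base-2 logarithm of the brightest-to-darkest ratio. The sketch below assumes both values are linear luminance measurements; the function name is illustrative.

```python
import math

def dynamic_range_stops(darkest, brightest):
    """Dynamic range in stops between two linear luminance values."""
    if darkest <= 0 or brightest <= darkest:
        raise ValueError("need 0 < darkest < brightest")
    # Each stop doubles the light, so count the doublings.
    return math.log2(brightest / darkest)

print(dynamic_range_stops(1.0, 1024.0))  # 10.0
```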

E

— /ˌiːtiːˈeɪ/

ETA stands for "Estimated Time of Arrival." It is a term commonly used to indicate when someone or something is expected to arrive at a specific location or destination. ETA is used in many contexts; in VFX production, for example, someone might ask for the ETA of a file that is due to be delivered.

— /ˈɛdɪt dɪˈsɪʒn lɪst/

A list or file that contains information about the sequence, timing, and details of individual shots used in an edited video, which is used by the post-production team to conform the final timeline accurately.
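Conforming from an EDL relies on converting timecodes into frame counts. The sketch below assumes non-drop-frame timecode in the form HH:MM:SS:FF at a fixed frame rate, and treats an event's out point as exclusive (the frame after the last one used), which is the usual convention in CMX 3600-style EDLs. The function names are hypothetical.

```python
def timecode_to_frames(tc, fps=24):
    """Convert non-drop-frame timecode 'HH:MM:SS:FF' to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames

def event_duration(record_in, record_out, fps=24):
    """Length in frames of one EDL event, with an exclusive out point."""
    return timecode_to_frames(record_out, fps) - timecode_to_frames(record_in, fps)

print(timecode_to_frames("01:00:00:00"))              # 86400
print(event_duration("01:00:00:00", "01:00:05:12"))   # 132
```

Drop-frame timecode (used with 29.97 fps material) skips certain frame numbers and needs a different conversion.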

— /ɛnd ɒv ˈbɪznɪs/

A term used to denote the end of a working day or business hours in a company or organization. Usually between 6 PM and 8 PM.

— /ɛnd ɒv deɪ/

A term used to denote the end of the day, which is at midnight. It is often used when something needs to be done before the next day starts.

— /ɛnˈvʌɪrə(n)m(ə)nt/

An environment refers to the digital creation of a setting or location within a movie or television show. This includes everything from the scenery to the lighting and weather effects. VFX artists use a range of techniques such as CGI, 3D modeling, and compositing to create realistic environments that seamlessly blend with live-action footage. Environments can range from fantasy worlds to real-world locations that are modified or enhanced for storytelling purposes. A convincing environment is crucial to immersing the viewer in the story and can be a key factor in the success of a visual effects-heavy production.

— /ˌiː ɛks ˈɑː/

EXR is the file format (and file extension) of OpenEXR, a high-dynamic-range image format originally developed by Industrial Light & Magic and commonly used in visual effects and computer animation. EXR files can store many channels and layers in a single file, with 16-bit (half float) or 32-bit (float) pixel data, and support both lossless and lossy compression, making them a popular choice for compositing, color grading, and other post-production tasks.

F

— /fleɪm/

Autodesk Flame is a high-end compositing and finishing software used in visual effects and post-production workflows. It offers advanced tools for color grading, 3D compositing, and visual effects creation.

— /ˈfəʊkəl lɛŋ(k)θ/

Focal length is the distance between the optical center of a lens and the sensor (or film) when the lens is focused at infinity. In VFX, the focal length of a camera lens is important because it determines the field of view and affects the way objects appear in the final image. Specifically, the focal length can impact the apparent perspective distortion, the depth of field, and the overall composition of the shot.
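Together with the sensor size, the focal length determines the field of view, which matters when a CG camera has to match the real one. The sketch below uses the standard formula for an ideal rectilinear lens focused at infinity, FOV = 2·atan(sensor width / (2·focal length)); real lenses add distortion and focus breathing. The function name is illustrative.

```python
import math

def horizontal_fov_degrees(focal_length_mm, sensor_width_mm):
    """Horizontal angle of view of an ideal rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))

# A 50 mm lens on a 36 mm wide (full-frame) sensor.
print(round(horizontal_fov_degrees(50.0, 36.0), 1))  # 39.6
```

The same lens on a smaller sensor gives a narrower field of view, which is why matchmove artists need both values from set.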

— /freɪm/

A single image in a sequence of images that make up a video or animation.

— /freɪm reɪt/

It is the number of images (frames) displayed per second (fps) in a video or animation, which determines the smoothness and perceived motion of the footage. For example, films are typically shot and projected at 24 fps, while television and online content are often produced at 25 or 30 fps. A higher frame rate generally results in smoother and more fluid motion, while a lower frame rate may appear choppy or stuttering.

— /ˈef træk/

ftrack is a project management and production tracking software that is widely used in the visual effects industry. It is designed to help VFX studios and artists manage their projects, assets, and workflows more efficiently, from pre-production to post-production.

— /fʊl siː dʒi/

Full CG (Computer Graphics) refers to the process of creating visual effects entirely with computer-generated imagery (CGI). In other words, all of the elements in the shot are created using digital tools such as 3D software, without the use of practical effects or live-action footage.

— /ɛfˈɛks ˌsɪmjʊˈleɪʃən/

Generating visual effects through the use of software simulations. This involves creating a virtual environment, defining the physical properties of objects within that environment, and then simulating how those objects interact with each other. For example, an FX simulation could be used to create the visual effects of a collapsing building, smoke and fire, as well as the behaviour of fluids or fur in a computer-generated environment.
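The three steps above (set up a world, define physical properties, step the interaction forward) can be seen in the smallest possible simulation: one particle falling under gravity and bouncing off a ground plane. This is a toy sketch with assumed values (one time step per frame at 24 fps, gravity of −9.81 m/s², a hypothetical `bounce` coefficient); production solvers use many substeps and far more sophisticated physics.

```python
def simulate_particle(height, steps, dt=1.0 / 24.0, gravity=-9.81, bounce=0.5):
    """Drop one particle from `height` metres and return its height per step.

    Uses semi-implicit Euler integration and a ground plane at y = 0.
    """
    y, vy = height, 0.0
    positions = []
    for _ in range(steps):
        vy += gravity * dt        # gravity changes the velocity
        y += vy * dt              # velocity changes the position
        if y < 0.0:               # collision with the ground plane
            y = 0.0
            vy = -vy * bounce     # reflect the velocity and lose energy
        positions.append(y)
    return positions

heights = simulate_particle(2.0, 96)  # four seconds at one step per frame
print(min(heights), max(heights))
```

Changing a physical property such as `bounce` changes the behaviour of the whole simulation, which is the kind of control FX artists work with.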

G

— /ˈɡamə/

Gamma describes the nonlinear relationship between the pixel values of an image and the brightness (luminance) with which they are captured or displayed. Gamma is an important concept in VFX because it affects how images are displayed and how they are perceived by viewers. Gamma correction is a technique used to adjust the gamma of an image to match the expected display conditions. This is important because different devices and display systems may have different gamma characteristics, which makes it difficult to judge an image's true appearance without correction.
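A simple power-law model shows what gamma encoding and decoding do to a value. The sketch assumes a pure power curve with gamma 2.2 and values from 0 to 1; real standards such as sRGB use a piecewise curve that only approximates this. The function names are illustrative.

```python
def gamma_encode(linear, gamma=2.2):
    """Linear light (0..1) to a gamma-encoded value for display."""
    return linear ** (1.0 / gamma)

def gamma_decode(encoded, gamma=2.2):
    """Gamma-encoded value (0..1) back to linear light."""
    return encoded ** gamma

# 50% linear light is stored as a much brighter code value.
print(round(gamma_encode(0.5), 3))  # 0.73
```

This is also why compositing math, such as blending or blurring, is usually done on linear values and only encoded for display at the end.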

— /ˈɡaʊsɪən ˈsplatɪŋ/

Gaussian splatting is a technique used to efficiently render and visualize 3D point cloud data onto a 2D image or surface. This technique is particularly useful for representing complex and detailed scenes, such as particle systems, volumetric data, or point-based rendering. In Gaussian splatting, each point in the 3D point cloud is projected onto the 2D image or surface using a suitable projection method. At the projected position on the image or surface, a Gaussian distribution or "splat" is generated around the point. The size and shape of the Gaussian distribution are determined by parameters such as the standard deviation or radius, which control the extent of the influence of the point.

The Gaussian distributions from all points in the 3D point cloud are then accumulated or blended together at each pixel or location on the image or surface. This accumulation process results in the final image or representation of the point cloud.

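Gaussian splatting is often mentioned alongside NeRF (Neural Radiance Fields), because both can reconstruct a 3D scene from photographs and render it from new viewpoints. The two terms are not interchangeable, however: a NeRF stores the scene inside a neural network and renders it by sampling along camera rays, whereas Gaussian splatting represents the scene explicitly as Gaussians that are projected and blended onto the image.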
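The projection-and-accumulation process described above can be sketched for points that have already been projected to pixel coordinates. The sketch assumes isotropic (round) Gaussians, plain additive accumulation, and a cutoff at three standard deviations; modern 3D Gaussian Splatting instead uses stretched, rotated Gaussians with opacity and color, blended in depth order. The function name is hypothetical.

```python
import math

def splat_points(points, width, height):
    """Accumulate 2D Gaussian splats into a width x height grid.

    points: list of (x, y, sigma, weight) in pixel coordinates,
    i.e. already projected from 3D.
    """
    image = [[0.0] * width for _ in range(height)]
    for px, py, sigma, weight in points:
        # Skip pixels further than 3 standard deviations from the point.
        radius = int(math.ceil(3.0 * sigma))
        for y in range(max(0, int(py) - radius), min(height, int(py) + radius + 1)):
            for x in range(max(0, int(px) - radius), min(width, int(px) + radius + 1)):
                d2 = (x - px) ** 2 + (y - py) ** 2
                image[y][x] += weight * math.exp(-d2 / (2.0 * sigma * sigma))
    return image

img = splat_points([(4.0, 4.0, 1.0, 1.0), (6.0, 4.0, 1.5, 0.5)], 9, 9)
```

A larger `sigma` spreads a point's influence over more pixels, which is how sparse points can still fill the image without holes.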

— /ɡleɪz/

Glaze is a tool that allows artists to “mask” their personal style to prevent it from being scraped and learned by AI companies. It works in a similar way to Nightshade: it changes an image's pixels in subtle ways that are invisible to the human eye but cause machine-learning models to interpret the image as something different from what it actually shows.

— /ɡlɪtʃ/

A temporary or random malfunction in a visual effect or digital image, often resulting in a distortion or disruption of the image or effect. Glitches are sometimes used intentionally in VFX as a stylistic choice, such as to create a digital distortion effect, but they can also be an unwanted artifact that needs to be corrected or removed. Glitches can be caused by a variety of factors, such as compression, data corruption, or rendering errors.

— /ɡreɪn/

The individual particles of silver halide in a piece of film that capture an image when exposed to light. Because the distribution and sensitivity of these particles are not uniform, they are perceived (particularly when projected) as causing a noticeable graininess. Different film stocks will have different visual grain characteristics.

— /ɡriːn skriːn/

Literally, a screen of some sort of green material that is suspended behind an object for which a matte is to be extracted. Ideally, the green screen appears to the camera as a completely uniform green field. It is used so that objects in front of the green screen can be cut out and placed into a different environment.

— /ɡriːn spɪl/

Any contamination of the foreground subject by light reflected from the green screen in front of which it is placed.
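A common way to remove green spill is to limit the green channel so it can never exceed a value derived from red and blue. The sketch below uses the average of red and blue as that limit; this is one of several rules in use (others use the maximum of red and blue), and the function name is hypothetical. It assumes linear RGB values.

```python
def suppress_green_spill(r, g, b):
    """Clamp green to the average of red and blue (a simple despill rule)."""
    limit = (r + b) / 2.0
    return r, min(g, limit), b

# A skin pixel with a green cast from the screen.
print(suppress_green_spill(0.6, 0.8, 0.4))  # green reduced to about 0.5
```

Neutral colors pass through unchanged, because their green is already equal to the limit.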

H

— /ˈhand(ə)lz/

Extra frames at the beginning and end of a shot that are not intended for use in the final shot but are included in the composite in case the shot's length changes slightly.
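The frame range to work on is the cut range extended by the handles on both sides. The numbers in this sketch are assumptions for illustration: a shot starting at frame 1001 and 8-frame handles are common studio conventions, but each production defines its own. The function name is hypothetical.

```python
def frame_range_with_handles(cut_in, cut_out, handles=8):
    """Return the (first, last) frame to work on, inclusive, including handles."""
    return cut_in - handles, cut_out + handles

# A 100-frame cut from 1001 to 1100 with 8-frame handles.
print(frame_range_with_handles(1001, 1100))  # (993, 1108)
```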

— /hʌɪ dʌɪˈnamɪk reɪn(d)ʒ ˈɪmɪdʒ/

A technique for capturing the extended tonal range in a scene by shooting multiple pictures at different exposures and combining them into a single image file that can express a greater dynamic range than a single exposure can capture.
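The combining step can be sketched for a single pixel: divide each exposure's value by its exposure time to estimate scene brightness, then average the estimates, trusting mid-tones more than near-black or clipped values. This assumes a linear sensor response and perfectly aligned frames; real HDR tools also estimate the camera's response curve and align the brackets. The function name and the hat-shaped weighting are illustrative choices.

```python
def merge_exposures(pixel_values, exposure_times):
    """Estimate relative scene radiance for one pixel from bracketed exposures.

    pixel_values: linear sensor values in 0..1, one per exposure.
    exposure_times: matching shutter times in seconds.
    """
    def weight(v):
        # Hat function: 1.0 at mid-grey, 0.0 at pure black or full clipping.
        return max(0.0, 1.0 - abs(2.0 * v - 1.0))

    numerator = 0.0
    denominator = 0.0
    for v, t in zip(pixel_values, exposure_times):
        w = weight(v)
        numerator += w * (v / t)
        denominator += w
    if denominator == 0.0:
        raise ValueError("every exposure is black or clipped for this pixel")
    return numerator / denominator

# Three brackets one stop apart; the longest exposure is clipped at 1.0.
print(merge_exposures([0.25, 0.5, 1.0], [0.125, 0.25, 0.5]))  # 2.0
```

The clipped exposure gets zero weight, so it does not drag the result down.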

— /huːˈdiːniː/

Houdini, developed by SideFX, is procedural 3D software for modeling, animation, VFX, lighting, rendering, and more.

I

— /ˈʌɪmaks/

The term IMAX stands for "Image MAXimum", referring to the system's ability to produce extremely high-quality and detailed images that are much larger than those of traditional cinema projection systems.

— /ˌɪn ˈkam(ə)rə ɪˈfɛkt/

In-Camera Effects are special effects achieved exclusively through the use of the camera at the time of recording by manipulating the camera itself or tweaking its basic settings without the use of VFX. Sometimes a combination of both in-camera effects and VFX can achieve the best possible outcome.

— /ˌɪntəˈleɪsɪŋ/

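The technique used to produce video images in which each frame is split into two fields, one containing the odd-numbered lines and the other the even-numbered lines. The two fields are displayed in rapid alternation so that together they appear to form a complete frame.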
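Splitting a frame into fields and weaving them back together can be sketched with a frame represented as a list of rows. Which field is shown first (field order) varies between formats, so the names `first` and `second` are deliberately neutral; the function names are hypothetical.

```python
def split_fields(frame):
    """Split a frame (list of rows) into its two fields."""
    return frame[0::2], frame[1::2]

def weave_fields(first, second):
    """Interleave two fields back into a full frame."""
    frame = []
    for a, b in zip(first, second):
        frame.extend([a, b])
    # With an odd number of rows, the first field has one extra line.
    frame.extend(first[len(second):])
    return frame

rows = ["row0", "row1", "row2", "row3", "row4"]
first, second = split_fields(rows)
print(first, second)  # ['row0', 'row2', 'row4'] ['row1', 'row3']
```

When the two fields were captured at different moments, weaving them together shows the familiar comb-like artifacts on moving objects.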

— /ɪnˌtəːpəˈleɪʃn/